3 Ways To Automate Your Labeling With Label Studio

In the rapidly advancing field of AI and machine learning, data labeling is crucial for building high-quality models. However, manual labeling is often time-consuming and very expensive. Label Studio offers several methods to automate and streamline the labeling process, ensuring that your models receive high-quality labeled data more efficiently, while still keeping a human in the loop for more difficult or tricky items. In this post, we'll delve into three effective methods to automate your labeling using Label Studio Community Edition: bootstrapping labels, semi-automated labeling, and active learning. Each method is discussed in detail, followed by links to technical resources for implementation.

It is also important to note that each of these methods requires the use of our Machine Learning Backend. Rather than repeating the ML Backend setup instructions for each example, we’ll cover them once here at the beginning of the post.

Installing Label Studio and installing the Machine Learning Backend

- Install Label Studio: Begin by installing Label Studio on your local machine or server. This can be done using pip or Docker, depending on your preference. This Zero to One tutorial post may be helpful here.

- Install the ML Backend: Integrate your model development pipeline with your data labeling workflow using the Label Studio ML Backend. This is an SDK that wraps your machine learning code and turns it into a web server. The web server can be connected to a running Label Studio instance to automate labeling tasks.

Now that you’ve got Label Studio installed and the ML Backend ready to go, we’ll take a deeper look at the ways in which Label Studio helps you save time and money by automating your labeling.

1. Bootstrapping Labels

Bootstrapping labels is a DIY workflow in Label Studio Community Edition built on the interactive LLM connector. This method uses large language models (LLMs) like GPT-4 to generate initial labels for your dataset, significantly reducing the initial manual effort while still letting humans review and adjust the dataset as needed.

How It Works

Bootstrapping labels with Label Studio and GPT-4 involves several steps:

- Configure Label Studio:

- Set up your labeling project by defining the labeling interface and importing your dataset. See these configuration examples to get you started.

- Connecting to GPT-4:

- Interactive LLM Connector: Use the interactive LLM connector to integrate GPT-4. This requires obtaining an API key from OpenAI and configuring it within Label Studio.

- Define Prompt Templates: Create prompt templates that define how data will be presented to GPT-4 for labeling.

- Providing Initial Examples:

- Manual Labeling: Label a small subset of your dataset manually. These examples will help the LLM understand the labeling criteria and context.

- Feedback Loop: Use these labeled examples to refine the prompt and steer the LLM’s output toward your specific task.

- Generating Labels:

- Model Inference: Apply GPT-4 to the unlabeled dataset to generate initial labels. The model uses the initial examples to guide its labeling decisions.

- Review and Adjustment: Review the generated labels and make necessary adjustments to ensure accuracy. This step may involve iterative feedback to improve model performance.
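
As a rough illustration of the prompt template and initial-example steps above, here is a minimal sketch in Python. It is not the interactive LLM connector itself: the label set, the `sentiment` and `text` tag names, and the `llm` callable (a stand-in for whatever function sends a prompt to GPT-4 and returns its reply) are all assumptions made for this example.

```python
from typing import Callable, Optional

# Hypothetical label set; replace with the choices in your labeling config.
LABELS = ["Positive", "Negative", "Neutral"]


def build_prompt(text: str, examples: list[tuple[str, str]]) -> str:
    """Build a few-shot prompt from manually labeled (text, label) examples."""
    lines = [
        "Classify the text into exactly one of: " + ", ".join(LABELS) + ".",
        "Answer with the label only.",
        "",
    ]
    for example_text, example_label in examples:
        lines += [f"Text: {example_text}", f"Label: {example_label}", ""]
    lines += [f"Text: {text}", "Label:"]
    return "\n".join(lines)


def to_prediction(raw_answer: str) -> Optional[dict]:
    """Convert the raw LLM reply into a Label Studio prediction, if usable."""
    answer = raw_answer.strip()
    if answer not in LABELS:
        return None  # unusable reply: leave the task for a human annotator
    return {
        "model_version": "llm-bootstrap",  # assumed name, for traceability
        "result": [{
            # Assumed tag names; they must match your labeling config.
            "from_name": "sentiment",
            "to_name": "text",
            "type": "choices",
            "value": {"choices": [answer]},
        }],
    }


def bootstrap(tasks: list[dict],
              examples: list[tuple[str, str]],
              llm: Callable[[str], str]) -> list[dict]:
    """Attach an LLM-generated prediction to every task that gets a valid reply."""
    labeled = []
    for task in tasks:
        answer = llm(build_prompt(task["data"]["text"], examples))
        prediction = to_prediction(answer)
        labeled.append({
            "data": task["data"],
            "predictions": [prediction] if prediction else [],
        })
    return labeled
```

Replies that fall outside the label set are dropped rather than guessed at, so those tasks reach annotators without a suggestion and the human stays in the loop for the difficult items.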

For a detailed tutorial on bootstrapping labels using GPT-4, refer to the step-by-step tutorial written by Jimmy Whitaker, one of our resident Data Scientists.

2. Semi-Automated Labeling

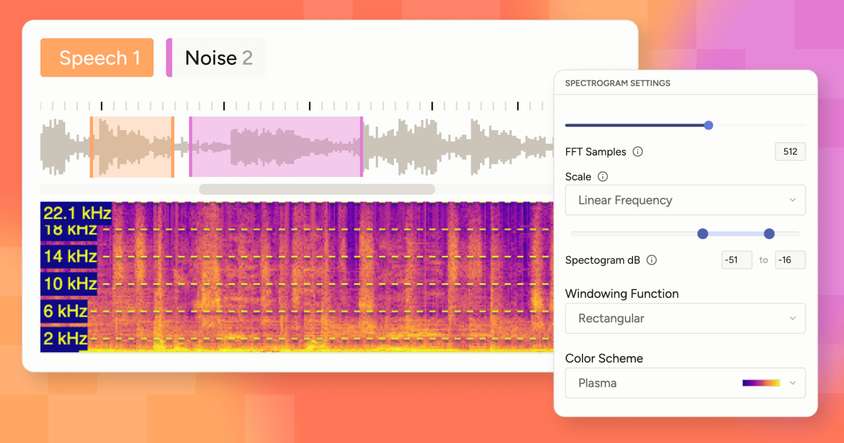

Semi-automated labeling combines automated pre-labeling with human verification. This method can significantly speed up the annotation process, especially for large datasets, by leveraging both custom models and popular pre-trained models like Segment Anything for image segmentation, Grounding DINO for object detection, and spaCy for named entity recognition (NER).

How It Works

Semi-automated labeling with Label Studio involves several key steps:

- Configure Label Studio:

- Set up your labeling project by defining the labeling interface and importing your dataset.

- Model Integration:

- Set Up ML Backend: Integrate your chosen pre-trained or custom model with Label Studio. This involves configuring the ML backend and connecting it to your Label Studio instance.

- Supported Models: Label Studio supports a variety of models, including Segment Anything for image segmentation, Grounding DINO for object detection, and spaCy for NER.

- Pre-Labeling:

- Automated Label Generation: Use the integrated model to generate initial labels for your dataset. This step significantly reduces the manual effort required for labeling.

- Batch Processing: Process large batches of data through the model to quickly generate a substantial number of labeled examples.

- Interactive Labeling:

- Review and Refinement: Human annotators review the pre-labeled data and make necessary adjustments to ensure accuracy. Label Studio’s interactive interface makes this process intuitive and efficient.

- Quality Control: Implement quality control measures to ensure the labeled data meets your standards. This may include cross-verification by multiple annotators and automated consistency checks.

- Iteration and Feedback:

- Model Improvement: Use feedback from human annotators to improve the model’s performance. This iterative process helps in refining the model for better accuracy in future pre-labeling tasks.
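
The pre-labeling and batch processing steps above can be sketched as follows. This is an illustration under stated assumptions, not a Label Studio API: `prelabel` and `batched` are hypothetical helpers, `keyword_model` is a toy stand-in for a real model, and the `label` and `text` tag names assume a matching NER labeling configuration. The output follows the task structure Label Studio accepts on import, where each task carries its `data` plus a `predictions` list.

```python
from typing import Callable, Iterator


def batched(items: list, size: int) -> Iterator[list]:
    """Yield consecutive slices of `items` with at most `size` elements."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def prelabel(texts: list[str],
             predict_batch: Callable[[list[str]], list[list[dict]]],
             batch_size: int = 32,
             model_version: str = "prelabel-v1") -> list[dict]:
    """Run the model batch by batch and wrap each output as an importable task."""
    tasks = []
    for batch in batched(texts, batch_size):
        for text, result in zip(batch, predict_batch(batch)):
            tasks.append({
                "data": {"text": text},
                "predictions": [{"model_version": model_version, "result": result}],
            })
    return tasks


def keyword_model(batch: list[str]) -> list[list[dict]]:
    """Toy stand-in model: tags every occurrence of 'Berlin' as LOC."""
    results = []
    for text in batch:
        regions = []
        start = text.find("Berlin")
        while start != -1:
            end = start + len("Berlin")
            regions.append({
                "from_name": "label",  # assumed Labels tag name
                "to_name": "text",     # assumed Text tag name
                "type": "labels",
                "value": {"start": start, "end": end,
                          "text": text[start:end], "labels": ["LOC"]},
            })
            start = text.find("Berlin", end)
        results.append(regions)
    return results


tasks = prelabel(["I live in Berlin", "No city here"], keyword_model, batch_size=1)
```

Serializing `tasks` with `json.dump` gives a file that can be imported into a project, where the predictions appear as suggestions for annotators to accept or correct.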

Semi-Automated Labeling Implementation Example

To provide a practical example, let's walk through a semi-automated labeling workflow using spaCy for NER.

Setting Up Label Studio:

Create a new labeling project in Label Studio and define the labeling interface for NER.

Integrating spaCy:

Create a custom Python script to connect spaCy to Label Studio’s ML backend:

```python
import spacy
from label_studio_ml.model import LabelStudioMLBase


class SpacyNER(LabelStudioMLBase):
    def __init__(self, **kwargs):
        super(SpacyNER, self).__init__(**kwargs)
        # Load the small English pipeline once, when the backend starts.
        self.nlp = spacy.load("en_core_web_sm")

    def predict(self, tasks, **kwargs):
        predictions = []
        for task in tasks:
            # Assumes each task stores its text under the "text" data key.
            text = task['data']['text']
            doc = self.nlp(text)
            predictions.append({
                "result": [
                    {
                        # "label" and "text" must match the names of the
                        # Labels and Text tags in the labeling configuration.
                        "from_name": "label",
                        "to_name": "text",
                        "type": "labels",
                        "value": {
                            "start": ent.start_char,
                            "end": ent.end_char,
                            "labels": [ent.label_]
                        }
                    } for ent in doc.ents
                ]
            })
        return predictions
```

- Pre-Labeling with spaCy:

- Use the custom model to generate initial NER labels for your dataset. Import the pre-labeled data into Label Studio for review.

- Review and Refinement:

- Annotators review the pre-labeled data, make necessary corrections, and ensure high-quality annotations. Use Label Studio’s interface for efficient review and adjustment.

- Iteration and Improvement:

- Continuously improve the model by incorporating feedback from annotators and retraining the model on the corrected data.
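
To make the retraining step more concrete, here is a minimal sketch that turns corrected annotations into training examples. It assumes the tasks were exported from Label Studio in JSON format, where each task has a `data` dictionary and an `annotations` list whose `result` entries hold the labeled spans. The helper name `to_training_examples` and the choice to keep only the first non-cancelled annotation per task are assumptions for illustration. The output uses the `(text, {"entities": [...]})` shape that spaCy training code commonly consumes, for example through `Example.from_dict`.

```python
def to_training_examples(exported_tasks: list[dict]) -> list[tuple[str, dict]]:
    """Convert exported, human-corrected NER tasks into (text, annotations) pairs."""
    examples = []
    for task in exported_tasks:
        # Skip annotations the annotator cancelled (skipped tasks).
        annotations = [
            a for a in task.get("annotations", []) if not a.get("was_cancelled")
        ]
        if not annotations:
            continue  # nothing reviewed yet for this task
        # Assumption: one annotation per task, so take the first one.
        entities = sorted(
            (r["value"]["start"], r["value"]["end"], r["value"]["labels"][0])
            for r in annotations[0]["result"]
            if r.get("type") == "labels"
        )
        examples.append((task["data"]["text"], {"entities": entities}))
    return examples


exported = [
    {
        "data": {"text": "Ada lives in Paris"},
        "annotations": [{
            "was_cancelled": False,
            "result": [
                {"type": "labels", "value": {"start": 13, "end": 18, "labels": ["GPE"]}},
                {"type": "labels", "value": {"start": 0, "end": 3, "labels": ["PERSON"]}},
            ],
        }],
    },
    {"data": {"text": "skipped"}, "annotations": [{"was_cancelled": True, "result": []}]},
    {"data": {"text": "unlabeled"}, "annotations": []},
]

training_data = to_training_examples(exported)
```

If several annotators label the same task, you would need to decide how to reconcile their annotations before training; that step is left out here.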

For additional example code and tutorials, you can refer to the Label Studio ML guide and ML tutorials.

3. Active Learning

Active learning optimizes the labeling process by selecting the most informative samples for annotation. Instead of randomly selecting data points, active learning algorithms identify and prioritize samples that will have the greatest impact on model performance. This results in greater efficiency and improved label quality.

How It Works

Active learning with Label Studio Community Edition involves a manual approach where you can sort tasks and retrieve predictions to simulate an active learning process. Here’s an overview of the workflow:

- Configure Label Studio:

- Set up your labeling project by defining the labeling interface and importing your dataset.

- Initial Model Training:

- Small Labeled Dataset: Start by training an initial model using a small labeled dataset. This model will be used to identify the most informative samples for further annotation.

- Training Setup: Configure the training environment and ensure that the model is properly connected to Label Studio via the ML Backend for predictions.

- Uncertainty Sampling:

- Prediction and Uncertainty Estimation: Use the initial model to predict labels for the unlabeled data. Identify samples with the highest uncertainty, as these are likely to be the most informative. (With Label Studio Community Edition this is a manual process, but the HumanSignal Platform has this built in.)

- Selection Criteria: Implement criteria for selecting samples based on model uncertainty. This can be done using various uncertainty metrics such as entropy or margin sampling.

- Manual Annotation:

- Task Annotation: Annotators label the selected samples, focusing on those with the highest uncertainty. This targeted approach ensures that each annotated sample provides maximum value to the model.

- Feedback Integration: Incorporate feedback from annotators to refine the model’s predictions and improve its performance.

- Iteration and Retraining:

- Iterative Process: Repeat the process, continuously refining the model with the most informative samples. Each iteration should improve the model’s accuracy and reduce the uncertainty in its predictions.

- Active Learning Loop: Manually manage the active learning loop where the model, annotators, and Label Studio work together seamlessly.
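
The uncertainty sampling step above can be sketched in a few lines of plain Python. This is a simplified illustration, assuming you have already retrieved per-class probabilities for each unlabeled task. The function names and the `predictions` dictionary (task ID mapped to class probabilities) are hypothetical and not part of Label Studio.

```python
import math


def entropy(probs: list[float]) -> float:
    """Shannon entropy of a class distribution; higher means more uncertain."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def margin(probs: list[float]) -> float:
    """Gap between the two most likely classes; smaller means more uncertain."""
    top, second = sorted(probs, reverse=True)[:2]
    return top - second


def rank_by_uncertainty(predictions: dict, metric: str = "entropy") -> list:
    """Return task IDs ordered from most to least uncertain."""
    if metric == "entropy":
        return sorted(predictions, key=lambda tid: -entropy(predictions[tid]))
    if metric == "margin":
        return sorted(predictions, key=lambda tid: margin(predictions[tid]))
    raise ValueError(f"unknown metric: {metric}")


def select_for_annotation(predictions: dict, k: int, metric: str = "entropy") -> list:
    """Pick the k most uncertain tasks to send to annotators next."""
    return rank_by_uncertainty(predictions, metric)[:k]


# Hypothetical model output: task ID -> probabilities over three classes.
predictions = {
    "a": [0.98, 0.01, 0.01],  # confident
    "b": [0.40, 0.35, 0.25],  # very uncertain
    "c": [0.70, 0.20, 0.10],  # in between
}
next_batch = select_for_annotation(predictions, k=2)
```

Entropy looks at the whole distribution, while margin only compares the top two classes, so the two metrics can rank tasks differently when there are many classes. One way to use the ranking in the Community Edition is to store a confidence value as the prediction score and sort tasks by it, then label from the least confident end.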

For a more detailed guide on setting up an active learning loop in the Community Edition, visit the active learning documentation. This resource provides in-depth instructions on configuring your model, integrating it with Label Studio, and optimizing the active learning process.

Bonus! Fully Automate Labeling With GenAI

We’ve heard from many teams who have explored using LLMs for data labeling but could not reach the accuracy and reliability that human-in-the-loop labeling provides and that production models require. That’s why we developed a new Prompts interface in the HumanSignal Platform (formerly Label Studio Enterprise) to deliver the efficiency of auto-labeling without sacrificing quality.

You can enable subject matter experts to efficiently scale their knowledge using a natural language interface, rather than training a large team of annotators. And you can ensure high-quality annotations with real-time metrics based on ground truth data, plus constrained generation and human-in-the-loop workflows built into the platform. But one of the biggest benefits is the time you save by not bouncing between tools, creating custom scripts, or worrying about multiple import/export formats, because the HumanSignal Platform gives you a streamlined workflow from data sourcing to ground truth creation to auto-labeling and optional human-in-the-loop review.

To get a sense for exactly how this could benefit your labeling efforts, see what our global manufacturing customer Geberit was able to accomplish using the new Prompts interface for auto-labeling:

- 5x faster labeling throughput

- 95% annotation accuracy against ground truth at scale

- 4-5x cost savings vs. manual and semi-automated labeling efforts

Contact our sales team to learn more and schedule a demo.

Conclusion

Automating your labeling process with Label Studio Community Edition can significantly enhance efficiency and quality in data annotation. By leveraging methods such as bootstrapping labels, semi-automated labeling, and active learning, you can streamline your workflows and focus more on building and refining your models. Each method offers unique advantages and can be tailored to meet your specific needs.

By implementing these techniques, you can reduce the manual effort involved in data labeling, improve the consistency and quality of your labeled data, and ultimately build better-performing machine learning models. For more detailed instructions and technical resources, be sure to explore the linked resources for each method. Happy labeling!