How to use our Generative AI Templates

Templates for adapting foundation models to your needs, including chatbot model assessment, fine-tuning LLMs, and visual ranking.

With the 1.8 release, we launched the all-new Generative AI template library: a set of templates designed to help you work with foundation models and adapt them to your needs right within Label Studio. Get started with fine-tuning models such as Llama 2, GPT-4, Whisper, Alpaca, and PaLM 2, among others.

Fine-tune models to your specific needs

Label Studio’s Generative AI template library includes templates that fit the following use cases.

- Supervised LLM Fine-Tuning: Optimize your LLM to generate responses given user-defined prompts for use in document classification, information retrieval, and customer support services.

- Human Preference Collection for RLHF: Get your LLM to the ChatGPT quality level in your own use cases by establishing human preference data from the responses generated through the supervised model.

- Chatbot Assessment: Collect human preference data with ease to better assess and improve the quality of your chatbot responses.

- LLM Ranker: Compare the responses from different LLMs and rank them with a handy drag-and-drop interface.

- Visual Ranker: Compare the outputs from different text-to-image models and rank them with a drag-and-drop interface.

How to get started with foundation models

Foundation models are large generative models that typically serve as the base of ML applications. Recent technological advancements make it easier than ever to work with these models and apply them to different needs. They have also become more widely accepted and used by the general public as the technology has matured.

Our generative AI template library grew out of seeing Label Studio users adapt our highly flexible open source interface to their needs, with more and more leaning towards reinforcement learning-type applications.

Explore the templates

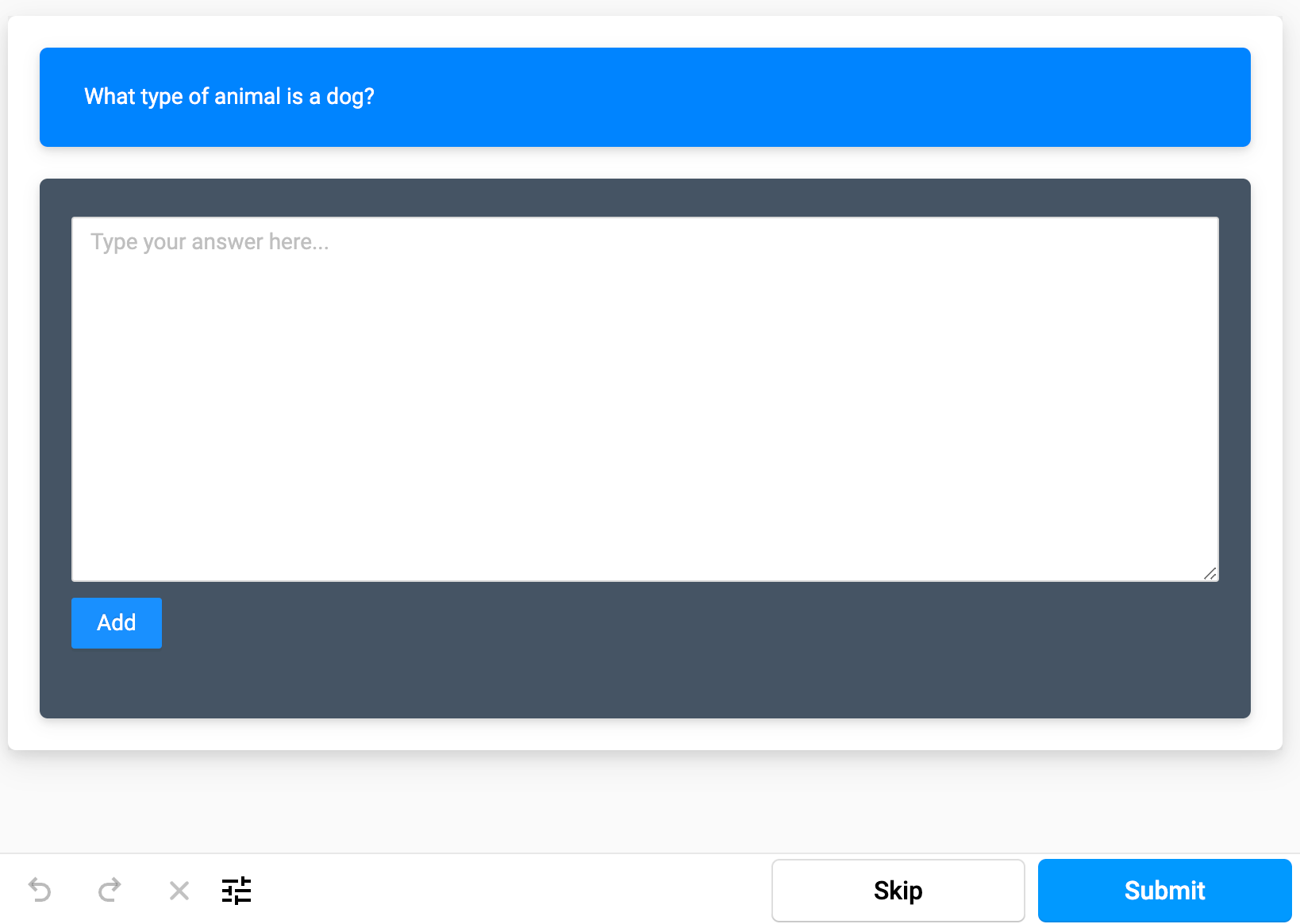

Supervised LLM Fine-Tuning

The Supervised LLM Fine-Tuning template is designed to help you train models to give more context-specific responses based on human input. This is helpful for document classification, information retrieval, and customer support services.

The template gathers text input from the annotator as the annotation itself. After annotations are completed and the dataset is exported, you can use the exported data to fine-tune models based on the human feedback received.

Get started with the Supervised LLM Fine-Tuning template by first preparing your dataset. The Label Studio documentation includes information about how to structure your JSON files for this type of work.
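As a rough illustration of the preparation step, the sketch below wraps a list of prompts in a JSON task list and saves it to disk. The field name `prompt` and the helper names are assumptions made for this example; the field must match whatever variable your labeling configuration actually references.

```python
import json
from pathlib import Path


def build_tasks(prompts):
    """Wrap each prompt in a task object with a "data" payload.

    The "prompt" key is an assumption: it has to match the variable
    used by the labeling configuration of your project.
    """
    return [{"data": {"prompt": p}} for p in prompts]


def write_tasks(tasks, path):
    """Save the tasks as a single JSON array, one object per task."""
    Path(path).write_text(json.dumps(tasks, indent=2), encoding="utf-8")
```

Calling `write_tasks(build_tasks(["Summarize our refund policy."]), "sft_tasks.json")` would produce a file with one task that is ready to upload.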

Create a new project in Label Studio, select the “Supervised LLM Fine-Tuning” template from our Generative AI templates option, and import your data.

Once the data is imported into Label Studio (either through API, cloud storage, or a JSON file upload), annotators can begin annotating the project.
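For the API route, a minimal sketch of the import call might look like the following. It only builds the request and does not send it. The base URL, project ID, and token are placeholders, and the `Token` authorization scheme is an assumption that depends on how your Label Studio instance issues credentials, so check the API documentation for your version.

```python
import json
import urllib.request


def build_import_request(base_url, project_id, token, tasks):
    """Prepare (but do not send) a request that imports tasks into a project.

    Assumes the project import endpoint and token-based authorization;
    both should be verified against your Label Studio version.
    """
    url = f"{base_url.rstrip('/')}/api/projects/{project_id}/import"
    body = json.dumps(tasks).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Token {token}",
            "Content-Type": "application/json",
        },
    )
```

Passing the returned object to `urllib.request.urlopen` would perform the upload against a running instance.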

When starting the annotation process, the Supervised LLM Fine-Tuning template will prompt annotators to write a response to the question. These answers can then be exported and used to build a dataset for further training the model.
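One way to turn an export into training data is sketched below. It assumes a JSON export in which each task carries its `data` and a list of `annotations`, that the annotator's answer is stored as a `textarea` result, and that the task field is called `prompt`. The output format (one prompt/response pair per line) is also just an illustrative choice.

```python
import json


def export_to_sft_records(tasks, prompt_field="prompt"):
    """Collect (prompt, response) pairs from exported annotations.

    Only "textarea" results are used; empty answers are skipped.
    """
    records = []
    for task in tasks:
        prompt = task["data"][prompt_field]  # assumed field name
        for annotation in task.get("annotations", []):
            for result in annotation.get("result", []):
                if result.get("type") != "textarea":
                    continue
                for text in result["value"].get("text", []):
                    text = text.strip()
                    if text:
                        records.append({"prompt": prompt, "response": text})
    return records


def to_jsonl(records):
    """Serialize records as JSON Lines, a common fine-tuning input format."""
    return "\n".join(json.dumps(r) for r in records)
```

If several annotators answer the same prompt, this sketch keeps every answer as a separate record; you may prefer to review or deduplicate them first.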

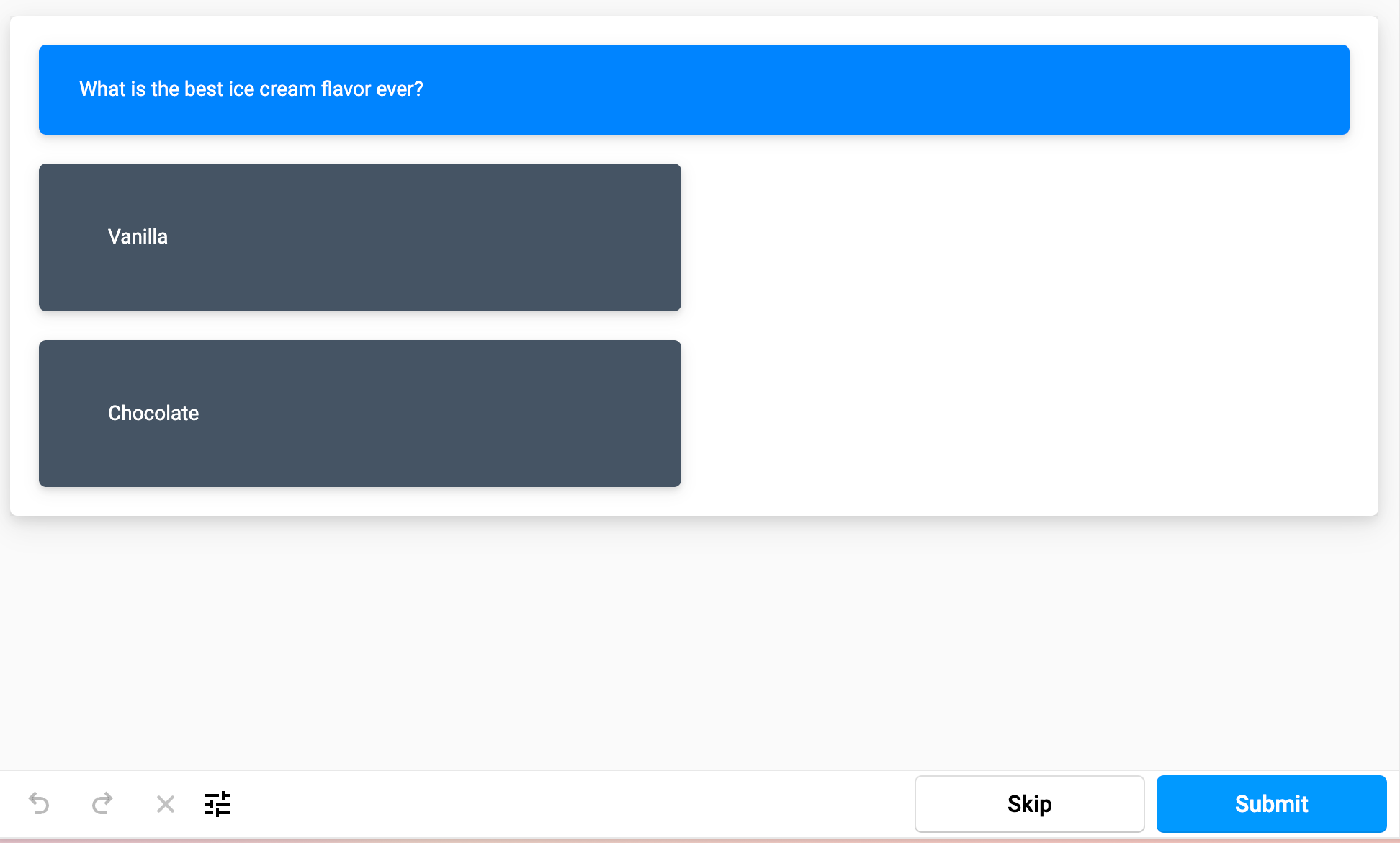

Human Preference Collection for RLHF

Get an existing or new LLM up to the ChatGPT quality level with this template, which collects comparison data to establish human preferences for the responses generated by the supervised model. This is particularly helpful when you’re building or establishing an RLHF workflow.

The template shows annotators competing responses and records which one they prefer. After annotations are completed and the dataset is exported, you can use the preference data to fine-tune models based on the human feedback received.

Get started with the Human Preference Collection template by first preparing your dataset. The Label Studio documentation includes information about how to structure your JSON files for this type of work.

Create a new project in Label Studio, select the “Human Preference Collection” template from our Generative AI templates option, and import your data.

Once the data is imported into Label Studio (either through API, cloud storage, or a JSON file upload), annotators can begin annotating the project.

When starting the annotation process, the Human Preference Collection template will show a question, then display two different responses. The annotator then picks the response that best answers the question, given the current context. These choices can then be exported and used within your RLHF workflow to further fine-tune models through human feedback.
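The exported choices can be reshaped into the chosen/rejected pairs that reward-model training typically expects. The sketch below assumes the selection is stored as a `pairwise` result whose value is `"left"` or `"right"`, and that the task fields are named `prompt`, `answer1`, and `answer2`; those field names are hypothetical and should be adjusted to your data.

```python
def export_to_preference_pairs(
    tasks,
    prompt_field="prompt",
    left_field="answer1",
    right_field="answer2",
):
    """Build {prompt, chosen, rejected} records from pairwise annotations."""
    pairs = []
    for task in tasks:
        data = task["data"]
        for annotation in task.get("annotations", []):
            for result in annotation.get("result", []):
                if result.get("type") != "pairwise":
                    continue
                selected = result["value"].get("selected")
                if selected == "left":
                    chosen, rejected = data[left_field], data[right_field]
                elif selected == "right":
                    chosen, rejected = data[right_field], data[left_field]
                else:
                    continue  # skip results without a clear choice
                pairs.append(
                    {
                        "prompt": data[prompt_field],
                        "chosen": chosen,
                        "rejected": rejected,
                    }
                )
    return pairs
```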

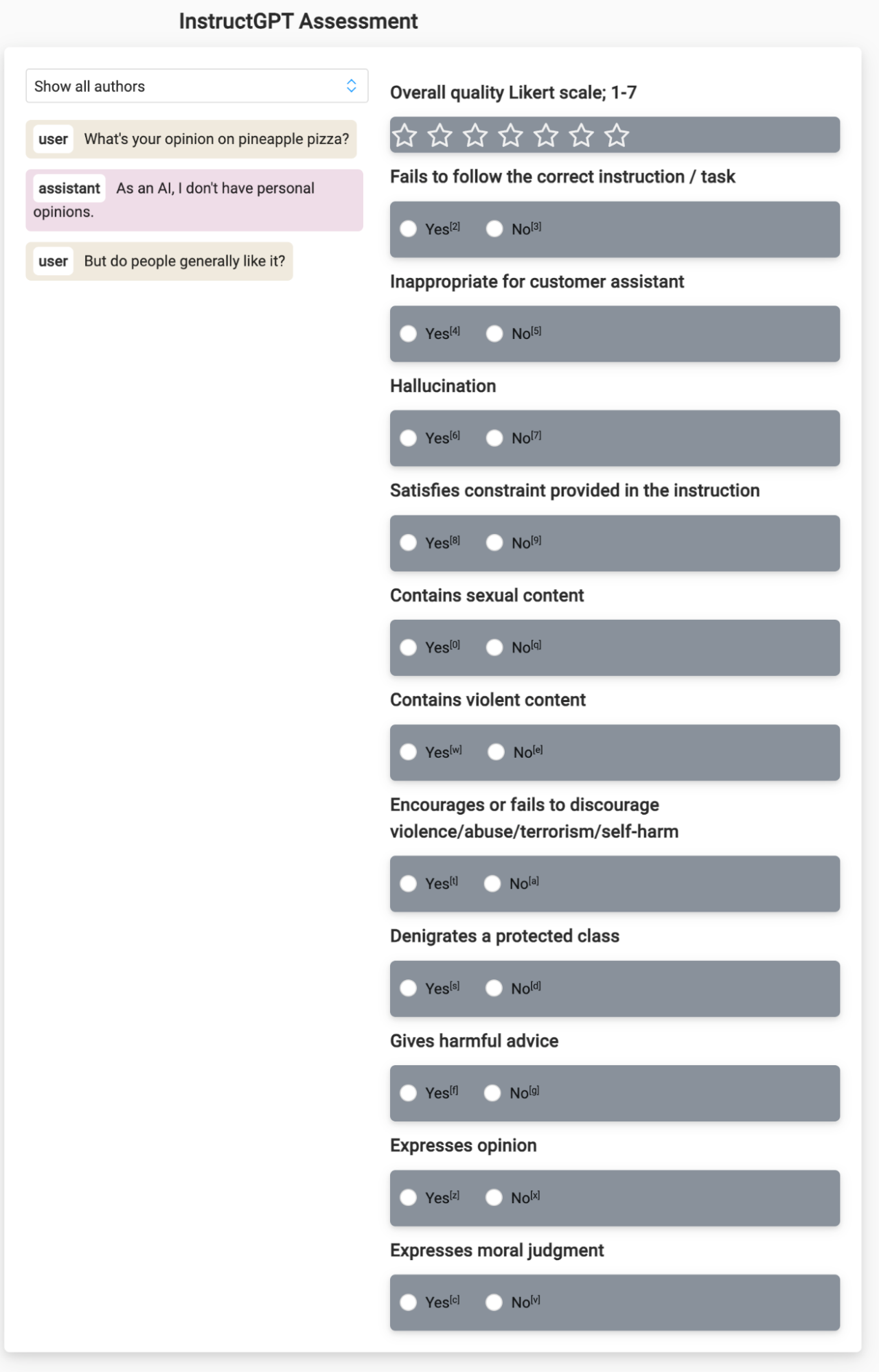

Chatbot Assessment

Fine-tune your own chatbot on internal expertise or your company’s data with the help of this template. Collect human preference data to assess the quality of the chatbot's responses and make them more context-specific.

The template gathers feedback on how an internal chatbot performs. After annotations are completed and the dataset is exported, you can use the exported data to improve the core model behind the chatbot.

Get started with the Chatbot Assessment template by first preparing your dataset. The Label Studio documentation includes information about how to structure your JSON files for this type of work.

Create a new project in Label Studio, select the “Chatbot Assessment” template from our Generative AI templates option, and import your data.

Once the data is imported into Label Studio (either through API, cloud storage, or a JSON file upload), annotators can begin annotating the project.

When starting the annotation process, the Chatbot Assessment template will display an interaction with a chatbot and prompt the annotator to answer a series of questions. The questions cover the appropriateness of content, safety, and opinion- or moral-based context. The annotator will then rate the chatbot's performance based on this assessment. These answers can then be exported and used to further improve the core model behind the chatbot.
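To get an overview of the exported assessments, you could tally the answers per question. The sketch below assumes the answers are stored as `choices` or `rating` results and uses each result's `from_name` as the question identifier; the question names in your project depend on the labeling configuration.

```python
from collections import Counter, defaultdict


def tally_assessment(tasks):
    """Count how often each answer was given, grouped by question."""
    tallies = defaultdict(Counter)
    for task in tasks:
        for annotation in task.get("annotations", []):
            for result in annotation.get("result", []):
                question = result.get("from_name")
                value = result.get("value", {})
                if result.get("type") == "choices":
                    for choice in value.get("choices", []):
                        tallies[question][choice] += 1
                elif result.get("type") == "rating" and "rating" in value:
                    tallies[question][value["rating"]] += 1
    return {question: dict(counts) for question, counts in tallies.items()}
```

The resulting counts make it easier to spot questions where the chatbot is frequently flagged, which can guide what to fix first.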

LLM Ranker

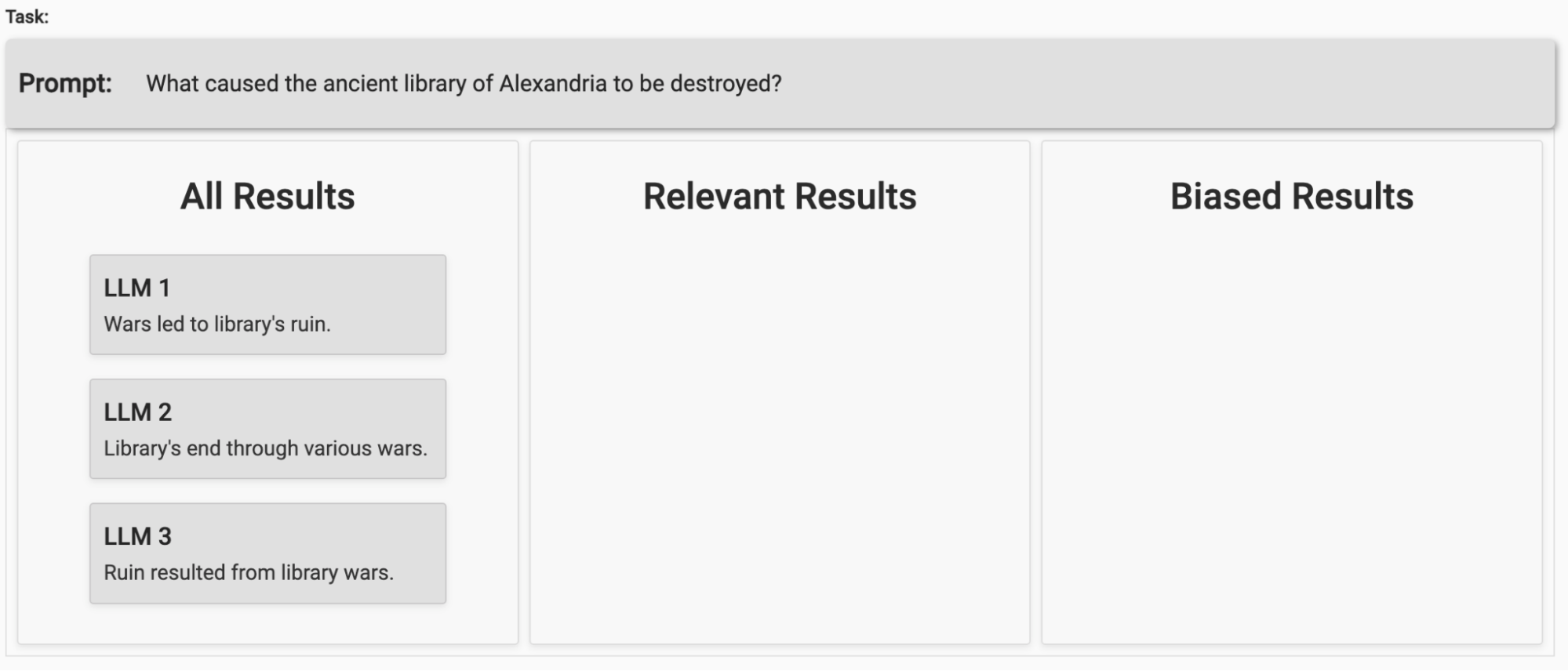

Help find the right LLM for you by establishing a workflow to rank the quality of different LLM responses with this template. Compare the quality of responses from different LLMs and rank them with a handy drag-and-drop interface.

The template helps you determine the best LLM for your needs based on how it has answered your questions. After annotations are completed, you can better understand which LLM may be best for your needs, whether you’re using one right out of the box or planning on further fine-tuning.

Get started with the LLM Ranker template by first preparing your dataset. The Label Studio documentation includes information about how to structure your JSON files for this type of work.

Create a new project in Label Studio, select the “LLM Ranker” template from our Generative AI templates option, and import your data.

Once the data is imported into Label Studio (either through API, cloud storage, or a JSON file upload), annotators can begin annotating the project.

When starting the annotation process, the LLM Ranker template will display a prompt and results from three different LLMs. The annotator will then rank each model’s performance by dragging and dropping items into either relevant results or biased results. These answers can then be evaluated in aggregate to determine the most effective LLM for your needs.
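A simple aggregate is the share of tasks in which each model's response landed in the relevant bucket. The sketch below works on a simplified, hypothetical structure (one dictionary per annotated task, mapping a bucket name to the models placed in it) rather than on the raw export, so you would first need to map exported item IDs back to model names.

```python
from collections import Counter


def bucket_share(rankings, bucket="relevant"):
    """Return, per model, the fraction of placements that fell in `bucket`.

    `rankings` is a list of dicts such as
    {"relevant": ["model_a"], "biased": ["model_b", "model_c"]}.
    """
    placed = Counter()
    seen = Counter()
    for ranking in rankings:
        for name, models in ranking.items():
            for model in models:
                seen[model] += 1
                if name == bucket:
                    placed[model] += 1
    return {model: placed[model] / seen[model] for model in seen}
```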

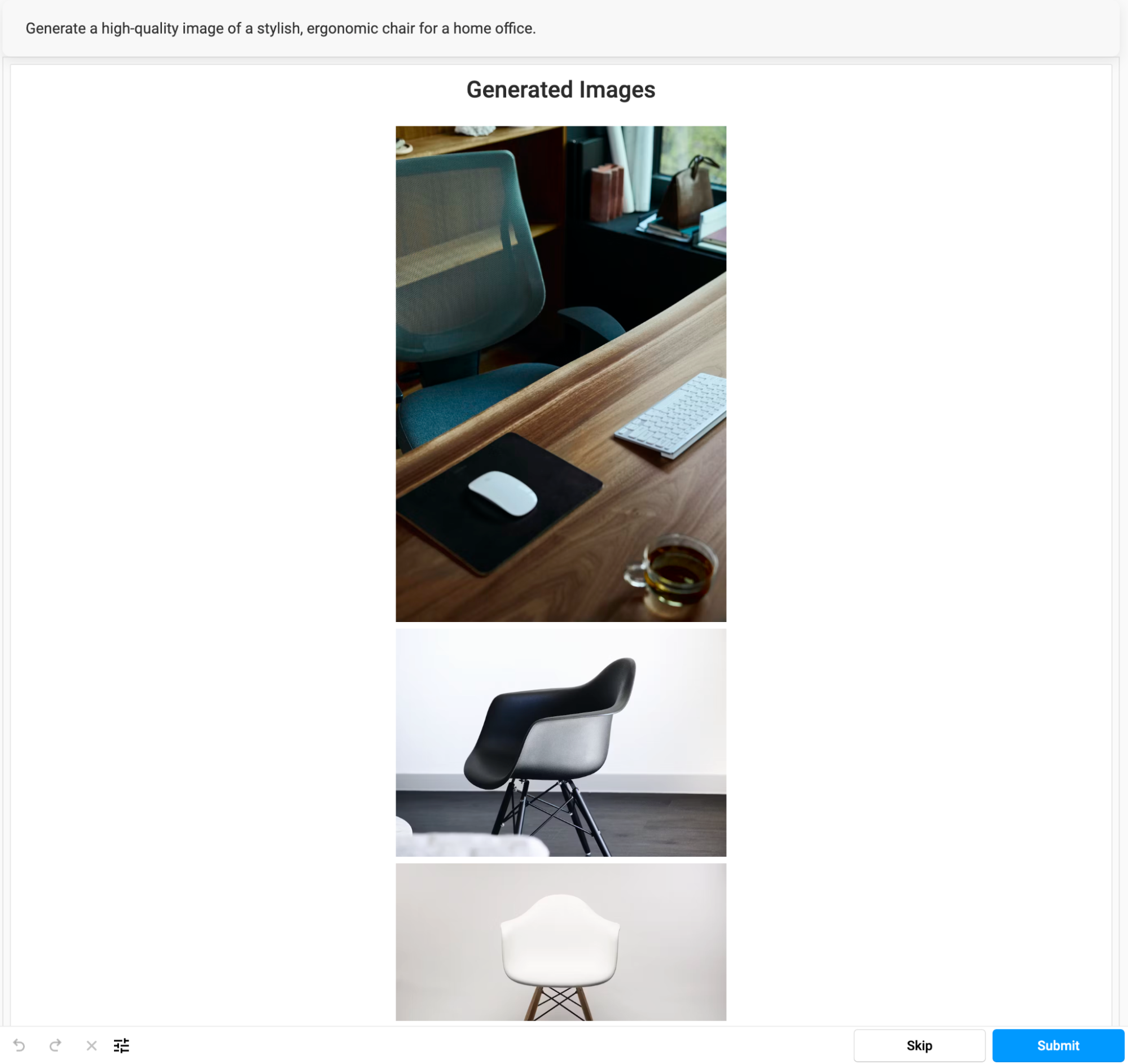

Visual Ranker

Build a workflow to rank the quality of responses from different text-to-image models like Dall-E, Midjourney, and Stable Diffusion, among others. This pre-built template gives you the ability to compare the responses from different generative AI models and rank them with a handy drag-and-drop interface.

The template helps you determine the best image generation model for your needs based on the images it has generated for your prompts. After annotations are completed, you have a better frame of reference for understanding which model may be best for your needs, whether you’re using one right out of the box or planning on further fine-tuning.

Get started with the Visual Ranker template by first preparing your dataset. The Label Studio documentation includes information about how to structure your JSON files for this type of work.

Create a new project in Label Studio, select the “Visual Ranker” template from our Generative AI templates option, and import your data.

Once the data is imported into Label Studio (either through API, cloud storage, or a JSON file upload), annotators can begin annotating the project.

When starting the annotation process, the Visual Ranker template will display a prompt and results from three different image generation models. The annotator will then rank each model’s performance by dragging and dropping items into either relevant results or biased results. These answers can then be evaluated in aggregate to determine the most effective image generation model for your needs.
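If you also want to use the order in which annotators arranged the images, a Borda-style count is one option; this is a general ranking technique, not something the template computes for you. The sketch assumes that the order inside the relevant bucket reflects preference (best first) and that each annotation has already been reduced to an ordered list of model names, which are hypothetical here.

```python
def borda_scores(ordered_relevant, models):
    """Score models by position in each annotator's relevant list.

    With n models, first place earns n points, second n - 1, and so on.
    Models left out of the relevant list earn nothing for that task.
    """
    n = len(models)
    scores = {model: 0 for model in models}
    for ordering in ordered_relevant:
        for position, model in enumerate(ordering):
            scores[model] += n - position
    return scores
```

Higher totals indicate models whose images were placed near the top more often across the annotated prompts.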

Build with your needs in mind

As the world of machine learning evolves, so do we. With new features and opportunities for you to start tailoring foundation models to your needs, or even to help make data more accessible, Label Studio is here to help.

Label Studio’s latest release comes with new tools and features to help you make the most of large language models (LLMs) and other foundation models within your organization.