Introduction to Data Labeling

Introduction to Data Labeling

The increasing growth in the use of AI and ML models (with an expected growth of 32.54% CAGR from 2020 to 2027) has led to a corresponding boom in the requirement for data annotation and data labeling.

But why is this important? Data is the heart of machine learning and artificial intelligence, but data labeling is critical to the success of ML/AI models. If your data isn’t labeled, your model cannot recognize patterns and make predictions, leading to unreliable results. And while AI can now help with automated labeling, the risks of having incorrectly-labeled data corrupt model outcomes are still there.

Consider a scenario where you're building a machine learning model to identify different breeds of dogs based on pictures. You might already have a data set of thousands of dog images, but how do you teach the model to identify each breed accurately? The solution is to label each dog image with its corresponding breed, which helps the model understand the unique characteristics of each breed and differentiate between them precisely.

Furthermore, the accuracy of your data directly impacts the performance of your model. Without proper data labeling, your ML/AI model won't be reliable and the entire process will be a waste of time.

To help you get started with data labeling, we've created this guide that outlines the best practices to ensure you label your data accurately.

Challenges in data labeling

Data labeling involves annotating a lot of data to train machine learning models. It’s not a trivial task, and you therefore must be prepared for the several challenges that come with it.

1. Assembling data from disparate sources

The process of acquiring data can be complex, as it may come in various formats such as images, databases, and text. Each type of data necessitates different labeling techniques, which can be both time-consuming and intricate.

Moreover, the varying quality levels of data sources can adversely affect the accuracy of the entire data set, consequently impacting the resulting model.

To tackle this challenge, a comprehensive plan for data integration is necessary. Such a plan should encompass developing a standardized data format, cleaning and preprocessing the data, and ensuring compatibility across all data sources from start to finish.

2. Ensuring data accuracy over time

Updating the labeling process to reflect changes in data is crucial for maintaining accuracy. However, since data labeling is a manual process, errors and inconsistencies can occur, which can negatively impact the model's performance. Re-labeling data periodically is necessary to maintain accuracy, but this can be a time-consuming and tedious task, especially for organizations dealing with large data sets.

In such cases, ensuring ongoing quality control and monitoring is crucial for maintaining data accuracy. This can involve performing regular spot-checks of labeled data, re-labeling data as needed, and implementing processes to correct repetitive labeling errors. Additionally, implementing a robust data management system to track changes to the data over time can ensure that it remains accurate and relevant.

3. Removing bias from the data labeling process

Bias can occur in many forms, from the selection of data sources to the choice of annotators to the annotation guidelines. You must address the sources of buyers to ensure the resulting model is unbiased and doesn’t perpetuate existing prejudices.

Studies suggest that the diversity in data is a major factor in whether the resulting model is unbiased. For example, if the training data comprises data from a specific demographic, the resulting model may not generalize well to other demographics, leading to biased directions.

It's crucial to note that different types of bias (think: linguistic and cultural bias) can have a negative impact on the model.

For example, an annotator may assume a particular dialect or accent like American-African Vernacular English AAVE sounds ‘negative‘ or ‘uneducated,‘ leading to biased labeling. In this case, you must educate annotators about data nuances and provide them with tools to detect and remove bias from their labeling decisions.

4. Selecting the proper tools

When it comes to data storage and labeling, there are countless tools available, each with unique advantages and limitations. This can make choosing the right tool a complicated task, as you must take various factors into account, such as budget, organizational needs, project supervision, and labeling operations.

To ensure that you make the best decision, it is essential to approach the selection process in a structured manner.

Begin by gaining a clear understanding of the data labeling process requirements. This includes identifying the necessary features, evaluating different tools based on these features, and conducting a pilot study to verify that the selected tool meets all project requirements.

Now that we establish the challenges, let’s discuss what to do instead to make your data labeling process more efficient and accurate.

Identify and understand the data

To build an accurate machine learning model, the first step is to collect the appropriate amount of raw data.

This involves identifying the right data, which forms the foundation of the model. Depending on the algorithm used, the required sample size can vary, with some requiring a small sample set and others needing a larger one. Additionally, algorithms may be designed to work with either categorical or numerical data inputs.

Here are a few of the most common data types you’ll encounter in machine learning:

- Text

- Images

- Audio

- Video

- Time series

- Sensor

But simply having this data isn’t enough — you must also analyze and derive insights from it to make meaningful decisions.

Think of it this way: data set development is the backbone of machine learning, so it’s important to ask the right questions that the data can really answer. And in order to ask the right questions, you need to understand why you need the data and what insights you hope to gain from it.

Moreover, data sources may differ from one company to another. While some organizations have been collecting information internally for years, others may use publicly available data sets. Either way, at this stage, data is inconsistent, corrupted, or simply unsuitable, which is why your data will need to undergo cleaning and preprocessing before any labels are created.

As a rule, you should have the right amount of diverse data as ML models require a large volume of data to provide accurate results. Research recommends having 10 times the number of degrees of freedom in your model. For example, if you have a model with 10 variables, you need a data set with at least 100 observations.

By selecting the right data, you’ll avoid wasting time on irrelevant data or selecting data sets that are too large or too small for your specific needs. So, take time to carefully consider your data sources and the required volume to make your ML project a success.

Build your technology stack

The right technology stack ensures efficiency, accuracy, and data security throughout the data labeling process. Here are some guidelines to guide you through the crucial task of building one for your team:

Choose the right data store for your needs

When choosing technology for your data labeling process, it’s important to let the specific problem guide your choices. Instead of choosing technology first and then trying to apply it to the problem, understand your problem and then select solutions that best fit your requirements.

Different data stores suit different problem domains; you should choose a data store that meets your specific requirements. But, generally speaking, you’ll do well to consider the following factors to guide your data storage selection process:

- Cost-effectiveness

- Scalability (capable of collecting and storing large volumes of data to create ML/AI models)

- Performance with parallel access and optimized latency and throughput

- Availability and durability for no (or lower) downtime

- Public cloud compute with GPU-accelerated virtual instances (this reduces the capital cost of building infrastructure for ML model development while allowing you to scale as needed)

- Wide integration facility to reduce barriers to adoption for ML and AI storage

Analyze and transform the data

Analyzing and transforming your data are necessary steps before labeling. This involves several steps, including:

- Building data pipelines: Build data pipelines that can aggregate data from disparate locations into a single source and automate the data acquisition process. Consider integrating data from various sources, such as databases, data lakes, or APIs, into a centralized location your team can easily access and analyze.

- Anonymizing the data: If you’re dealing with personally identifiable information (PII), you must take the necessary measures to remove or mask sensitive information to protect the privacy of individuals whose data is being analyzed and labeled. This is also helpful to remain compliant with data regulations like the GDPR and CCPA.

This will help you transform your data into features that better represent the underlying problem of the predictive ML models, helping you make informed technology decisions.

Secure access to the data

Limit access to only require stakeholders to protect sensitive information from being disclosed to unauthorized individuals or groups and prevent malicious actors from accessing or tampering with data.

For instance, you can implement access controls, such as user authentication and authorization mechanisms. This involves verifying a user’s identity through a set of credentials (think: biometric data, username, and password), after which they’re authorized to perform specific actions on the data, based on their assigned level of access privileges.

This is particularly helpful when resolving tokenized identifiers.

Tokenization is a technique used to replace sensitive data elements with non-sensitive placeholders with no extrinsic meaning or value. While it's an effective technique to protect sensitive data, it also introduces the potential risk of unauthorized access to the original data, especially when the tokens aren’t secured properly.

Implementing security measures such as user authentication, encryption, and access controls helps boost data security and prevent unauthorized access.

Select a data labeling software

Investing in high-performing data labeling software can help you work smarter instead of harder. With the ability to automate the tedious labeling process, these tools offer an advantage in terms of both efficiency and accuracy. Additionally, they promote collaboration and quality control throughout the data set creation process.

However, with so many options for data storage and labeling tools available, it's important to find the one that best suits your specific needs.

Ensure that your chosen tool meets all of your criteria, and consider seeking out tools that support active learning. This technique involves strategically sampling observations to gain new insights into the problem, ultimately reducing the total amount of data required for labeling.

Note: Learn how to set up active learning with Label Studio.

Define how you’ll label data

Lastly, you'll want to define how your technology stack will label data.

Depending on how much data you want to label, how fast you need to label them, and the resources you can apply to the exercise, you can choose from the following five data labeling approaches.

- Internal, where you use in-house data science experts to label data

- Programmatic, where you learn programming skills to automate the data labeling process, eliminating the need for human annotations

- Synthetic, where you use computing resources to generate new project data from existing data sets

- Outsourcing, where you use and manage freelance ad hoc data professionals to do data labeling (for example, Amazon Mechanical Turk)

- Crowdsourcing involves using micro-tasking solutions to distribute data labeling tasks among a large number of people. This can be integrated into regular activities, such as the Recaptcha project.

…which brings us to the next data labeling foundation.

Build your data labeling team

When deciding how to source and train your data labeling team, in general you have the following two options:

- Insourcing: Requires more human, time, and financial resources, but leads to predictable results in the long term. You also have more control over the data labeling process.

- Outsourcing: More cost-effective, but maintaining consistency can be harder with growing turnover. Annotators may also need to undergo proper training to establish consistency in contextualization and understanding project guidelines.

Consider your existing requirements, and accordingly make a choice — but don't undermine the importance of domain expertise and diversity in effective data labeling.

The importance of subject matter experts in machine learning

Machine learning (ML) is a powerful tool, but it is not a replacement for subject matter expertise. As stated in a Wiley Analytical Science Magazine article, "ML is a tool for experts, not a replacement of experts." This is particularly true in specialized domains that rely heavily on human judgment, such as X-ray filmography.

While ML algorithms can diagnose broken bones from X-rays, they face challenges in accurately identifying variances such as different angles, X-rays from different hospitals, and image anomalies. Human experts, on the other hand, can generalize their learnings from a set of images and extrapolate to changes, resulting in accurate labeling of images.

But subject matter expertise alone is not enough.

To remove bias, you also need a diverse team of annotators. Most training data sets have ambiguous information, and by building a team of diverse annotators who can express a subjective point of view and introduce different perspectives, you can improve the accuracy and fairness of the resulting ML model.

Create a data labeling process

At this stage, you’ve set up a diverse data labeling team and have provided them with the right tooling, but your job is far from done.

You also need to ensure you have an efficient data labeling process in place. While you can check out our guide on the building blocks of the process, there are a few guidelines to keep in mind.

Outline your data labeling process

It is crucial to document the entire process of labeling your data from start to finish, particularly if any team members are outsourcing.

This documentation should clearly define the roles and responsibilities of each team member, as well as the labeling process as a whole, including the necessary tasks and their respective timelines. By doing so, you can ensure that all team members are on the same page and that the labeling process remains organized and efficient.

So, no matter what steps you take moving forward, make sure you're keeping detailed records of the process.

Define your process management methodology

Define a process management methodology that covers all the important aspects of the data labeling process, including planning, execution, monitoring, and quality control. This will ensure the labeling is completed efficiently and on time.

Build a data tagging style guide

Create a style guide for tagging to ensure consistency and uniformity in labeling throughout your data set. This guide should provide clear explanations of what's required, and establish a mission for annotators to work towards, to minimize potential mistakes during the labeling process.

Create a data tagging taxonomy

Data tagging is a valuable categorization technique that can enhance the accuracy and quality of models by preventing duplication and errors. This system involves grouping labels into layers of obstruction, which creates a structured framework for organizing data.

There are two types of data tagging systems to choose from: flat and hierarchical.

The flat data tagging taxonomy is a straightforward list of unlayered tags, and it is ideal for companies with low data volumes or segmented departments.

On the other hand, the hierarchical data tagging taxonomy follows an order of abstraction and is better suited for companies with large data sets. This system allows for more specificity and details when adding new tags, providing a more comprehensive and detailed categorization.

Note: Learn more about the building blocks of an efficient data labeling process.

Leverage initial tagging for model training

Use a small subset of data and manually label it to create the initial set of training data to optimize the tagging process for the specific data set. This is known as initial tagging and is necessary to train the model to ensure it generates reliable results.

Establish ground truth annotations

Define the ground truth annotations for your project. You can either have expert annotators manually label a subset of the data, or use crowd workers.

These annotations are the correct labels for each data point and will serve as the benchmark for the accuracy of the model. By comparing your model’s predictions against them, you can easily measure accuracy and address discrepancies in the labeling process.

Finalize your data tagging process

Clarify how you’ll tag your data going forward. Aim to build a comprehensive system of tags or labels so your team can categorize and organize your data in a way that’s both effective and efficient.

This can help ensure your data labeling process is ongoing and that the resulting model is continuously improving.

Speaking of…

Continuously improve your data labeling operations

The quality of data annotations depends on how precise the tags are for a specific data point, how accurate the coordinate point is for bounding box and keypoint annotations, and whether it’s free from biases. And ensuring this is an ongoing process.

Define metrics for data labeling

The first step towards improving data labeling operations is defining the right metrics to measure success. This helps you understand the quality of the labeled data and identify areas for improvement.

Some data labeling metrics to consider are as follows:

- Inter-annotator agreement (IAA): This measures the level of agreement between different annotators. High agreement levels indicate the labeling process is consistent and accurate.

- Label distribution: This measures the distribution of labels across the data set. If the labels aren’t distributed evenly, it indicates the labeling process is biased.

- Annotator performance: This measures the performance of individual annotators, helping you identify areas of improvement.

Observe metrics to make improvements

Once you’ve defined the metrics, it’s now time to monitor them regularly to optimize your data labeling process to ensure higher quality results.

Observing metrics over time will help you identify trends and areas for improvement. For example, if the IAA metric drops suddenly, you’ll know you should review the process to ensure consistency.

Quality measures

Quality measures are another crucial aspect of continuous process improvement. This includes:

- Consensus tagging is when multiple annotators label the same data point, using a consensus approach to determine the final level. This can be useful for reducing errors in improving the accuracy of the label data.

- Label auditing involves auditing a sample of labeled data to ensure the accuracy and consistency of the tables. If any error is identified, they can be corrected immediately, improving the overall David in process.

- Active learning is an approach where you use machine learning algorithms to select the most informative data points for labeling. This approach not only reduces the amount of data that needs to be labeled but also ensures superior quality results.

- Transfer learning involves retraining a pre-existing model that has been trained for one use case to label similar data sets for another use case. This not only accelerates the labeling process but also improves the accuracy of the labeled data.

By incorporating the above quality measures, among others, data labeling operations can be continuously improved, ultimately leading to superior results in machine learning models.

How Label Studio can help

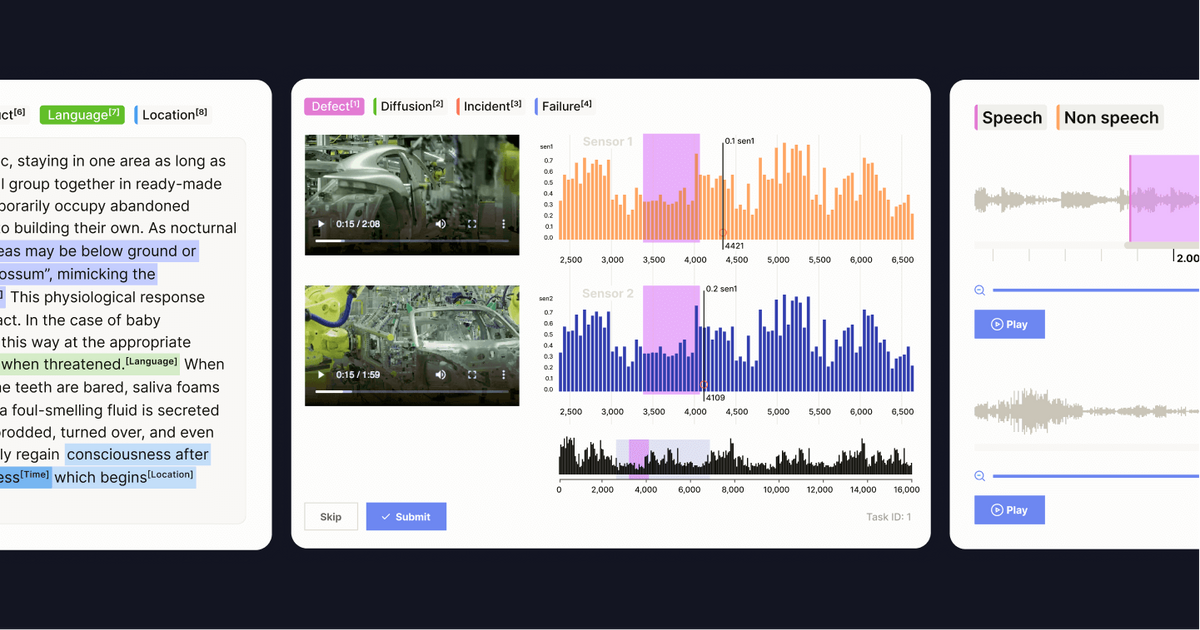

Label Studio is a powerful web application platform that offers the best-in-class data labeling service and exploration of multiple data types.

Simply put, Label Studio makes data labeling easy, efficient, and accessible for almost every possible data type, be it text, image, audio, video, time series, or multi-domain. It allows you to precisely and efficiently label the initial data set based on your specific requirements, and enables human-in-the-loop oversight for models already in production.

Think of it as a more flexible and efficient way to do data labeling that can help you generate more reliable results.

You can integrate a model and use this tool for preliminary labeling on the data set. After that, a human annotator can review and modify the automated labels, verifying the accuracy of the labels and updating inaccurate labels.

The end result? Data sets with higher accuracy can be easily integrated into machine learning applications.

How Label Studio works

Label Studio takes data from various sources like APIs, files, web UI, audio URLs, and HTML markups. It then pipelines the data to a labeling configuration with three sub-processes — task, completion, and prediction — to ensure accurate labeling and optimized data sets.

What's more, the machine learning back end of the platform can be equipped with popular and efficient ML frameworks to create accurate data sets automatically.

If you're looking for a data labeling tool that generates high-level data sets with a precise labeling workflow and offers easy-to-use automation, get started with Label Studio today.