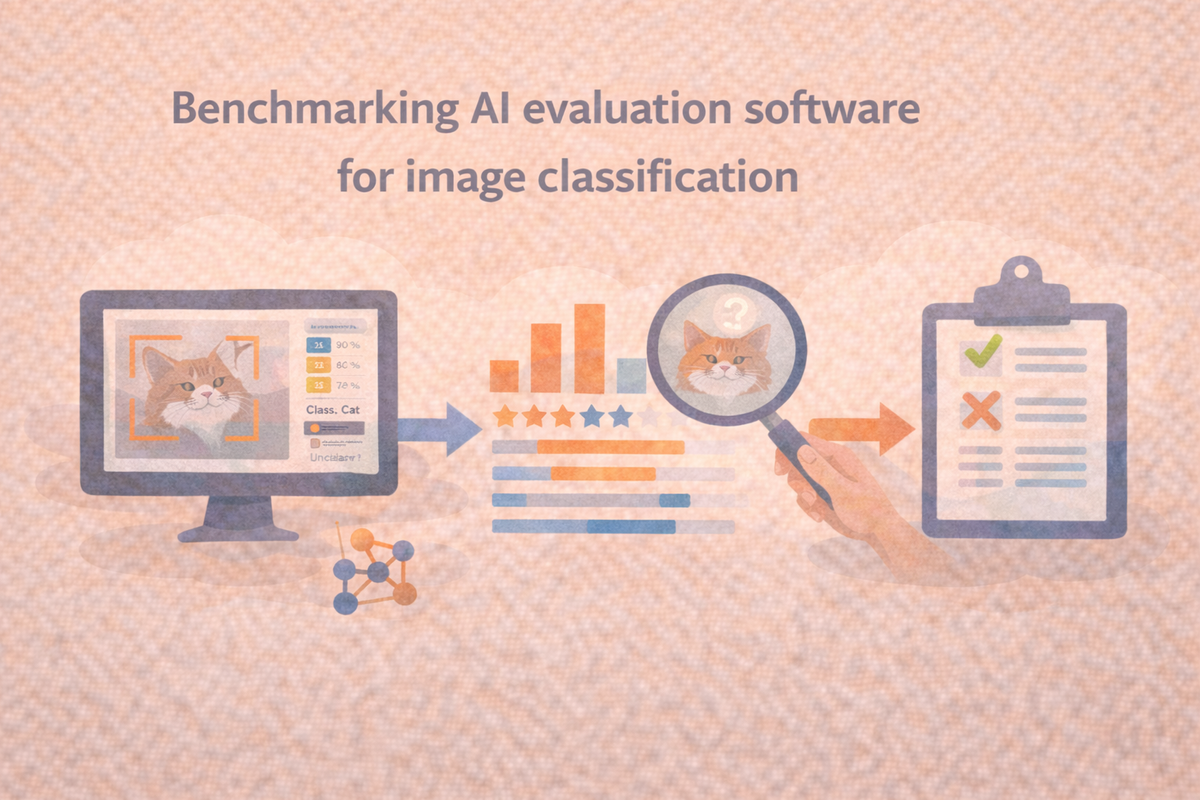

How to benchmark AI evaluation software for image classification

Benchmarking evaluation software sounds meta, but it matters. Two tools can run the same model and dataset and still lead you to different conclusions if they handle splits, preprocessing, thresholds, or reporting differently. A good benchmark proves that the evaluation workflow is repeatable, comparable across runs, and useful for debugging real model failures.

To benchmark AI evaluation software for image classification, test whether it produces consistent results on a fixed dataset, supports the metrics you need, makes preprocessing and thresholds explicit, and helps you diagnose errors beyond a single accuracy number. The best evaluation setup combines repeatable runs, clear reporting, and failure analysis that surfaces what changed and why.

Start with a “known-good” dataset and lock it down

Pick a dataset that matches your task type (single-label, multi-label, or hierarchical). Then freeze the evaluation inputs so you can compare tools fairly.

That means:

- A fixed test split (or multiple fixed splits) with versioned IDs

- A documented label set, including how “unknown” or “other” is handled

- A single, explicit preprocessing recipe (resize/crop/normalization) that you can replicate

If one tool silently applies center-crop and another uses letterboxing, your benchmark turns into a preprocessing comparison instead of an evaluation comparison. Your goal is to control the inputs so the evaluation software is what you are benchmarking.
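One way to make frozen inputs checkable is to hash them into a single fingerprint that every tool's run must report. The sketch below assumes the split is a list of sample IDs and the preprocessing recipe is a plain dict. `eval_fingerprint` is a hypothetical helper, and the recipe values (the commonly used ImageNet normalization constants) are only illustrative.

```python
import hashlib
import json


def eval_fingerprint(sample_ids, label_set, preprocessing):
    """Hash the frozen evaluation inputs into one hex digest (hypothetical helper)."""
    payload = {
        "sample_ids": sorted(sample_ids),  # order-independent: same split, same hash
        "label_set": list(label_set),      # order kept: it defines class indices
        "preprocessing": preprocessing,    # resize/crop/normalization recipe
    }
    # sort_keys and fixed separators make the JSON encoding deterministic
    blob = json.dumps(payload, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hashlib.sha256(blob).hexdigest()


recipe = {
    "resize": 256,
    "crop": {"mode": "center", "size": 224},
    "normalize": {"mean": [0.485, 0.456, 0.406], "std": [0.229, 0.224, 0.225]},
}
ids = ["img_0001", "img_0002", "img_0003"]  # hypothetical versioned test IDs
labels = ["cat", "dog", "other"]            # "other" catches out-of-scope images

fp_center = eval_fingerprint(ids, labels, recipe)
fp_shuffled = eval_fingerprint(list(reversed(ids)), labels, recipe)
fp_letterbox = eval_fingerprint(
    ids, labels, dict(recipe, crop={"mode": "letterbox", "size": 224})
)

print(fp_center == fp_shuffled)   # True: same inputs in a different order
print(fp_center == fp_letterbox)  # False: the preprocessing recipe changed
```

If two tools report different fingerprints for what is supposed to be the same benchmark, you are comparing inputs, not evaluation behavior.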

Define the task clearly: top-1, multi-label, or open-set

Image classification benchmarks fall apart when the task definition is fuzzy. Be explicit about:

- Single-label vs multi-label: multi-label needs per-class thresholds and different metrics

- Class imbalance: overall accuracy can hide poor minority-class performance

- Open-set behavior: do you need “abstain” or “unknown” handling, and how is it scored?
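To make the multi-label and open-set choices concrete, here is a minimal sketch with explicit per-class thresholds and an abstain rule. The threshold values, the `"unknown"` label, and the coverage/selective-accuracy scoring are assumptions chosen for illustration. They are one reasonable convention, not the only one; the point is that whichever convention you pick is written down.

```python
def multilabel_predict(scores, thresholds):
    """Multi-label: a class is on if its score clears that class's own threshold."""
    return {cls for cls, s in scores.items() if s >= thresholds[cls]}


def open_set_predict(probs, abstain_below, unknown="unknown"):
    """Single-label with abstention: return `unknown` when the top probability is too low."""
    best = max(probs, key=probs.get)
    return best if probs[best] >= abstain_below else unknown


def score_open_set(preds, truths, unknown="unknown"):
    """Report coverage and accuracy-on-answered separately instead of hiding abstentions."""
    answered = [(p, t) for p, t in zip(preds, truths) if p != unknown]
    coverage = len(answered) / len(preds)
    selective_acc = sum(p == t for p, t in answered) / len(answered) if answered else 0.0
    return {"coverage": coverage, "selective_accuracy": selective_acc}


thresholds = {"cat": 0.5, "dog": 0.5, "outdoor": 0.3}  # hypothetical per-class thresholds
tags = multilabel_predict({"cat": 0.62, "dog": 0.41, "outdoor": 0.35}, thresholds)
# tags == {"cat", "outdoor"}

p1 = open_set_predict({"cat": 0.45, "dog": 0.40, "bird": 0.15}, abstain_below=0.6)  # "unknown"
p2 = open_set_predict({"cat": 0.80, "dog": 0.15, "bird": 0.05}, abstain_below=0.6)  # "cat"

report = score_open_set(["cat", "unknown", "dog", "cat"], ["cat", "dog", "dog", "dog"])
# coverage 0.75, selective_accuracy 2/3
```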

This is also where you decide what a “correct” prediction means. Some domains accept near-misses (taxonomy parent classes), others do not.
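Writing the correctness rule as code removes the ambiguity. This sketch assumes a simple child-to-parent map (`parent_of`, hypothetical) and, when `accept_parent=True`, accepts only the immediate parent as a near-miss; sibling confusions still count as errors.

```python
def is_correct(pred, truth, parent_of, accept_parent=False):
    """Exact match, or (optionally) the true label's immediate parent class."""
    if pred == truth:
        return True
    return accept_parent and parent_of.get(truth) == pred


parent_of = {"husky": "dog", "beagle": "dog", "tabby": "cat"}  # hypothetical taxonomy
preds = ["husky", "dog", "beagle", "cat"]
truths = ["husky", "husky", "husky", "tabby"]

strict = sum(is_correct(p, t, parent_of) for p, t in zip(preds, truths)) / len(truths)
lenient = sum(
    is_correct(p, t, parent_of, accept_parent=True) for p, t in zip(preds, truths)
) / len(truths)
print(strict, lenient)  # 0.25 0.75
```

Reporting both numbers side by side keeps the scoring rule visible instead of buried in a tool's defaults.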

Compare metrics based on what you need to learn

A strong evaluation tool should support a mix of aggregate performance and diagnostic views. Accuracy alone rarely tells you where the model fails.
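A toy example shows how a single accuracy number can look healthy while one class fails completely. The data is invented for illustration: 95 `normal` images, 5 `defect` images, and a model that always predicts `normal`.

```python
from collections import Counter


def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def per_class_recall(y_true, y_pred):
    """Fraction of each true class that the model got right."""
    support = Counter(y_true)
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {cls: hits[cls] / n for cls, n in support.items()}


y_true = ["normal"] * 95 + ["defect"] * 5
y_pred = ["normal"] * 100  # degenerate model: never flags a defect

acc = accuracy(y_true, y_pred)
recalls = per_class_recall(y_true, y_pred)
macro_recall = sum(recalls.values()) / len(recalls)
print(acc, recalls, macro_recall)  # 0.95 {'normal': 1.0, 'defect': 0.0} 0.5
```

Macro-averaging gives each class equal weight, so the completely missed minority class pulls the score down to 0.5 even though accuracy reads 95%.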

Here’s a metrics map you can use to benchmark whether the software supports what you need:

| Metric / output | Best for | What it can hide |
|---|---|---|
| Top-1 accuracy | Simple single-label tasks | Minority-class failures, brittle edge cases |
| Top-k accuracy | Cases where a ranked shortlist of candidate labels is acceptable | Wrong top-1 choices, overconfidence |
| Precision / recall | Imbalanced classes | Behavior across thresholds if not plotted |
| F1 (macro vs micro) | Comparing balanced vs skewed performance | Which classes drive the score |
| Confusion matrix | Systematic confusion between classes | Multi-label behavior, threshold effects |
| Calibration (ECE, reliability plots) | Whether probabilities can be trusted | Fine-grained error types |
| Per-slice metrics | Behavior across subsets (lighting, device, region) that a global average which looks “fine” can mask | Failures in subsets you did not think to define |

When benchmarking evaluation software, the question is not “does it compute accuracy?” Almost every tool can. The question is whether it makes the right breakdowns easy and repeatable.
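To check a tool's numbers rather than trust them, it helps to have a tiny reference implementation you can run on the same predictions. The sketch below computes a confusion matrix, per-class/macro/micro F1, and expected calibration error (ECE) in plain Python. The ECE binning (10 equal-width, left-closed bins) is an assumption: tools differ in bin count and edge handling, so trace small ECE mismatches to those settings before concluding a tool is wrong. The labels, predictions, and confidences are made up.

```python
def confusion_matrix(y_true, y_pred, labels):
    """Rows are true classes, columns are predicted classes."""
    idx = {c: i for i, c in enumerate(labels)}
    m = [[0] * len(labels) for _ in labels]
    for t, p in zip(y_true, y_pred):
        m[idx[t]][idx[p]] += 1
    return m


def f1_scores(cm):
    """Per-class, macro, and micro F1 from a square confusion matrix."""
    k = len(cm)
    tp = [cm[i][i] for i in range(k)]
    fp = [sum(cm[r][i] for r in range(k)) - tp[i] for i in range(k)]  # column sum minus diagonal
    fn = [sum(cm[i]) - tp[i] for i in range(k)]                        # row sum minus diagonal

    def f1(t, f_pos, f_neg):
        denom = 2 * t + f_pos + f_neg
        return 2 * t / denom if denom else 0.0

    per_class = [f1(tp[i], fp[i], fn[i]) for i in range(k)]
    macro = sum(per_class) / k             # every class weighs the same
    micro = f1(sum(tp), sum(fp), sum(fn))  # every sample weighs the same
    return per_class, macro, micro


def expected_calibration_error(confidences, correct, n_bins=10):
    """Equal-width-bin ECE: sample-weighted mean |accuracy - mean confidence| per bin."""
    bins = [[] for _ in range(n_bins)]
    for c, ok in zip(confidences, correct):
        # bin b covers [b/n_bins, (b+1)/n_bins); a confidence of 1.0 goes in the last bin
        b = min(int(c * n_bins), n_bins - 1)
        bins[b].append((c, ok))
    n = len(confidences)
    ece = 0.0
    for members in bins:
        if members:
            mean_conf = sum(c for c, _ in members) / len(members)
            acc = sum(ok for _, ok in members) / len(members)
            ece += len(members) / n * abs(acc - mean_conf)
    return ece


labels = ["cat", "dog", "bird"]
y_true = ["cat", "cat", "cat", "dog", "dog", "bird"]
y_pred = ["cat", "cat", "dog", "dog", "cat", "bird"]
confidences = [0.95, 0.95, 0.85, 0.65, 0.55, 0.95]  # hypothetical top-1 confidences

cm = confusion_matrix(y_true, y_pred, labels)  # [[2, 1, 0], [1, 1, 0], [0, 0, 1]]
per_class, macro_f1, micro_f1 = f1_scores(cm)  # macro ~0.722, micro ~0.667
correct = [t == p for t, p in zip(y_true, y_pred)]
ece = expected_calibration_error(confidences, correct)  # ~0.317
```

For single-label data, micro-F1 equals accuracy (4/6 here), because every error is both one false positive and one false negative. If a tool reports something different, check how it defines the label set or averaging.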

Benchmark repeatability and comparability across runs

Your evaluation software should make it easy to answer: “Did the model improve, and where?”

Set up a minimal regression test:

- Run evaluation on the same model twice and confirm results match exactly.

- Run evaluation on two model versions and confirm the software can compare runs without manual stitching.

- Change one controlled variable (threshold, preprocessing, label mapping) and confirm the change is captured explicitly in the report.
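The first two checks in the list above can be scripted. This sketch assumes a toy evaluation whose only random step is a bootstrap confidence interval; seeding a local `random.Random` makes two runs identical, and `compare_runs` (a hypothetical helper) diffs two runs' metric dicts without manual stitching. The predictions are invented.

```python
import random


def run_eval(y_true, y_pred, seed=0):
    """Toy evaluation: accuracy plus a rough 95% bootstrap interval (the only random step)."""
    rng = random.Random(seed)  # local, seeded RNG: repeated runs draw the same resamples
    n = len(y_true)
    acc = sum(t == p for t, p in zip(y_true, y_pred)) / n
    boots = []
    for _ in range(200):
        sample = [rng.randrange(n) for _ in range(n)]
        boots.append(sum(y_true[i] == y_pred[i] for i in sample) / n)
    boots.sort()
    return {"accuracy": acc, "ci_low": boots[5], "ci_high": boots[194]}


def compare_runs(baseline, candidate, tol=1e-9):
    """Metric-by-metric diff of two runs; missing metrics are flagged, not skipped."""
    rows = []
    for name in sorted(set(baseline) | set(candidate)):
        a, b = baseline.get(name), candidate.get(name)
        if a is None or b is None:
            rows.append((name, a, b, None, "missing"))
        else:
            delta = b - a
            rows.append((name, a, b, delta, "changed" if abs(delta) > tol else "same"))
    return rows


y_true = ["cat", "dog", "cat", "bird", "dog", "cat", "bird", "dog"]
v1_pred = ["cat", "dog", "dog", "bird", "cat", "cat", "dog", "dog"]   # 5/8 correct
v2_pred = ["cat", "dog", "cat", "bird", "cat", "cat", "bird", "dog"]  # 7/8 correct

run_a = run_eval(y_true, v1_pred, seed=0)
run_b = run_eval(y_true, v1_pred, seed=0)
assert run_a == run_b, "same model, same inputs, same seed should match exactly"

comparison = compare_runs(run_a, run_eval(y_true, v2_pred, seed=0))
for row in comparison:
    print(row)
```

Any randomness in an evaluation (bootstrap resampling, test-time augmentation, subsampling) needs an explicit seed, or the "match exactly" check will fail for reasons unrelated to the model.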

If evaluation artifacts are not versioned, comparisons become subjective. Benchmark whether the software records the full run context: dataset version, model version, config, and metric definitions.
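A run record can be as simple as a serializable structure that travels with the metrics. The fields below mirror the four items listed above; the class name, the version strings, and the `comparable` rule are hypothetical, meant to show the shape of the check rather than any particular tool's format.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class RunRecord:
    """Everything needed to interpret (and reproduce) one evaluation run."""
    dataset_version: str
    model_version: str
    config: dict              # thresholds, preprocessing, label mapping
    metric_definitions: dict  # e.g. how F1 is averaged, how ECE is binned
    metrics: dict = field(default_factory=dict)

    def to_json(self):
        return json.dumps(asdict(self), sort_keys=True, indent=2)


def config_diff(a, b):
    """Config keys whose values differ between two runs: changes a report must surface."""
    keys = sorted(set(a.config) | set(b.config))
    return {k: (a.config.get(k), b.config.get(k))
            for k in keys if a.config.get(k) != b.config.get(k)}


def comparable(a, b):
    """Metrics are only comparable on the same dataset with the same metric definitions."""
    return (a.dataset_version == b.dataset_version
            and a.metric_definitions == b.metric_definitions)


defs = {"f1": "macro", "ece": "10 equal-width bins"}
base = RunRecord("test-split-v3", "model-1.4.0", {"threshold": 0.5, "crop": "center"}, defs)
cand = RunRecord("test-split-v3", "model-1.4.0", {"threshold": 0.6, "crop": "center"}, defs)

print(comparable(base, cand))   # True
print(config_diff(base, cand))  # {'threshold': (0.5, 0.6)}
```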

Test whether it supports error analysis, not just scoring

The practical value of evaluation software is how quickly it helps a team debug failures.

Benchmark whether the tool can:

- Pull representative false positives and false negatives

- Show confidence distributions for correct vs incorrect predictions

- Surface class-level failure patterns that suggest label issues or taxonomy ambiguity

- Track “hard examples” consistently across model versions
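Most of these views reduce to a few filters over per-sample predictions. The sketch assumes each record is a dict with `id`, `true`, `pred`, and `conf` (the confidence of the predicted label); the record layout and helper names are hypothetical, and the sample data is invented.

```python
from statistics import median


def top_errors(records, cls, k=3):
    """Most confident false positives and false negatives for one class."""
    fps = [r for r in records if r["pred"] == cls and r["true"] != cls]
    fns = [r for r in records if r["true"] == cls and r["pred"] != cls]

    def most_confident(rs):
        return sorted(rs, key=lambda r: r["conf"], reverse=True)[:k]

    return most_confident(fps), most_confident(fns)


def confidence_by_outcome(records):
    """Median confidence for correct vs. incorrect predictions."""
    right = [r["conf"] for r in records if r["pred"] == r["true"]]
    wrong = [r["conf"] for r in records if r["pred"] != r["true"]]
    return {"correct": median(right) if right else None,
            "incorrect": median(wrong) if wrong else None}


def hard_examples(runs):
    """IDs misclassified in every run: stable failures worth a closer look."""
    wrong_sets = [{r["id"] for r in run if r["pred"] != r["true"]} for run in runs]
    return set.intersection(*wrong_sets) if wrong_sets else set()


run_a = [
    {"id": "img_01", "true": "cat", "pred": "cat", "conf": 0.97},
    {"id": "img_02", "true": "cat", "pred": "dog", "conf": 0.88},
    {"id": "img_03", "true": "dog", "pred": "cat", "conf": 0.91},
    {"id": "img_04", "true": "dog", "pred": "dog", "conf": 0.74},
    {"id": "img_05", "true": "bird", "pred": "cat", "conf": 0.52},
    {"id": "img_06", "true": "bird", "pred": "bird", "conf": 0.83},
]
run_b = [dict(r) for r in run_a]
run_b[1]["pred"] = "cat"  # img_02 fixed in the next model version
run_b[5]["pred"] = "cat"  # img_06 regressed

fps, fns = top_errors(run_a, "cat")
print([r["id"] for r in fps], [r["id"] for r in fns])  # ['img_03', 'img_05'] ['img_02']
print(confidence_by_outcome(run_a))                    # {'correct': 0.83, 'incorrect': 0.88}
print(sorted(hard_examples([run_a, run_b])))           # ['img_03', 'img_05']
```

In this invented sample, the median confidence of wrong predictions is higher than that of correct ones, the kind of signal a calibration check should then confirm.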

A useful benchmark is time-to-insight: how long it takes a reviewer to identify the top three failure modes after looking at the evaluation output.

Include robustness, drift, and data quality checks

Real classification performance shifts when data changes. Even if your primary benchmark is offline, evaluation software should help you test robustness.

Good robustness checks include:

- Performance by image quality (blur, compression, low light)

- Performance by source (camera type, geography, domain segment)

- Stress tests (occlusion, crop sensitivity, background changes)

Even simple slices reveal whether your evaluation workflow can catch regressions before deployment.
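A per-slice comparison is a short group-by. The sketch assumes each record carries a `meta` dict with slice attributes (here a hypothetical `lighting` field) and flags any slice whose accuracy drops by more than `max_drop`; the 0.05 tolerance and the toy records are assumptions. In the invented data, overall accuracy is identical for both versions while the low-light slice regresses.

```python
from collections import defaultdict


def slice_accuracy(records, slice_key):
    """Accuracy per value of one metadata attribute."""
    totals, hits = defaultdict(int), defaultdict(int)
    for r in records:
        s = r["meta"][slice_key]
        totals[s] += 1
        hits[s] += r["true"] == r["pred"]
    return {s: hits[s] / totals[s] for s in totals}


def slice_regressions(base, cand, max_drop=0.05):
    """Slices where the candidate's accuracy fell by more than max_drop."""
    return {s: (base[s], cand[s]) for s in base if s in cand and base[s] - cand[s] > max_drop}


def toy_records(spec):
    """Invented records: spec maps slice value -> (n_total, n_correct)."""
    out = []
    for light, (total, correct) in spec.items():
        for i in range(total):
            pred = "cat" if i < correct else "dog"
            out.append({"true": "cat", "pred": pred, "meta": {"lighting": light}})
    return out


def overall(records):
    return sum(r["true"] == r["pred"] for r in records) / len(records)


base = toy_records({"day": (6, 5), "low_light": (4, 3)})
cand = toy_records({"day": (6, 6), "low_light": (4, 2)})

print(overall(base), overall(cand))  # 0.8 0.8
regs = slice_regressions(slice_accuracy(base, "lighting"), slice_accuracy(cand, "lighting"))
print(regs)  # {'low_light': (0.75, 0.5)}
```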

A practical benchmarking checklist

Use this table as your scoring rubric when comparing evaluation software:

| Category | What “good” looks like |
|---|---|
| Reproducibility | Same inputs produce identical outputs; configs are saved |
| Transparency | Preprocessing, thresholds, and label mapping are explicit |
| Metric coverage | Supports task-appropriate metrics and per-class views |
| Comparisons | Run-to-run comparisons are built in and traceable |
| Debuggability | Fast access to error examples and failure patterns |
| Slice analysis | Easy to define subsets and compare slice performance |
| Governance | Exportable reports, audit trail, and versioning of artifacts |
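To turn the rubric into a single comparable number, one option is a weighted average of per-category scores. The 0 (missing) / 1 (partial) / 2 (good) scale, the weights, and the example scores below are assumptions; only the category names come from the table. Keep the per-category scores alongside the total, because a high average can hide a missing capability.

```python
RUBRIC = [
    "Reproducibility", "Transparency", "Metric coverage", "Comparisons",
    "Debuggability", "Slice analysis", "Governance",
]


def rubric_score(scores, weights=None, max_points=2):
    """Weighted score in [0, 1]; scores map each category to 0, 1, or 2 points."""
    weights = weights or {c: 1.0 for c in RUBRIC}
    missing = [c for c in RUBRIC if c not in scores]
    if missing:
        raise ValueError(f"unscored categories: {missing}")
    total_weight = sum(weights[c] for c in RUBRIC)
    return sum(weights[c] * scores[c] / max_points for c in RUBRIC) / total_weight


tool_a = {c: 2 for c in RUBRIC}
tool_a.update({"Slice analysis": 1, "Governance": 0})  # hypothetical scores

equal = rubric_score(tool_a)  # 5.5 / 7, about 0.786
repro_heavy = rubric_score(
    tool_a, {**{c: 1.0 for c in RUBRIC}, "Reproducibility": 3.0}
)  # 7.5 / 9, about 0.833
```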

Frequently Asked Questions

What is the most common mistake when benchmarking evaluation software?

Letting tools use different preprocessing, splits, or label mappings. Lock down inputs first, then compare evaluation behavior.

Should I benchmark on a public dataset or my own data?

Start with a public dataset to validate repeatability and metric correctness, then benchmark on a representative internal set to test real-world slices and edge cases.

How do I handle class imbalance in benchmarking?

Use macro-averaged metrics and per-class breakdowns, then validate that the evaluation software makes minority-class failures easy to spot.