Adala 0.0.3 Release: More ways to capture feedback, parallel skills execution, and more!

Adala 0.0.3 introduces key updates enhancing AI agent feedback and control. Notable features include the extension of feedback collection to prediction methods and new console and web environments for improved feedback integration.

Explore the latest Adala release on GitHub.

We've decoupled Runtimes from Skills, allowing for more flexible skill definitions and controlled outputs. This update also refines basic skill classes into three core classes: TransformSkill, AnalysisSkill, and SynthesisSkill, enabling better composition and class inheritance. Additionally, ParallelSkillSet allows for concurrent skill execution, increasing efficiency.

The update also improves skills connectivity with multi-input capabilities and introduces a new input/output/instruction format for greater clarity and precision in skill deployment.

Environments

We’ve extended the points at which feedback can be collected back into the agent through an environment. Previously this was only possible during the ‘learn’ step; now the agent can also request validation while running the prediction method. We also introduced new integrations through which feedback can be collected:

- Console Environment - users can provide feedback via a console terminal or a Jupyter notebook.

- Web Environment - connects to a third-party API to retrieve user feedback. This is a wrapper that supports webhook-based communication for services like Discord and Twilio.

  - The feedback protocol follows this policy:

    - Request feedback: POST /request-feedback

    - Retrieve feedback: GET /feedback

  - An example web server implementation uses a Discord app to request human feedback.

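To make the request/retrieve cycle concrete, here is a minimal in-memory sketch of the two endpoints. It is not Adala's web server: the `FeedbackServer` class, its `handle` and `review` methods, and the payload shapes are all assumptions made for illustration; only the two routes come from the protocol described above.

```python
from dataclasses import dataclass, field


@dataclass
class FeedbackServer:
    """Hypothetical in-memory stand-in for a server exposing the two routes."""
    pending: list = field(default_factory=list)    # predictions awaiting review
    collected: list = field(default_factory=list)  # feedback already given

    def handle(self, method, path, payload=None):
        # POST /request-feedback: the agent submits predictions for review.
        # The {"predictions": [...]} payload shape is an assumption.
        if (method, path) == ("POST", "/request-feedback"):
            self.pending.extend(payload["predictions"])
            return 200, {"queued": len(payload["predictions"])}
        # GET /feedback: the agent retrieves whatever feedback has arrived.
        if (method, path) == ("GET", "/feedback"):
            return 200, {"feedback": list(self.collected)}
        return 404, {"error": "unknown route"}

    def review(self, verdict):
        """Simulate a human (e.g. in a chat app) answering the oldest request."""
        prediction = self.pending.pop(0)
        self.collected.append({"prediction": prediction, "feedback": verdict})


server = FeedbackServer()
post_status, post_body = server.handle(
    "POST",
    "/request-feedback",
    {"predictions": [{"text": "Great phone", "label": "Positive"}]},
)
server.review("Accept")
get_status, get_body = server.handle("GET", "/feedback")
```

In a real deployment the `review` step would be replaced by a webhook callback from the messaging service, and the agent would poll `GET /feedback` until an answer is available.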

Changes to the collection of user feedback

Adala's latest update enhances its ability to interpret more subtle user feedback. In the previous version, Adala v0.0.2, users needed to provide explicit ground truth labels or texts as feedback. For instance, if the agent predicted "Positive," the user had to respond with the correct label, like "Negative," to indicate a mistake. However, in the new version, Adala v0.0.3, users have the flexibility to give feedback in various forms, such as simple affirmations or rejections (Yes/No, Accept/Reject) or even by guiding the agent with reasoning, without needing to state the explicit ground truth label. For example, if the agent predicts "Positive," a user could respond with "Reject" or explain why the prediction is incorrect.
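The difference between the two feedback styles can be sketched as a small normalization step. This is an illustrative assumption about how such feedback might be interpreted, not Adala's internal logic; the function name `interpret_feedback` and the returned tuple layout are hypothetical.

```python
def interpret_feedback(prediction, feedback, labels):
    """Return (is_correct, corrected_label, reasoning) for one feedback message.

    is_correct is None when the feedback is free-form reasoning and the
    verdict has to be inferred later (for example by an LLM).
    """
    text = feedback.strip()
    lowered = text.lower()
    # Simple affirmations confirm the prediction as-is.
    if lowered in {"yes", "accept"}:
        return True, prediction, None
    # Simple rejections flag a mistake without naming the right label.
    if lowered in {"no", "reject"}:
        return False, None, None
    # An explicit ground truth label (the only style accepted in v0.0.2).
    for label in labels:
        if lowered == label.lower():
            return label == prediction, label, None
    # Anything else is kept as reasoning that can guide the agent.
    return None, None, text


labels = ["Positive", "Negative"]
accepted = interpret_feedback("Positive", "Yes", labels)
rejected = interpret_feedback("Positive", "Reject", labels)
explicit = interpret_feedback("Positive", "Negative", labels)
reasoned = interpret_feedback("Positive", "The review is sarcastic", labels)
```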

Runtimes

Runtimes are now decoupled from the Skills' constrained-generation schema. This lets skills be defined with generic template syntax, while runtimes provide controllable output through native methods or through frameworks like Guidance. We’ve also expanded the set of supported runtimes. Currently supported are:

- OpenAI

- Guidance

- LangChain (new!)
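The idea of decoupling can be illustrated with a toy sketch in which a skill holds only a template and any object with a `generate` method can serve as the runtime. None of these names are Adala's API: `Runtime`, `UppercaseRuntime`, `ReverseRuntime`, and `Skill` are hypothetical, and the toy runtimes stand in for real LLM backends.

```python
from typing import Protocol


class Runtime(Protocol):
    """Anything that can turn a rendered prompt into text."""
    def generate(self, prompt: str) -> str: ...


class UppercaseRuntime:
    """Toy backend standing in for one LLM provider."""
    def generate(self, prompt: str) -> str:
        return prompt.upper()


class ReverseRuntime:
    """A second toy backend, to show the skill does not care which one runs it."""
    def generate(self, prompt: str) -> str:
        return prompt[::-1]


class Skill:
    """Holds only the template; knows nothing about how text is generated."""
    def __init__(self, input_template: str):
        self.input_template = input_template

    def apply(self, record: dict, runtime: Runtime) -> str:
        # Render the template from the record, then delegate to the runtime.
        return runtime.generate(self.input_template.format(**record))


skill = Skill("Echo {text}")
upper = skill.apply({"text": "hi"}, UppercaseRuntime())
reverse = skill.apply({"text": "hi"}, ReverseRuntime())
```

The same `Skill` instance runs unchanged against either backend, which is the property the decoupling is meant to provide.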

Skills

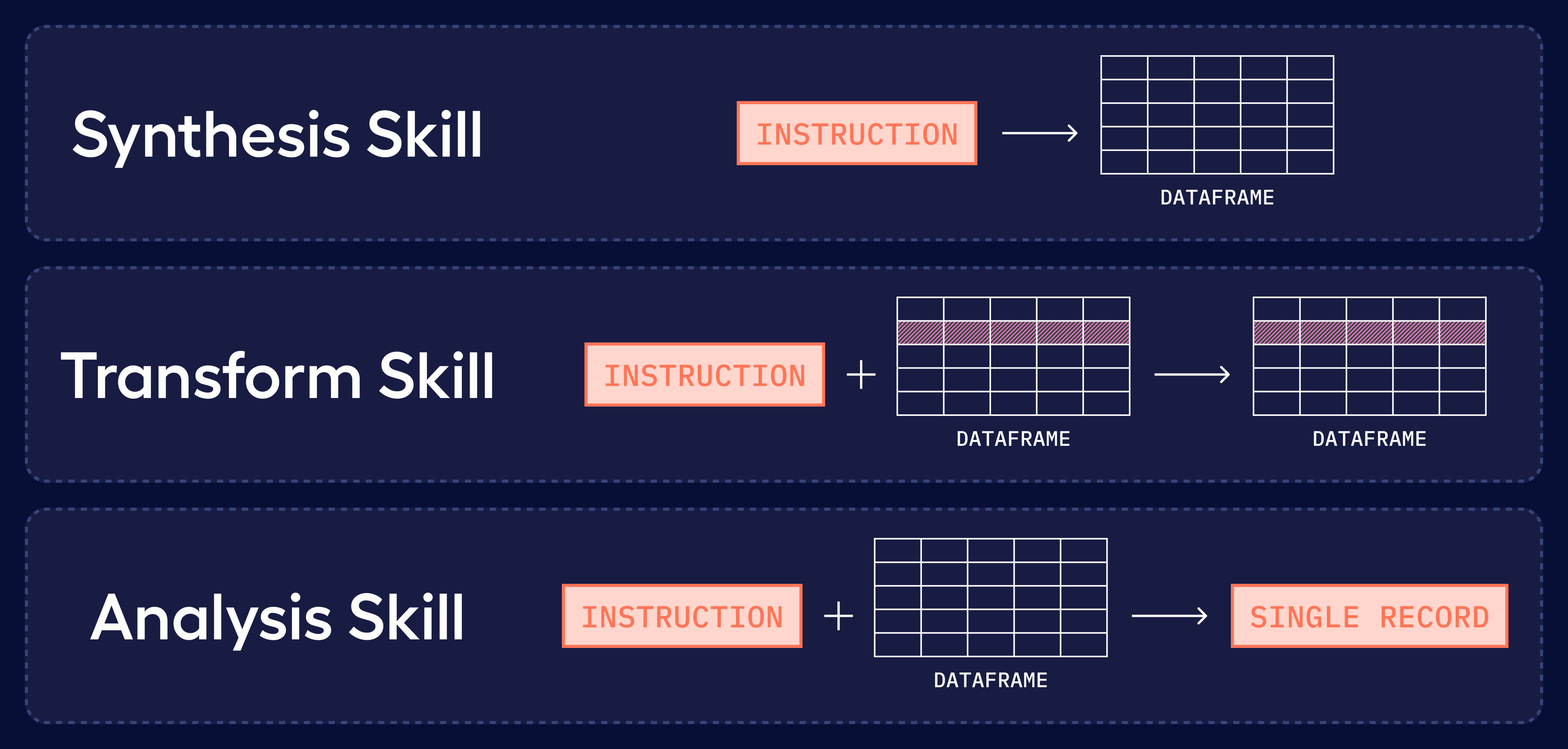

Skills have been refactored into three core classes that together cover the different ways data processing can happen:

- TransformSkill - processes a dataframe record by record and returns another dataframe. Typical use cases: automated data labeling or summarization for structured datasets.

- AnalysisSkill - compresses a dataframe into a single record. Use cases: data analysis, dataset summarization, prompt fine-tuning.

- SynthesisSkill - creates a new dataframe from a single record. For example, synthesizing a dataset from a prompt.
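The three data shapes can be sketched with plain Python lists of dicts standing in for dataframes. This is only an illustration of the many-to-many, many-to-one, and one-to-many patterns; the helper names `transform`, `analyze`, and `synthesize` are hypothetical and do not mirror the Adala classes' signatures.

```python
def transform(records, fn):
    """Many-to-many: apply fn to each record and keep the original fields."""
    return [{**record, **fn(record)} for record in records]


def analyze(records, fn):
    """Many-to-one: compress all records into a single record."""
    return fn(records)


def synthesize(record, fn):
    """One-to-many: expand a single record into a new list of records."""
    return list(fn(record))


reviews = [{"text": "Love it"}, {"text": "Hate it"}]

# Transform: label each review (a keyword rule stands in for an LLM call).
labeled = transform(
    reviews,
    lambda r: {"label": "Positive" if "love" in r["text"].lower() else "Negative"},
)

# Analysis: summarize the labeled dataset into one record.
summary = analyze(
    labeled,
    lambda rs: {"positive_share": sum(r["label"] == "Positive" for r in rs) / len(rs)},
)

# Synthesis: generate new records from a single prompt record.
generated = synthesize(
    {"prompt": "review", "n": 2},
    lambda r: ({"text": f"{r['prompt']} #{i}"} for i in range(1, r["n"] + 1)),
)
```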

Combining these skills with LinearSkillSet or ParallelSkillSet (new! from external contribution) enables complex agent workflows.

- ParallelSkillSet - combines multiple agent skills to perform data processing in parallel. For example, you can classify texts in a multi-label setup, or perform self-consistency checks to improve the quality of LLM-based chatbots.
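As a rough sketch of the parallel pattern, the snippet below runs several independent skills against the same record with a thread pool and merges their outputs, as in a multi-label classification setup. It uses only the Python standard library; `run_parallel` and the two toy skills are hypothetical and say nothing about how ParallelSkillSet is implemented.

```python
from concurrent.futures import ThreadPoolExecutor


def run_parallel(skills, record):
    """Apply every skill to the same record concurrently and merge the outputs."""
    with ThreadPoolExecutor(max_workers=len(skills)) as pool:
        # Submit all skills first so they can run at the same time.
        futures = {name: pool.submit(fn, record) for name, fn in skills.items()}
        # Collect each result under the skill's name, next to the input fields.
        return {**record, **{name: future.result() for name, future in futures.items()}}


# Each toy skill produces one independent label for the same text.
skills = {
    "is_question": lambda r: r["text"].endswith("?"),
    "mentions_price": lambda r: "price" in r["text"].lower(),
}

result = run_parallel(skills, {"text": "What is the price?"})
```

Threads suit this case because real skills would spend most of their time waiting on network calls to an LLM provider.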

Skills connectivity

Skills can now interconnect through multiple inputs. Multi-input skills let agents take several data columns as input, so inference can use additional control parameters (e.g. target language, style, or any other data).

For example, it is possible to include two data columns:

    TransformSkill(input_template="Translate {text} into {language}", ...)

Skills format

The input, output, and instruction templates now support simple f-string specifications and a JSON Schema-defined format for variables. This approach decouples

For example:

    TransformSkill(
        instructions="Translate text and classify it",
        output_template="Translation: {translation}, classification: {classes}",
        field_schema={
            "classes": {
                "type": "array",
                "items": {
                    "type": "string",
                    "enum": ["Positive", "Negative"]
    ...
    )
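To show how the two halves of the format fit together, the sketch below renders templates with `str.format` and checks the `classes` field against its enum. It is an illustration under assumptions: the schema is closed off with only the `classes` field (the original elides the rest), and `validate_fields` is a hand-rolled, hypothetical check for this one case, not a full JSON Schema validator and not part of Adala.

```python
# Assumed complete form of the schema; the release notes elide the remainder.
field_schema = {
    "classes": {
        "type": "array",
        "items": {"type": "string", "enum": ["Positive", "Negative"]},
    }
}


def validate_fields(values, schema):
    """Check array-of-enum fields only; returns a list of error messages."""
    errors = []
    for name, spec in schema.items():
        value = values.get(name)
        if spec.get("type") == "array":
            if not isinstance(value, list):
                errors.append(f"{name}: expected a list")
                continue
            allowed = spec.get("items", {}).get("enum")
            for item in value:
                if allowed is not None and item not in allowed:
                    errors.append(f"{name}: {item!r} not in {allowed}")
    return errors


# Input side: two data columns fill one template.
input_template = "Translate {text} into {language}"
prompt = input_template.format(text="Hello", language="French")

# Output side: named variables are rendered into the output template.
output_template = "Translation: {translation}, classification: {classes}"
values = {"translation": "Bonjour", "classes": ["Positive"]}
rendered = output_template.format(**values)

ok_errors = validate_fields(values, field_schema)
bad_errors = validate_fields({"classes": ["Neutral"]}, field_schema)
```

Constraining `classes` to an enum is what lets a runtime reject or retry an output such as "Neutral" instead of passing it downstream.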