Evaluating Mistral OCR with Label Studio

Organizations rely on an overwhelming volume of documents: reports, scanned contracts, academic papers, and more. Unlocking the value in these materials requires more than extracting text. It demands a model that understands the full structure of a document, including images, tables, and equations.

Mistral OCR is a new Optical Character Recognition (OCR) API built for the complexity of real-world documents. Unlike basic OCR tools, Mistral OCR extracts text, images, tables, and even equations while preserving layout and structure.

For AI teams working with large-scale document processing, evaluating OCR models is essential. That's where Label Studio comes in. High-quality annotation provides the ground truth needed to measure OCR performance and to check that extracted text and document structure hold up on real-world use cases.

Why Evaluate Mistral OCR?

OCR technology is only as good as its accuracy across diverse document types. Mistral OCR not only processes text but also retains formatting, understands multilingual inputs, and extracts structured data. Evaluating its output helps teams verify:

- How well text is recognized across different fonts, languages, and layouts

- Whether tables, equations, and images are captured accurately

- The model’s ability to maintain logical flow in extracted content

Using Label Studio, you can annotate documents and compare OCR results against ground truth data. This shows you where the output needs correction for your specific needs.
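Comparing OCR output to annotated ground truth usually starts with a text-level metric. The block below is a minimal sketch of one common choice, character error rate (CER). CER is the Levenshtein edit distance between the reference and the OCR text, divided by the reference length. Everything here is an assumption rather than part of Label Studio or Mistral OCR:

- It assumes you have exported the corrected transcription and the raw OCR output as plain strings.
- The whitespace normalization is an illustrative choice.
- Scoring an empty reference as 1.0 is an illustrative choice.

```python
def normalize(text: str) -> str:
    # Collapse runs of spaces and newlines so layout-only differences are not counted as errors.
    return " ".join(text.split())


def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, and substitutions turning a into b."""
    prev = list(range(len(b) + 1))  # distances from the empty prefix of a
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete ca
                            curr[j - 1] + 1,      # insert cb
                            prev[j - 1] + cost))  # substitute (or match)
        prev = curr
    return prev[-1]


def character_error_rate(reference: str, hypothesis: str, normalize_ws: bool = True) -> float:
    """Edit distance divided by reference length (assumed convention: empty reference -> 0.0 or 1.0)."""
    if normalize_ws:
        reference, hypothesis = normalize(reference), normalize(hypothesis)
    if not reference:
        return 0.0 if not hypothesis else 1.0
    return levenshtein(reference, hypothesis) / len(reference)


# Hypothetical example: the OCR misread "l" as "1", one substitution over 21 reference characters.
print(character_error_rate("Invoice total: $1,250", "Invoice tota1: $1,250"))
```

CER catches character-level misreads. It says nothing about whether tables, equations, or reading order were preserved, so those still call for the structural review that annotation provides.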

Try It Yourself: Mistral OCR + Label Studio

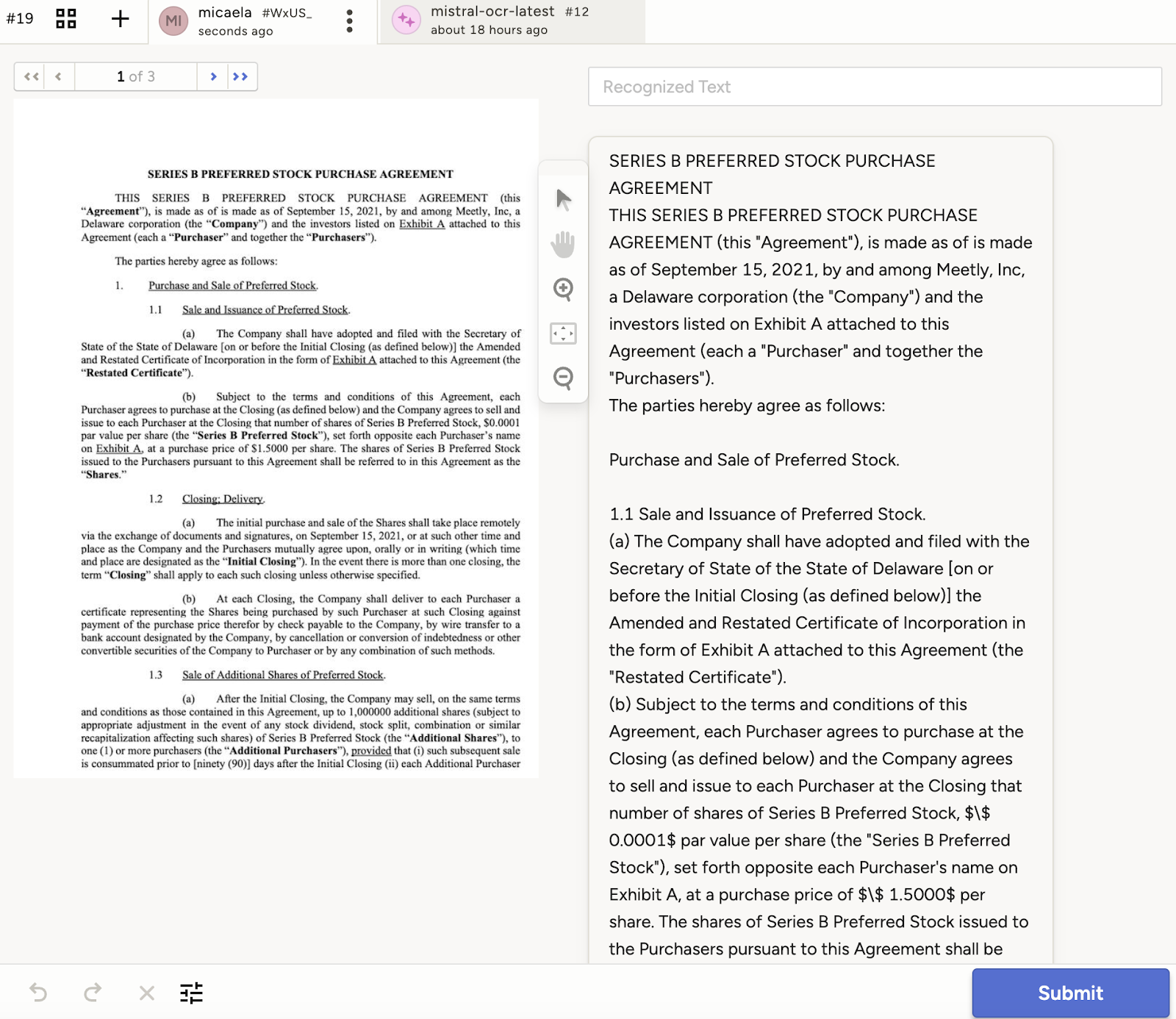

To help teams get started, we’ve prepared a sample task in Label Studio that demonstrates Mistral OCR’s capabilities. With this setup, you can:

- Upload the images that make up your document (learn more about this in our documentation for Multi-page Document Annotation!)

- Automatically extract text and document structure using Mistral OCR

- Compare extracted content to original documents

- Refine outputs through human-in-the-loop annotation
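The workflow above can be sketched as packaging each document into a Label Studio task with the OCR text attached as a prediction. Annotators then start from the model output and correct it. This is a minimal sketch under several assumptions:

- You already have one OCR transcription per page, for example the Markdown text Mistral OCR produces for each page.
- You have reachable image URLs for the pages.
- The helper `build_label_studio_task` is hypothetical.
- The data key `pages` and the tag names `transcription` (a TextArea) and `pages` (an Image) are hypothetical. They must match the names in your own labeling config.

```python
import json


def build_label_studio_task(image_urls: list[str], page_texts: list[str],
                            model_version: str = "mistral-ocr") -> dict:
    """Hypothetical helper: one multi-page document -> one Label Studio task with an OCR prediction."""
    if len(image_urls) != len(page_texts):
        raise ValueError("expected one OCR transcription per page image")
    return {
        # Hypothetical data key; must match the variable referenced in your labeling config.
        "data": {"pages": image_urls},
        "predictions": [
            {
                "model_version": model_version,
                "result": [
                    {
                        "from_name": "transcription",  # hypothetical TextArea tag name
                        "to_name": "pages",            # hypothetical Image tag name
                        "type": "textarea",
                        # One text entry per page, in page order.
                        "value": {"text": page_texts},
                    }
                ],
            }
        ],
    }


# Hypothetical inputs standing in for real page images and OCR output.
tasks = [
    build_label_studio_task(
        ["https://example.com/doc1/page1.png", "https://example.com/doc1/page2.png"],
        ["# Quarterly Report\n\nRevenue grew...", "| Region | Sales |\n|---|---|\n| EU | 120 |"],
    )
]
print(json.dumps(tasks, indent=2))  # JSON list suitable for saving to a file and importing
```

Keeping the OCR text in `predictions` rather than `annotations` leaves the model output separate from the human-corrected version. That separation is what you later compare against when measuring accuracy.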

Mistral OCR is already powering large-scale document understanding, and with Label Studio, you can assess its performance on your own data. Whether you’re working with legal documents, academic papers, or complex technical reports, this combination helps ensure that your OCR pipeline delivers reliable results.

Get Started

The sample notebook is available here so you can test Mistral OCR with Label Studio today. Let us know what you discover!