Generate predictions & evaluate models using AI workflows—Label Studio 1.12.0 Release

Considering the huge productivity wins AI and ML are bringing to virtually every industry, it's a bit ironic that the data teams creating these new models haven't had an easy path to automate their own workflows. If our job is to build automation, why aren't our tools, well, more automated?

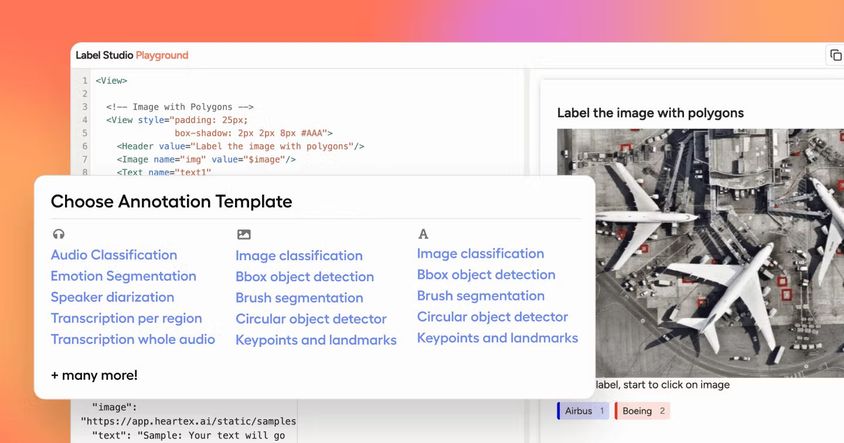

It's not that there hasn't been any interest or activity in automating data discovery and annotation workflows. There are many services that automate image annotation and classify data, but they have limitations: no support for multimodal data, no ability to select your own models, and UIs that offer very little room for customization.

This is why we're really excited to introduce powerful new workflow automations that are as customizable and adaptive as you'd expect from Label Studio. The latest release, 1.12.0, is available today. To deliver something built to the demanding specifications of professional data teams, we set four key requirements for AI integrations:

- The ability to automate labeling with *any* model: a commercial LLM API like GPT-4 or Claude, popular open-source models and frameworks from Hugging Face, LangChain, Segment Anything, GroundingDINO, YOLO, or even your own custom model.

- Developer tooling that makes it easy to connect a running model to Label Studio and evaluate it through a simple integration interface (while still letting you customize the integration in code).

- Automation workflows that naturally extend Label Studio and feel both instantly familiar and instantly productive to annotators.

- Automation that harnesses the full power of Label Studio's multimodal support and rich support for customizing the UI. Use computer vision, OCR, LLMs, chatbots, agents, and traditional NLP inside workflows tailor-built to your team’s unique requirements.

What’s possible with automation and Label Studio

Below we’ll discuss how to connect your selected model to Label Studio, but let's first focus on the productivity gains from augmenting Label Studio with a Generative AI or ML model. With a connected model running, your team can:

Automate labeling predictions across any dataset.

AI models can greatly accelerate the complex and time-consuming tasks that slow annotators down:

- Automatically identify objects contained in images and create complex regions—no need to trace by hand

- Generate answers for a dataset of questions using context provided by your own data and documents (RAG). Annotators can then review, edit, and approve the predicted answers.

- Generate human-friendly summary paragraphs from large collections of data points

- Automatically identify key moments and speakers to predict annotations on an audio timeline

This works much like loading a set of existing predictions into Label Studio, except that here each labeling task is sent to the model as context, and the predictions your model returns are applied directly in Label Studio. Annotators can then review and accept predictions rather than tediously entering their own.
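Because a connected model returns predictions in the same JSON shape used for imported predictions, it helps to see that shape concretely. A minimal sketch, assuming a labeling config whose RectangleLabels tag is named `label` and whose Image tag is named `image` (both names are assumptions; use the ones from your own config). Label Studio stores rectangle coordinates as percentages (0 to 100) of the image size, so a pixel-space box from a detector has to be converted first.

```python
from typing import Any, Dict, List


def bbox_to_rectangle_result(
    x_px: float, y_px: float, w_px: float, h_px: float,
    img_width: int, img_height: int, label: str,
    from_name: str = "label", to_name: str = "image",
) -> Dict[str, Any]:
    """Convert a pixel-space box (top-left corner + size) into a rectanglelabels result."""
    return {
        "from_name": from_name,  # must match the RectangleLabels tag name (assumed "label")
        "to_name": to_name,      # must match the Image tag name (assumed "image")
        "type": "rectanglelabels",
        "original_width": img_width,
        "original_height": img_height,
        "value": {
            # Percent of image width/height, measured from the top-left corner.
            "x": 100.0 * x_px / img_width,
            "y": 100.0 * y_px / img_height,
            "width": 100.0 * w_px / img_width,
            "height": 100.0 * h_px / img_height,
            "rotation": 0,
            "rectanglelabels": [label],
        },
    }


def make_prediction(results: List[Dict[str, Any]], score: float, model_version: str) -> Dict[str, Any]:
    """Wrap region results in the prediction envelope attached to a task."""
    return {"model_version": model_version, "score": score, "result": results}


pred = make_prediction(
    [bbox_to_rectangle_result(64, 48, 320, 240, img_width=640, img_height=480, label="Car")],
    score=0.87,
    model_version="detector-v1",
)
print(pred["result"][0]["value"])  # x=10.0, y=10.0, width=50.0, height=50.0
```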

Speed up labor-intensive data labeling with AI assistance and magic tools.

For example, an image annotation task may require tediously drawing complex regions around objects click by click and then manually typing the text in each region as a label, which can take several minutes per image. Now you can use a model like Segment Anything to trace objects in images automatically and an OCR model to pre-fill text predictions, reducing labeling time from minutes to seconds.
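For the OCR case, a common pattern (used, for example, in OCR templates that pair a Rectangle tag with a per-region TextArea) is to emit two results per detected word that share the same `id`, so the transcription attaches to its box. A sketch under those assumptions; the tag names `bbox`, `transcription`, and `image`, and the `(text, x, y, w, h)` input tuples, are hypothetical stand-ins for your own config and OCR engine output.

```python
import uuid
from typing import Any, Dict, List, Tuple

# (text, x_px, y_px, w_px, h_px): hypothetical OCR engine output format.
OcrWord = Tuple[str, float, float, float, float]


def ocr_words_to_results(
    words: List[OcrWord], img_width: int, img_height: int,
    box_name: str = "bbox", text_name: str = "transcription", to_name: str = "image",
) -> List[Dict[str, Any]]:
    """Emit a rectangle result and a linked textarea result for each OCR word."""
    results: List[Dict[str, Any]] = []
    for text, x, y, w, h in words:
        region_id = uuid.uuid4().hex[:10]  # the shared id links the box to its text
        geometry = {
            "x": 100.0 * x / img_width,
            "y": 100.0 * y / img_height,
            "width": 100.0 * w / img_width,
            "height": 100.0 * h / img_height,
            "rotation": 0,
        }
        results.append({"id": region_id, "from_name": box_name, "to_name": to_name,
                        "type": "rectangle", "value": dict(geometry)})
        results.append({"id": region_id, "from_name": text_name, "to_name": to_name,
                        "type": "textarea", "value": {**geometry, "text": [text]}})
    return results


results = ocr_words_to_results([("INVOICE", 40, 20, 200, 50)], img_width=800, img_height=1000)
```

Annotators then only need to correct the pre-filled text instead of typing it from scratch.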

For document summarization and question-answering tasks, an annotator can now interactively prompt an LLM to help draft the summary or the response, using the dialog as context, much like the ChatGPT UI, and then save a successful prompt for reuse on the next labeling task.
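Label Studio's own mechanism for saving prompts is not shown here. As a generic illustration of why a saved prompt is reusable across tasks, the sketch below treats it as a hypothetical template whose `$dialog` placeholder is filled from each task's data; the field name, the prompt wording, and the sample tasks are all assumptions.

```python
from string import Template
from typing import Dict, List

# Hypothetical prompt an annotator refined interactively and chose to keep.
saved_prompt = Template(
    "Summarize the following dialog in two sentences for a support agent.\n\n$dialog"
)


def render_prompts(template: Template, tasks: List[Dict]) -> List[str]:
    """Fill the saved template once per task.

    Each $placeholder must name a key in task["data"]; substitute() raises
    KeyError otherwise, which surfaces config mismatches early.
    """
    return [template.substitute(task["data"]) for task in tasks]


tasks = [
    {"data": {"dialog": "User: My order is late.\nAgent: Let me check that."}},
    {"data": {"dialog": "User: How do I reset my password?"}},
]
for prompt in render_prompts(saved_prompt, tasks):
    print(prompt)  # send each rendered prompt to your LLM of choice here
```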

Evaluate Model Performance

Once a dataset is annotated, Label Studio Enterprise can help teams evaluate a model's performance by comparing its predictions against the ground-truth labels. The ML backend runs your model to produce automated responses across your dataset, and you can then use Label Studio to review the results, evaluate LLM performance, and inspect AI safety metrics. Model evaluation workflows are a powerful way to test models before production use, identify challenging edge cases, and detect and respond to data drift once a model is deployed.
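How Label Studio Enterprise computes its evaluation metrics is not covered here. As a rough, self-contained illustration of comparing predictions against ground truth for object detection, the sketch below computes intersection over union (IoU) on rectangle values in Label Studio's percent units, then reports recall at an IoU threshold using greedy one-to-one matching. The 0.5 threshold and the sample boxes are assumptions.

```python
from typing import Dict, List


def iou(a: Dict, b: Dict) -> float:
    """Intersection over union of two axis-aligned boxes given as x/y/width/height."""
    ix = max(0.0, min(a["x"] + a["width"], b["x"] + b["width"]) - max(a["x"], b["x"]))
    iy = max(0.0, min(a["y"] + a["height"], b["y"] + b["height"]) - max(a["y"], b["y"]))
    inter = ix * iy
    union = a["width"] * a["height"] + b["width"] * b["height"] - inter
    return inter / union if union > 0 else 0.0


def recall_at_iou(predicted: List[Dict], ground_truth: List[Dict], threshold: float = 0.5) -> float:
    """Fraction of ground-truth boxes matched by a same-label prediction with IoU >= threshold.

    Greedy matching: each ground-truth box takes its best remaining prediction,
    and each prediction can be matched at most once.
    """
    unused = list(predicted)
    matched = 0
    for gt in ground_truth:
        best, best_iou = None, threshold
        for p in unused:
            if p["rectanglelabels"] == gt["rectanglelabels"]:
                score = iou(p, gt)
                if score >= best_iou:
                    best, best_iou = p, score
        if best is not None:
            unused.remove(best)
            matched += 1
    return matched / len(ground_truth) if ground_truth else 1.0


ground_truth = [
    {"x": 10, "y": 10, "width": 50, "height": 50, "rectanglelabels": ["Car"]},
    {"x": 70, "y": 70, "width": 20, "height": 20, "rectanglelabels": ["Person"]},
]
predicted = [{"x": 12, "y": 10, "width": 50, "height": 50, "rectanglelabels": ["Car"]}]
print(f"recall@0.5 IoU: {recall_at_iou(predicted, ground_truth):.2f}")  # 0.50
```

Low-recall tasks found this way are natural candidates for the edge-case review described above.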

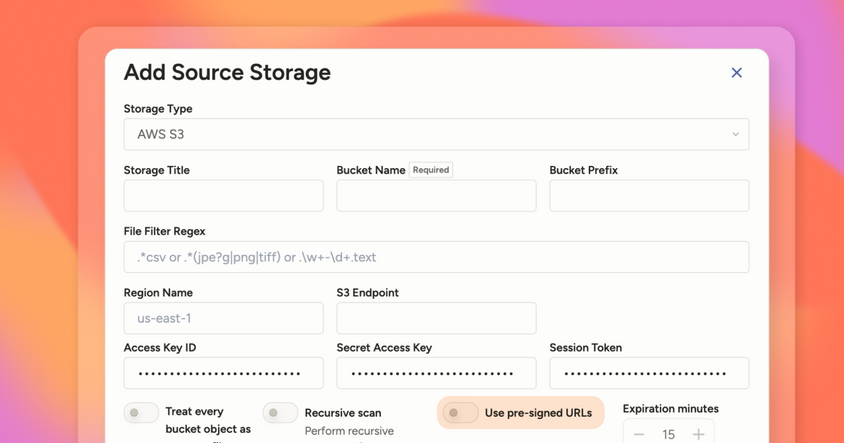

Connecting a model to Label Studio

Some adventurous data teams in our community were already building ML-powered automation for Label Studio, but the process was complex and challenging. This release puts automation within easy reach of any project. Based on community feedback, we've invested heavily in simplifying and streamlining the connection between your model and Label Studio.

Our GitHub quickstart for label-studio-ml lists popular model integrations for labeling tasks: OCR for scanned documents, rapidly outlining complex shapes with Segment Anything or GroundingDINO, and large models like GPT-4 for complex automations ranging from document classification and summarization to ChatGPT-like model evaluation workflows.

To start an integration, clone the label-studio-ml-backend repository, change into the directory of one of the example models, and run `docker-compose up`.

This downloads the model weights and other dependencies automatically, then starts a local web server. The web server acts as a bridge between the model and Label Studio.
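Before connecting the model, it can be worth confirming that the bridge is reachable. In recent versions of the label-studio-ml package, the backend server answers `GET /health` with a small JSON body containing a `status` field (verify this against your version). The sketch assumes that endpoint and uses only the standard library; the base URL is whatever address your docker-compose setup exposes.

```python
import json
import urllib.request


def check_backend_health(base_url: str, timeout: float = 5.0) -> dict:
    """GET <base_url>/health and return the decoded JSON body.

    Raises urllib.error.URLError if the server is unreachable and
    urllib.error.HTTPError if it answers with an error status.
    """
    url = base_url.rstrip("/") + "/health"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


# Usage (the address is a placeholder for wherever your backend is running):
#   status = check_backend_health("http://localhost:9090")
#   print(status.get("status"))
```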

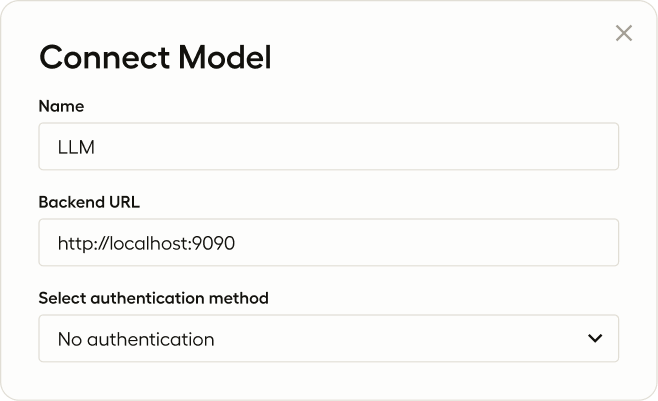

With the backend now running, open Label Studio and go to the settings for one of your projects. Select Settings > Model and then click Connect Model. Here you'll name the model and paste in the URL / port where the model backend is running (the default is ).
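The connection can also be scripted against Label Studio's REST API. The sketch below assumes a `POST /api/ml` endpoint that accepts `url`, `project`, and `title` fields with token authentication; confirm the field names against the API reference for your version. The Label Studio address, token, project ID, and backend URL are all placeholders.

```python
import json
import urllib.request


def connect_model_request(
    ls_url: str, token: str, project_id: int, backend_url: str, title: str
) -> urllib.request.Request:
    """Build (but do not send) a request that registers an ML backend with a project."""
    payload = {"url": backend_url, "project": project_id, "title": title}
    return urllib.request.Request(
        ls_url.rstrip("/") + "/api/ml",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Token {token}", "Content-Type": "application/json"},
        method="POST",
    )


# Placeholder values; send with urllib.request.urlopen(req) once they match your setup.
req = connect_model_request(
    "http://localhost:8080", "YOUR_API_TOKEN", 1, "http://localhost:9090", "My model"
)
```

Building the request separately from sending it keeps the sketch safe to run and easy to inspect before pointing it at a real server.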

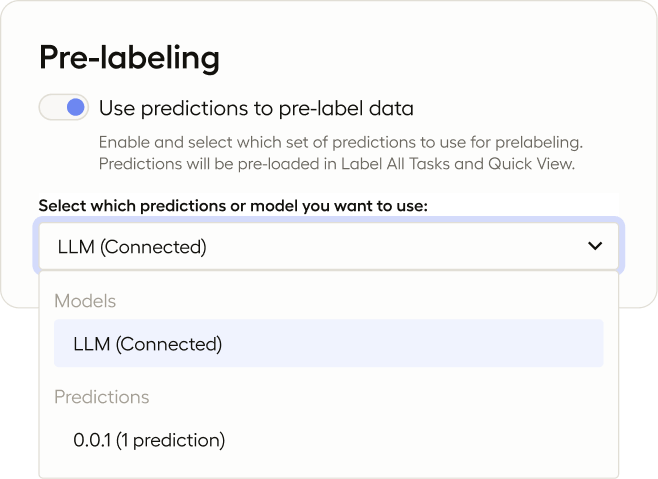

That's it! The model is now ready to accelerate tasks inside Label Studio. For example, go to Settings > Annotation, turn on pre-labeling, and select the name of the model you connected above. Annotators opening labeling tasks will then see predictions generated automatically by the model.

Building your own ML connection

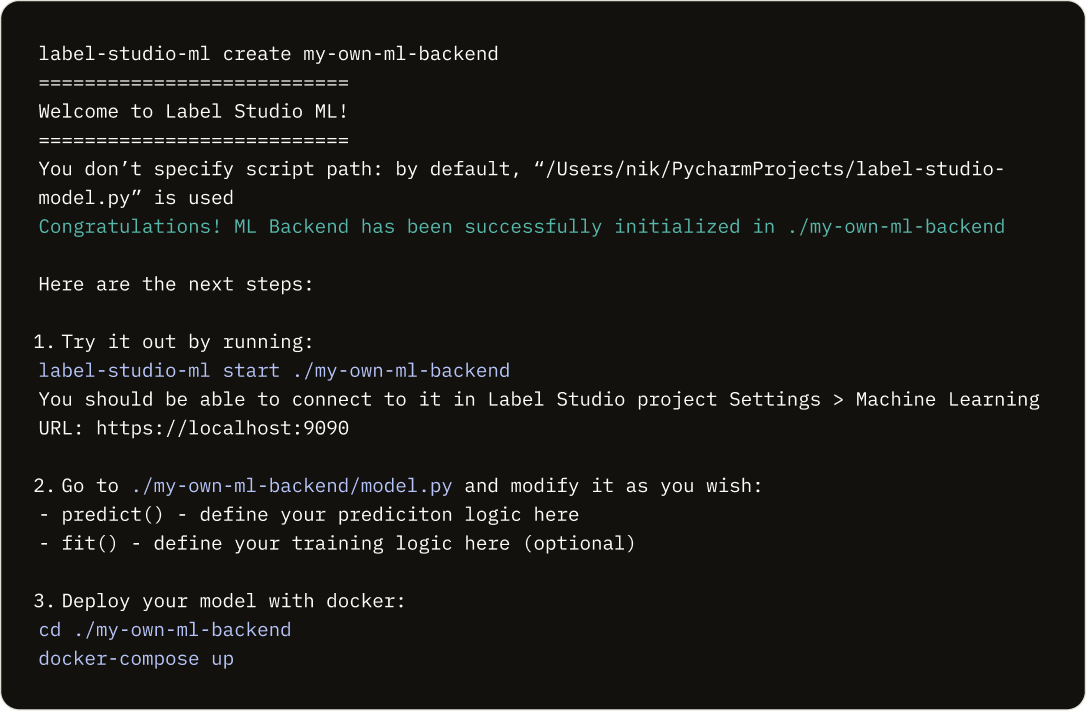

Going further, this release also includes a CLI command to create your own ML backend, using any model you wish.

The ML backend you create will wrap your model with a web server, like the examples above. The web server is optimized to receive prediction requests from Label Studio and return predictions as JSON in the exact shape Label Studio expects.

The project that gets scaffolded by the create command will include a model.py file. Here you'll find wrappers for the API methods that are used by Label Studio to communicate with the model. You can override the methods to implement your own logic.
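A minimal sketch of what overriding `predict()` in the scaffolded model.py might look like. The `LabelStudioMLBase` import and the `predict(tasks, context, **kwargs)` signature follow the label-studio-ml package as we understand it; the toy keyword classifier, the label names, and the `sentiment`/`text` tag names are hypothetical. Newer templates may expect a `ModelResponse` return value rather than a plain list, so check the generated file. A stand-in base class keeps the sketch runnable when the package is not installed.

```python
from typing import Dict, List, Optional, Tuple

try:
    # Real base class from the label-studio-ml package, when it is installed.
    from label_studio_ml.model import LabelStudioMLBase
except ImportError:
    # Stand-in so this sketch runs without the package; NOT the library's class.
    class LabelStudioMLBase:  # type: ignore[no-redef]
        pass


def classify_text(text: str) -> Tuple[str, float]:
    """Hypothetical toy classifier: swap in a call to your real model here."""
    positive_words = {"good", "great", "excellent"}
    hits = sum(word in positive_words for word in text.lower().split())
    return ("Positive", 0.9) if hits else ("Negative", 0.6)


def build_predictions(
    tasks: List[Dict], from_name: str = "sentiment", to_name: str = "text", data_key: str = "text"
) -> List[Dict]:
    """Turn Label Studio tasks into one choices-type prediction per task."""
    predictions = []
    for task in tasks:
        label, score = classify_text(task["data"][data_key])
        predictions.append({
            "model_version": "toy-0.0.1",
            "score": score,
            "result": [{
                "from_name": from_name,  # name of the Choices tag (assumed)
                "to_name": to_name,      # name of the Text tag (assumed)
                "type": "choices",
                "value": {"choices": [label]},
            }],
        })
    return predictions


class SentimentModel(LabelStudioMLBase):
    def predict(self, tasks: List[Dict], context: Optional[Dict] = None, **kwargs) -> List[Dict]:
        # Label Studio sends a batch of tasks; return predictions in the same order.
        return build_predictions(tasks)
```

Keeping the model call in a plain function like `build_predictions` makes it easy to unit-test outside the web server.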

More features in the 1.12.0 release

In addition to the ML backend updates, the Label Studio 1.12 release includes several new features and user experience improvements:

- A new Remove Duplicated Tasks action available in the Data Manager automatically consolidates annotations from duplicated tasks, saving time with dataset preparation.

- Several updates to the UI to improve performance and user experience, including better formatting for longer text strings when using the grid view in the Data Manager.

- A new Reset Cache action that resets and recalculates the labeling cache. This is particularly useful when you modify the labeling configuration and receive a validation error pointing to non-existent labels or drafts.

- Added support for `X-Api-Key: <token>` as an alternative to `Authorization: Token <token>`. This makes it easier to use API keys when integrating with cloud-based services. Special thanks to community member mc-lp for making this feature request.
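For scripts that call the Label Studio API, the two header forms carry the same token; `Authorization: Token <token>` is the long-standing form, and `X-Api-Key` is the 1.12.0 alternative. A small helper, as a sketch; the function name is hypothetical.

```python
from typing import Dict


def auth_headers(token: str, use_api_key_header: bool = False) -> Dict[str, str]:
    """Return the HTTP header that carries a Label Studio API token.

    Both forms carry the same token; X-Api-Key suits clients and cloud
    services that can only attach a bare key header.
    """
    if use_api_key_header:
        return {"X-Api-Key": token}
    return {"Authorization": f"Token {token}"}
```

Either dict can be passed as the `headers` argument of `urllib.request.Request` or a similar HTTP client.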

Find the full list of features and bug fixes in the Label Studio 1.12.0 release notes.

A community framework

Hopefully you're starting to see the power and promise of automated workflows inside of Label Studio and the control and flexibility you have to customize how you use ML to accelerate data annotation and model evaluation.

While we’ve built a few ready-to-deploy examples, our primary goal was to create an integration framework that could empower the entire community to build and share model integrations. What models would help your unique labeling automations? If you use the CLI tool to create your own integration, be sure to submit a PR to the examples repository to share your work.

If you need any help along the way, drop by the Slack community for Label Studio. There you’ll find an active group of over 12,000 other researchers eager to discuss labeling workflows, fine-tuning LLMs, and validating models.

We’re excited to see data teams across the community share and collaborate on their own automations! Here’s to making building ML more efficient, more powerful, and more enjoyable.