Accelerate Image and Video Labeling with Segment Anything 2

Meta has released Segment Anything 2 (SAM 2), a significant upgrade to its widely used foundation model for object segmentation. SAM 2 extends the original model's image segmentation capabilities to video, allowing real-time object segmentation with minimal human intervention.

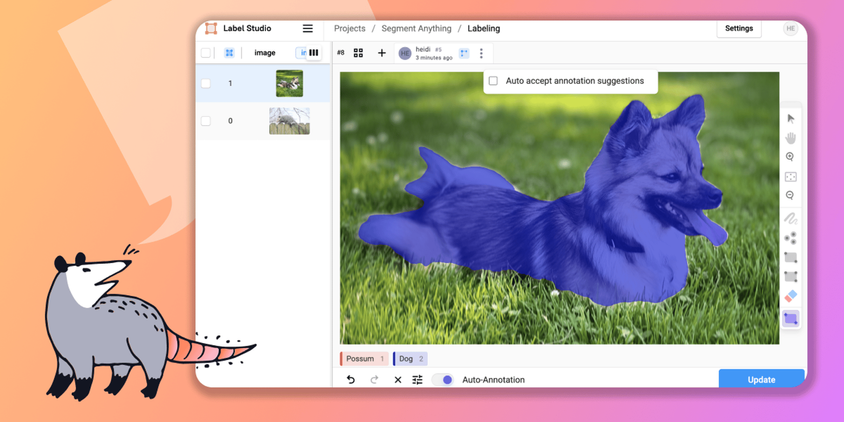

What Segment Anything lacks on its own is a way to view and refine auto-generated masks. That is why it pairs so well with Label Studio, which provides instant visual feedback and an interactive labeling interface for human review. With SAM 2, the process is faster and more accurate than before.

Integrating SAM 2 with Label Studio

Wondering how to get started? Integrating SAM 2 with Label Studio is easier than you might think, thanks to two new ML Backend connectors. In a few simple steps, you can connect SAM 2 to Label Studio and start leveraging its advanced capabilities for your projects.
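As a rough illustration of the connection step, the sketch below builds the HTTP request that would register a running ML backend with a Label Studio project. It is a minimal sketch under assumptions, not the connectors' own setup procedure: the `/api/ml` endpoint, the `url`/`project`/`title` payload fields, the `Token` authorization scheme, and the `localhost` addresses are taken to match a default Label Studio install and should be checked against the API documentation for your version. `build_connect_request` is a hypothetical helper name.

```python
import json
import urllib.request


def build_connect_request(label_studio_url, api_token, project_id, backend_url,
                          title="SAM 2"):
    """Build (but do not send) a request that registers an ML backend.

    Assumption: Label Studio accepts POST /api/ml with a JSON body holding
    the backend URL and the numeric project ID.
    """
    payload = {"url": backend_url, "project": project_id, "title": title}
    return urllib.request.Request(
        url=label_studio_url.rstrip("/") + "/api/ml",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Token {api_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder values: replace with your own instance, token, and project.
    req = build_connect_request(
        "http://localhost:8080", "YOUR_API_TOKEN", 1, "http://localhost:9090"
    )
    print(req.get_method(), req.full_url)
    # To actually send the request: urllib.request.urlopen(req)
```

The same connection can also be made from the project's model settings in the Label Studio UI; the request form is mainly useful when you script project setup.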

Check out this quick video tutorial that walks you through the process:

Ready to dive deeper? Here are some resources to help you out:

- ML Backend Integration for images

- ML Backend Integration for video

- Image Segmentation Template for Label Studio
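For orientation, a labeling configuration for interactive segmentation might look roughly like the sketch below. This is an assumed example rather than the exact template linked above: the tag names (`Image`, `BrushLabels`, `KeyPointLabels`, `RectangleLabels`) and the `smart="true"` attribute are standard Label Studio tags, while the control names (`mask`, `point`, `box`) and the single `Object` label are placeholders. The Python wrapper only checks that the XML is well-formed and lists the controls it defines.

```python
import xml.etree.ElementTree as ET

# Hypothetical labeling config: brush masks as the output, plus "smart"
# keypoint and rectangle tools that send prompts to the ML backend.
LABELING_CONFIG = """
<View>
  <Image name="image" value="$image" zoom="true"/>
  <BrushLabels name="mask" toName="image">
    <Label value="Object" background="#FF0000"/>
  </BrushLabels>
  <KeyPointLabels name="point" toName="image" smart="true">
    <Label value="Object" background="#000000"/>
  </KeyPointLabels>
  <RectangleLabels name="box" toName="image" smart="true">
    <Label value="Object" background="#0000FF"/>
  </RectangleLabels>
</View>
"""


def control_tags(config):
    """Return (tag, name) for every control attached to an object tag."""
    root = ET.fromstring(config.strip())
    return [(el.tag, el.get("name")) for el in root if el.get("toName")]


if __name__ == "__main__":
    for tag, name in control_tags(LABELING_CONFIG):
        print(f"{tag}: {name}")
```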

What’s New in SAM 2?

SAM 2 brings several exciting new features that make it a game-changer for both image and video segmentation:

- Unified Model for Image and Video: SAM 2 combines the capabilities of image and video segmentation into a single, adaptable model that can handle unseen objects across a variety of contexts without requiring custom training.

- Improved Performance and Accuracy: SAM 2 delivers better results with fewer interactions. Meta reports that for video segmentation it achieves higher accuracy while using 3x fewer interactions than previous methods, and that for image segmentation it is 6x faster and more accurate than the original Segment Anything Model (SAM).

- Advanced Visual Challenge Handling: SAM 2 excels at handling complex visual scenarios, such as object occlusion and reappearance, making it a robust choice for challenging tasks.

- Interactive Refinement: Users can iteratively refine segmentation results, making the Label Studio UI an essential tool for achieving high-quality annotations.
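To make interactive refinement concrete, the sketch below shows one way clicks made in a labeling UI could be turned into the point prompts that SAM-style predictors accept: pixel coordinates plus a label of 1 (part of the object) or 0 (not part of the object). It is an illustrative sketch, not the connector's actual code. The result layout (percent-based `x`/`y` with `original_width`/`original_height`) follows Label Studio's keypoint format as assumed here, and the `is_positive` field and the `clicks_to_point_prompts` helper are hypothetical names.

```python
def clicks_to_point_prompts(results):
    """Convert keypoint results into (pixel coordinates, point labels)."""
    coords, labels = [], []
    for r in results:
        if r.get("type") != "keypointlabels":
            continue  # ignore rectangles, brushes, and other result types
        value = r["value"]
        # Positions are assumed to be percentages of the image size.
        x = value["x"] * r["original_width"] / 100.0
        y = value["y"] * r["original_height"] / 100.0
        coords.append([round(x), round(y)])
        # 1 marks a foreground click, 0 a background (corrective) click.
        labels.append(1 if r.get("is_positive", True) else 0)
    return coords, labels


if __name__ == "__main__":
    clicks = [
        # First click: "this is the object".
        {"type": "keypointlabels", "original_width": 640, "original_height": 480,
         "value": {"x": 50.0, "y": 25.0}, "is_positive": True},
        # Refinement click: "this region is not part of it".
        {"type": "keypointlabels", "original_width": 640, "original_height": 480,
         "value": {"x": 10.0, "y": 10.0}, "is_positive": False},
    ]
    print(clicks_to_point_prompts(clicks))
```

Each additional click extends the prompt lists, and the predictor is run again on the full set; that loop is what lets an annotator correct a mask step by step instead of redrawing it.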

Open Source and Human-in-the-Loop

One of the most exciting aspects of SAM 2 is that it’s available under the Apache 2.0 open-source license. This makes cutting-edge technology accessible to all, allowing data science teams everywhere to innovate without barriers.

Meta has also released an extensive video segmentation dataset, SA-V, under an open license (CC BY 4.0), further enabling the community to explore and expand the capabilities of SAM 2. But it’s not just about automation: Meta recognizes the importance of human-in-the-loop workflows for refining and enhancing model performance. Human corrections to the model's predictions can be fed back to improve it, so the tedious work is automated while you focus on more strategic and impactful tasks.

Hosting SAM 2

While SAM 2 brings powerful new capabilities, it also comes with heavier infrastructure requirements. If you’re primarily working with object detection and segmentation in images, the original SAM remains a strong, more accessible choice. But if you need video segmentation or want the latest capabilities, SAM 2 is worth the investment in upgraded infrastructure.

For more in-depth information on SAM 2 and how to integrate it with Label Studio, see Meta's official announcement and the original guide to using Segment Anything with Label Studio.