Scalable Labeling Systems for Faster LLM Tuning

You're in the driver's seat of a high-performance race car, the roar of the engine echoing in your ears, the crowd cheering you on. The race is on, and you're in it to win it. But what happens when your fuel is contaminated or "dirty"? No matter how advanced, your car will sputter, falter, and eventually fail to deliver the performance you need to win the race.

The same is true of machine learning models. They are the race cars of artificial intelligence, designed to deliver fast and accurate results. But just like a race car, they need the right fuel: in this case, high-quality data that has been appropriately prepared. Feed them "dirty" data, and they'll inundate your organization with fast but potentially undesirable results. Just as Ford adopted the motto “Quality is job #1” for its vehicles, Human Signal echoes that principle in the field of data labeling. Scalable labeling systems are the foundation of successful machine learning pipelines, especially for large language models.

Imagine you have a pit crew (a team of data annotators) ready and waiting to ensure your car gets the best fuel quickly and efficiently. That's what a scalable data labeling process offers. It's your pit crew in the AI race, ensuring your machine learning models are powered by the highest quality data, enabling them to perform at their best and keep up with your company's critical AI initiatives. So, buckle up, and let's explore why you need a scalable data labeling process to win in this high-stakes race.

Much of this data 'contamination' can be traced back to one crucial element: data labeling. It's a prevalent problem.

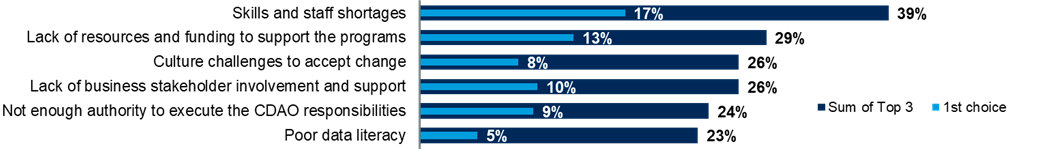

A Gartner survey found that data and analytics leaders who rated themselves “effective” or “very effective” across 17 executive leadership traits were also the ones most likely to report high organizational and team performance. The same study cites skills and staff shortages as the top roadblock for data and analytics initiatives.

[Source: Gartner 2023]

So, what's the lesson here? If staff is in short supply, use high-quality tools to make the data labeling team you already have more efficient and to raise the quality of its work. Using Label Studio, you can take the human element of machine learning and scale it up.

The Challenges in Building a Scalable Data Labeling Process

New data strategies bring new data problems. A few roadblocks in the current data landscape make building a scalable data labeling process challenging.

Volume Management

Modern businesses have become especially adept at creating data, but in general, that data isn’t actionable. Drawing insights, informing decisions, and making action plans is hard. To train ML models alone, data teams often follow the “ten times” rule, a rule of thumb that calls for roughly ten times as many training examples as the model has parameters (degrees of freedom). That means these models need thousands, and certainly no fewer than hundreds, of data points to reference. However, that doesn’t have to mean ten times more staff; it can mean making the staff you have more productive.

Teams constantly discover new things with such large volumes of data in play. Organizations require constant revisions to existing data sets — sometimes more revisions than any team can manage independently. Simply put, automation and some human intervention are an absolute must.

Data Drift

ML models can’t be left to their own devices because the advancements in AI cannot account for every outlier, discrepancy, and nuance in data. ML models work fast, but they can also work wrong.

That leads to significant problems like data drift, where the data a model encounters in production diverges further from the data it was trained on the longer the model is left running on its own. A scalable data labeling process still needs a human's light touch to keep the data fed to these models on the right track.
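One simple way to notice this kind of divergence is to compare the label distribution of a trusted reference batch with that of a recent batch. The sketch below is a minimal illustration, not a Label Studio feature: the function names and the 0.2 threshold are assumptions chosen for the example, and total variation distance is only one of several measures a team might use.

```python
from collections import Counter

# Hypothetical threshold; tune it for your own data.
DRIFT_THRESHOLD = 0.2


def label_distribution(labels):
    """Return the share of each label in a non-empty list of labels."""
    if not labels:
        raise ValueError("labels must not be empty")
    counts = Counter(labels)
    total = len(labels)
    return {label: n / total for label, n in counts.items()}


def total_variation_distance(reference, current):
    """Half the sum of absolute differences between two label distributions.

    0.0 means the distributions are identical; 1.0 means they do not overlap.
    """
    p = label_distribution(reference)
    q = label_distribution(current)
    all_labels = set(p) | set(q)
    return 0.5 * sum(abs(p.get(l, 0.0) - q.get(l, 0.0)) for l in all_labels)


def needs_human_review(reference, current, threshold=DRIFT_THRESHOLD):
    """Flag a batch for human review when its labels drift past the threshold."""
    return total_variation_distance(reference, current) > threshold
```

A batch that trips the threshold is a prompt for annotators to take a closer look, not proof that the model is wrong.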

Quality Assurance

Data quality is critical. Inaccurate data labeling can be a sucker punch to your organization. It ultimately leads to your models processing “bad” data, and your business outcomes might suffer for it.

Take what happened with Amazon reviews in 2021, for instance. An MIT study found Amazon reviews that were labeled as positive when they were actually negative (and vice versa). Labels like these could lead consumers to buy a product they thought had good reviews when it was in fact poorly reviewed. Once that product arrives on their doorstep, they’ll quickly realize that what they paid for is not what they got, and that misleading review labels are to blame (that, or review farms cranking out often humorous but grossly inaccurate reviews). In this case, incorrect data labeling undermines the integrity and reliability of the organization and directly affects the consumer experience.

Label Studio is a flexible data labeling platform that enhances the quality of data labeling through configurable layouts and templates, machine learning-assisted labeling, and seamless integration with your ML/AI pipeline. It allows direct labeling in connected cloud storage and offers a Data Manager for efficient data preparation and management using advanced filters. These features collectively make Label Studio an effective tool for ensuring efficient, accurate, and scalable data labeling, thereby improving the performance of machine learning models.

Bias

Inaccurate labeling can lead to pitfalls other than outputs going awry and unhappy customers. It can also lead to machine learning bias, which has the potential to damage both your company’s culture and reputation.

The science behind AI and ML is still rapidly developing and changing, which means it’s still imperfect and filled with human-made blind spots. For example, TechCrunch reported that data annotators are more likely to label phrases in African American Vernacular English (AAVE) as toxic. ML models trained on those labels then automatically learn to treat AAVE as toxic as well.

What Makes a Scalable Labeling System Work?

A few essential building blocks exist for a scalable and efficient data labeling process. For starters, the process must be:

- Well-documented - If few resources, reference points, or guidelines are available on the data labeling process, then it will be next to impossible to scale.

- Easily onboarded - Data labeling processes need to scale quickly. That means teams can’t spend weeks upon weeks onboarding new annotators — otherwise, they risk falling even further behind.

- Highly consistent between annotators - If annotators can’t come to a consensus on what labeling is accurate, then labeling isn’t very useful in the first place.

- High-quality - As noted above, quality is what matters here. If labels are inconsistent or inaccurate, even a “scaled” data labeling process will be useless to an organization’s greater business needs.

Building a Scalable Data Labeling Process

Now that we’re familiar with the roadblocks facing data teams today, let’s dive into how you can build a scalable data labeling process. We’ll share how data pros and annotators can overcome those challenges.

Define Your Data Labeling Process

It’s key to start with a well-defined and well-documented data labeling process. That way, data teams have a solid ground plan and a single source of truth to build on.

For starters, organizations should have a “style guide” that dictates specific guidelines for each type of data labeling within their process. This allows annotators to have a reference point to always look back to, preventing problems with data drift and quality assurance.

Next, teams should stay up to date by watching for new issues, exceptions, and expectations within their data labeling practice. One way to do this is to let annotators revise the guide themselves (e.g., by maintaining an internal wiki), with changes held for administrator review before they are finalized.

Build a Data Annotation Team

More data usually means more people. As the volume of data your labeling process consumes increases, so does the number of team members you need to bring on board. However, that doesn’t mean you have to scale linearly. We have seen organizations such as Yext scale effectively by increasing their annotators’ productivity 2x to 4x.

Organizations have a few options for building a data annotation team. They can insource with full-time employees, outsource to freelancers, or crowdsource.

Ultimately, the best way to create a data annotation team depends on each organization’s needs, budget, and existing resources.

Use Metrics To Assess Quality

Measuring the quality of your data labeling process with concrete guidelines can be challenging. That’s where metrics, like the Inter-Annotator Agreement (IAA), come in. These standards can provide a general numeric measure of the ongoing quality of data labeling practices. (Label Studio makes this a key part of our annotator analytics.)
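To make the idea of an agreement metric concrete, here is a minimal sketch of Cohen's kappa, one widely used IAA measure for two annotators labeling the same items. It is an illustration only and is not a description of how Label Studio computes agreement; the function name and sample labels are assumptions for the example.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items in order.

    1.0 is perfect agreement; 0.0 is the agreement expected by chance.
    """
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty set of items")

    n = len(labels_a)
    # Observed agreement: share of items where both annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n

    # Chance agreement: probability both pick the same label independently,
    # given how often each annotator uses each label.
    freq_a = Counter(labels_a)
    freq_b = Counter(labels_b)
    expected = sum(freq_a[l] * freq_b[l] for l in set(freq_a) | set(freq_b)) / (n * n)

    if expected == 1.0:
        # Both annotators used a single identical label for every item.
        return 1.0
    return (observed - expected) / (1 - expected)


annotator_1 = ["pos", "pos", "neg", "neg"]
annotator_2 = ["pos", "neg", "neg", "neg"]
kappa = cohens_kappa(annotator_1, annotator_2)  # 0.5
```

Here the annotators agree on three of four items (0.75), but half of that agreement would be expected by chance, so kappa comes out at 0.5. Tracking a score like this over time shows whether guidelines and onboarding are keeping annotators aligned.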

Teams can also introduce metrics into automated tagging. Simply calculate and keep tabs on overall relevance scores to ensure that automated processes stay accurate. Here, light human supervision and AI can team up to make high-quality, high-volume data labeling a reality.
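As a rough sketch of how this could work, the example below accepts automated labels whose score clears a threshold and routes the rest to human annotators, while tracking the average score of the batch. The `Prediction` class, the field names, and the 0.8 threshold are all hypothetical and stand in for whatever your model or tagging service actually returns.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Prediction:
    """Hypothetical record for one automated label."""
    task_id: int
    label: str
    score: float  # model confidence or relevance score between 0 and 1


def route_predictions(predictions, threshold=0.8):
    """Split predictions into auto-accepted labels and ones needing human review."""
    auto_accepted = [p for p in predictions if p.score >= threshold]
    needs_review = [p for p in predictions if p.score < threshold]
    return auto_accepted, needs_review


def average_score(predictions):
    """Average score for a batch; a falling value suggests automation is degrading."""
    return mean(p.score for p in predictions) if predictions else 0.0


batch = [
    Prediction(task_id=1, label="invoice", score=0.95),
    Prediction(task_id=2, label="receipt", score=0.60),
    Prediction(task_id=3, label="invoice", score=0.85),
]
accepted, review_queue = route_predictions(batch)
```

In this batch, tasks 1 and 3 are accepted automatically and task 2 goes to a human, which keeps annotator time focused on the cases where the automation is least sure.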

Run Post-Annotation Quality Checks

When it comes to maintaining quality, one thing is key: Check, double-check, and triple-check again. Human error poses the largest risk to your data set’s potential usefulness. If those errors are allowed to slip through the cracks, they can cause even bigger problems down the line in the data labeling process.

Here, teams can enlist the help of a dedicated quality assurance staff to perform regular reviews of questionable data, data with low relevance, outdated data, and other data red flags.
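One possible way to build that review queue is sketched below: every task where annotators disagreed is sent to QA, plus a random spot-check sample of the tasks where they agreed. The data layout, function name, and 10% sample rate are assumptions made for illustration.

```python
import random


def select_for_review(annotations, sample_rate=0.1, seed=0):
    """Pick tasks for QA review.

    annotations maps a task id to the list of labels given by different annotators.
    Returns (disputed, spot_checks): tasks with conflicting labels, and a random
    sample of the remaining tasks.
    """
    disputed = [tid for tid, labels in annotations.items() if len(set(labels)) > 1]
    disputed_ids = set(disputed)
    agreed = [tid for tid in annotations if tid not in disputed_ids]

    # Always spot-check at least one agreed task when any exist.
    sample_size = min(len(agreed), max(1, round(len(agreed) * sample_rate))) if agreed else 0
    rng = random.Random(seed)  # fixed seed keeps the audit sample reproducible
    spot_checks = rng.sample(agreed, sample_size)
    return disputed, spot_checks


annotations = {
    1: ["cat", "cat"],
    2: ["cat", "dog"],
    3: ["dog", "dog"],
    4: ["cat", "cat"],
    5: ["dog", "cat"],
}
disputed, spot_checks = select_for_review(annotations)
```

Disputed tasks catch visible disagreement, while the spot checks catch cases where annotators agreed with each other but may both have been wrong.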

Win the Race to Efficiently and Effectively Label Your Data

You're not just upgrading your workflow — you're building a scalable labeling system that keeps your AI future-ready. Data teams can easily scale their work with a clearly defined process, established metrics to measure quality, and tools for accurate labeling.

The future of data labeling — especially regarding scalability — relies on the collaboration between humans and machines. Learn more about how intelligent data labeling can take your organization’s data analysis to the next level.

The time is now to invest in a smarter, more scalable data labeling process. Are you ready to turbo-boost your data labeling solution and accelerate your machine-learning model tuning? Get in touch with us today and start your journey towards more precise, more reliable, and more efficient data labeling.

This is an investment in the future success of your AI initiatives. Dive into a world where data quality matters, scale meets precision, and your team can take on new data challenges with confidence. Let's win this race together.

Start building your scalable labeling system today with Label Studio. Whether you’re fine-tuning an LLM or managing high-volume data pipelines, our flexible, human-in-the-loop platform helps teams label faster and smarter.