Open Source AI Chatbots and Assistants: Why They Matter

Over the past few years, chatbots and virtual assistants have shifted from novelty features to core parts of modern applications. Whether answering support questions, guiding users through complex workflows, or serving as intelligent copilots for creative tasks, conversational AI is now a staple in enterprise and consumer products alike.

While many organizations turn to closed-source APIs, a growing number are building with open source AI chatbots and assistants. This shift isn’t just about saving money. It’s about flexibility, control, and the ability to shape conversational systems to match real-world needs.

What Do We Mean by “Open Source AI Assistants”?

An open source AI assistant is a chatbot or conversational system powered by publicly available models, frameworks, and tools. Instead of relying solely on proprietary APIs, teams can build on openly released models such as LLaMA, Mistral, or Falcon for language modeling, and frameworks like Rasa or Haystack for orchestration and deployment. License terms vary from model to model, so it is worth checking them before commercial use.

Open source assistants are not “one-size-fits-all.” They range from lightweight retrieval-based bots that answer FAQs to multimodal copilots that combine reasoning, memory, and tool use. The key distinction is that the building blocks—models, pipelines, and orchestration code—are transparent and modifiable.

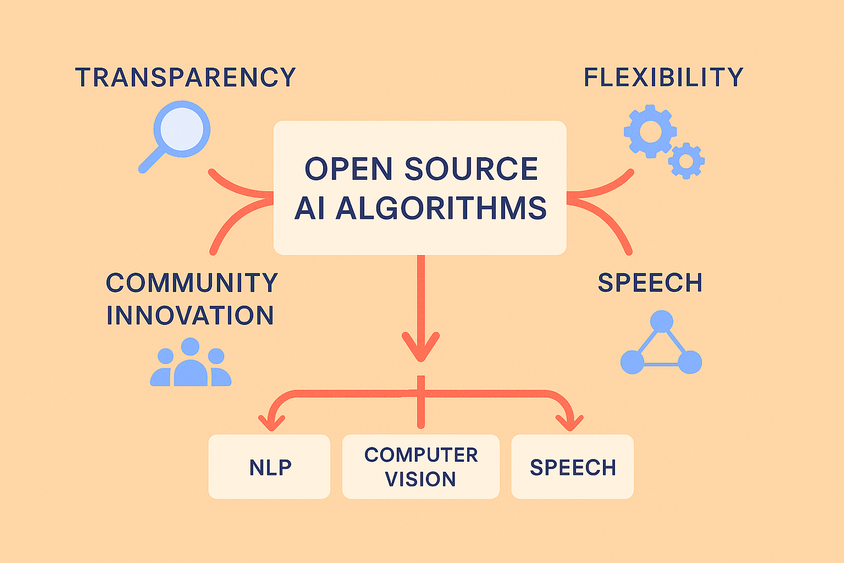

Why Teams Choose Open Source

For machine learning engineers and product teams, there are compelling reasons to go open source:

- Control and customization: Proprietary APIs are optimized for general use. Open source systems let you fine-tune for your data, domain, or compliance needs.

- Cost management: Hosting your own models may be cheaper at scale, particularly when inference can be optimized with quantization or GPU sharing.

- Data governance: Sensitive data never has to leave your environment. This can be essential in healthcare, finance, or government applications.

- Community and innovation: Open source ecosystems evolve rapidly, with contributions from researchers, startups, and enterprises alike.

These benefits come with trade-offs: managing infrastructure, ensuring scalability, and keeping up with updates. But for many teams, the long-term control outweighs the initial overhead.
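To make the quantization point concrete, here is a rough back-of-the-envelope sketch of how weight precision affects the memory a model needs. It counts weights only (no activations, KV cache, or runtime overhead), and the 7-billion-parameter size is just an illustrative assumption, not a figure from any particular deployment.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory for model weights only, in GB (1 GB = 1e9 bytes).

    Ignores activations, KV cache, and framework overhead, so real usage
    will be higher than this estimate.
    """
    bytes_total = num_params * bits_per_param / 8
    return bytes_total / 1e9


# Hypothetical 7-billion-parameter model at three common precisions.
for bits in (16, 8, 4):
    print(f"{bits}-bit weights: {weight_memory_gb(7e9, bits):.1f} GB")
```

Under these assumptions, dropping from 16-bit to 4-bit weights cuts the footprint to a quarter, which can be the difference between needing a data-center GPU and fitting on a smaller card. Quantization may also affect output quality, so it is worth evaluating on your own tasks.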

Real-World Applications

- Customer Support: Companies are deploying open source assistants trained on product documentation and ticket history. Unlike generic chatbots, these systems can incorporate domain-specific terminology and escalation workflows.

- Developer Tools: Open source copilots help engineers query internal APIs, generate code snippets, or assist in debugging. Because the models can be fine-tuned or extended, organizations can ensure outputs align with coding standards.

- Healthcare Triage: Hospitals are experimenting with assistants that guide patients through intake processes or explain treatment options. Open source frameworks allow them to keep sensitive medical data within secure infrastructure while adapting the assistant to regulatory requirements.

- Research and Education: Universities and labs use open source assistants as teaching aids, enabling students to build, critique, and extend real systems rather than treating conversational AI as a “black box.”

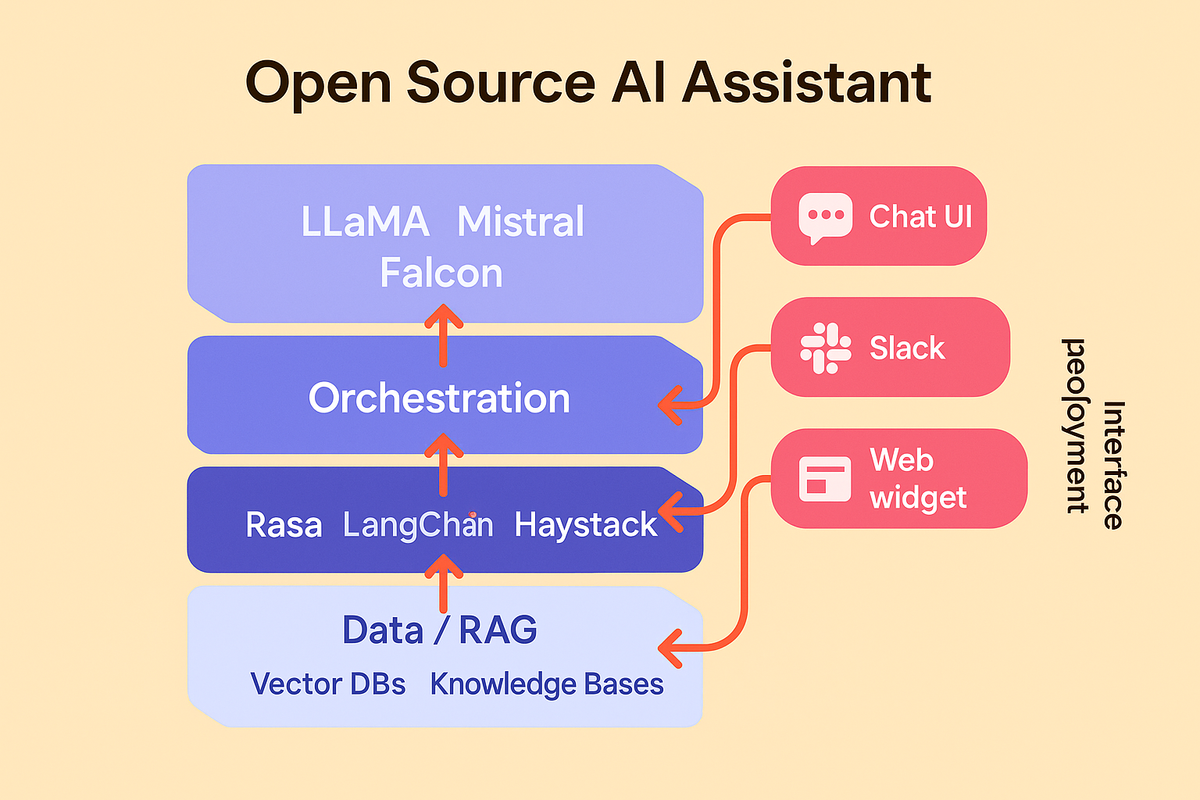

Building Blocks of an Open Source Assistant

To understand where open source fits in, it helps to break down the architecture:

- Foundation Models: Large language models (e.g., LLaMA, Mistral, Falcon) provide core language understanding and generation.

- Orchestration Layer: Frameworks like Rasa, LangChain, or Haystack handle intent detection, memory, and routing to external tools.

- Data Layer: Retrieval-augmented generation (RAG) pipelines integrate enterprise knowledge bases, ensuring answers are grounded in trusted data.

- Interface and Deployment: Chat UIs, Slack bots, or embedded widgets bring assistants into user workflows, while containerization and Kubernetes handle scaling.

Each of these layers has open source options, giving teams flexibility in how much they adopt and where they might integrate proprietary services.
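The layering above can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions, not how Rasa, LangChain, or Haystack actually implement it: retrieval is simple keyword overlap rather than vector search, `generate` is a placeholder standing in for a real foundation model, and every name here (`Document`, `KNOWLEDGE_BASE`, `retrieve`, `build_prompt`, `answer`) is hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass
class Document:
    title: str
    text: str


# Data layer: a tiny in-memory knowledge base (illustrative content only).
KNOWLEDGE_BASE = [
    Document("Password reset", "To reset your password, open Settings and choose Reset password."),
    Document("Billing", "Invoices are issued on the first day of each month."),
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: list[Document], top_k: int = 2) -> list[Document]:
    """Rank documents by keyword overlap with the query; drop non-matches."""
    query_tokens = tokenize(query)
    scored = [(len(query_tokens & tokenize(d.text)), d) for d in docs]
    matches = [(score, d) for score, d in scored if score > 0]
    matches.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in matches[:top_k]]


def build_prompt(query: str, context: list[Document]) -> str:
    """Orchestration layer: ground the question in the retrieved context."""
    context_block = "\n".join(f"[{d.title}] {d.text}" for d in context)
    return f"Answer using only this context:\n{context_block}\n\nQuestion: {query}"


def generate(prompt: str) -> str:
    """Foundation model layer: placeholder; a real system would call an LLM here."""
    return f"[model output for a prompt of {len(prompt)} characters]"


def answer(query: str) -> str:
    """Interface layer entry point: retrieve, build the prompt, generate."""
    context = retrieve(query, KNOWLEDGE_BASE)
    return generate(build_prompt(query, context))


print(answer("How do I reset my password?"))
```

Because each layer is a separate function, any one of them can be swapped out, for example replacing `retrieve` with a vector store or `generate` with a self-hosted model, without touching the others.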

When to Use an Open Source Assistant

Open source isn’t always the right path. If you need to prototype quickly with minimal setup, a closed API may be faster. If compliance, cost predictability, or deep customization matter, open source shines.

A good rule of thumb:

- Use proprietary APIs when speed to market is the priority.

- Go open source when you want long-term control, adaptability, and assurance that your data and workflows remain yours.

For many teams, a hybrid strategy works best—using open source for sensitive or core workloads and APIs for lower-stakes tasks.
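One way to picture a hybrid setup is a small router that decides, per request, which backend should handle it. The sketch below is purely illustrative: the backend names `self_hosted` and `hosted_api` and the patterns are assumptions, and a keyword check like this is far too crude for real compliance work, where a proper data classification policy would be needed.

```python
import re

# Hypothetical markers of sensitive content; a real system would rely on a
# vetted data classification policy rather than a short regex list.
SENSITIVE_PATTERNS = [
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),  # looks like a US Social Security number
    re.compile(r"\b(patient|diagnosis|account number)\b", re.IGNORECASE),
]


def choose_backend(message: str) -> str:
    """Send sensitive-looking requests to the self-hosted model, others to an API."""
    if any(pattern.search(message) for pattern in SENSITIVE_PATTERNS):
        return "self_hosted"
    return "hosted_api"


for text in ("Summarize this patient intake note", "Draft a tagline for our launch"):
    print(f"{text!r} -> {choose_backend(text)}")
```

Defaulting to the hosted API when nothing matches keeps the example short; a more cautious design might default to the self-hosted path and only send traffic out when a request is explicitly cleared.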

Frequently Asked Questions

What’s the difference between an open source and a proprietary chatbot?

Open source chatbots use transparent, modifiable frameworks and models, while proprietary ones rely on closed APIs managed by a vendor.

Can open source assistants match commercial tools in quality?

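Often, yes, particularly for well-scoped tasks. With domain-specific fine-tuning and retrieval pipelines, open source systems can be competitive with proprietary APIs on specialized tasks, though results depend on the model, the data, and how quality is evaluated.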

Do open source assistants require GPUs?

Not always. Smaller models can run on CPUs, but GPU acceleration is generally needed for real-time performance at scale.