Using the Segment Anything Model Integration

At our last community town hall, we explored the Segment Anything Model (SAM) integration with Label Studio, made possible by valued community member Shivansh Sharma.

Whether you’re a seasoned pro or just getting started in computer vision, image annotation, or machine learning, we’ve got you covered. Let’s begin with the fundamentals of Segment Anything and its integration with Label Studio.

What is the Segment Anything Model?

The Segment Anything Model (SAM) is a powerful model that separates individual objects in an image. Meta (formerly known as Facebook) created this open-source model to segment objects of nearly any kind in any given image, rather than only objects from a fixed set of classes.

Curious to learn more? Check out Segment Anything’s website for a demo of SAM’s features.

SAM can efficiently pick out the different objects in an image and generate segmentation masks for them. Before SAM, segmentation annotation was done primarily by hand, using polygons, bounding boxes, or magic wand tools. SAM is a significant step forward in ease of use and accuracy compared to these options. Computer vision researchers and image annotators rejoice!

Label Studio is a flexible, open source data labeling tool used by tinkerers, students, and professionals of all types, from small startups to enterprise organizations. Its highly configurable interface, its ability to assign many kinds of labels to many different data types, and its extensibility across the entire MLOps ecosystem make it a great platform for applying novel solutions to old problems.

All of these features made it possible for community contributor Shivansh to wrap SAM within the Label Studio ML backend, making SAM available to any Label Studio user.

From the original pull request onward, all updates and further development have happened in the open, so collaborators worldwide can participate in the effort.

Segment Anything Walkthrough

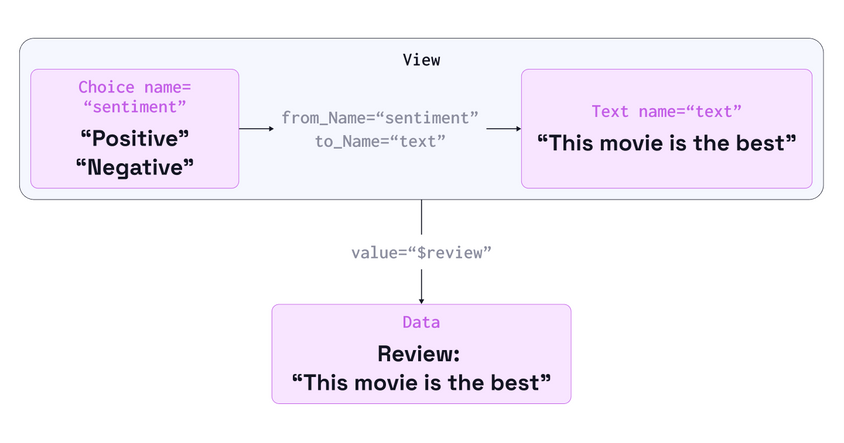

Label Studio passes the image and the annotator’s input to the SAM backend, which runs as a Label Studio ML backend. The SAM backend uses this data to generate an image segmentation based on the user-selected key points and the original image.
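Label Studio stores image annotation coordinates as percentages of the image’s width and height, while SAM works in pixel coordinates, so a backend has to convert between the two. The sketch below shows that conversion for a single key point. It is a minimal illustration, not code taken from the SAM backend: the function name `keypoint_to_pixels` is hypothetical, and the input dictionary is an assumed, trimmed-down version of a Label Studio keypoint result (`value.x` and `value.y` in percent, plus `original_width` and `original_height` in pixels).

```python
from typing import Any, Dict, Tuple


def keypoint_to_pixels(result: Dict[str, Any]) -> Tuple[float, float]:
    """Convert a keypoint result from percent coordinates to pixels.

    Assumed input shape (simplified Label Studio result): value["x"] and
    value["y"] are percentages (0-100) of the image size, and
    original_width / original_height give that size in pixels.
    """
    width = result["original_width"]
    height = result["original_height"]
    value = result["value"]
    # Multiply before dividing so round percentages give exact pixel values.
    x_px = value["x"] * width / 100.0
    y_px = value["y"] * height / 100.0
    return x_px, y_px


# Hypothetical key point placed on a 640x480 image.
example = {
    "original_width": 640,
    "original_height": 480,
    "type": "keypointlabels",
    "value": {"x": 25.0, "y": 50.0, "keypointlabels": ["Banana"]},
}
print(keypoint_to_pixels(example))  # (160.0, 240.0)
```

The same percent-to-pixel idea applies in reverse when a result is sent back to Label Studio for display.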

Curious to get started with the ML backend? Check out the “Introduction to Machine Learning” tutorial.

The segmentation mask, along with the label the annotator selected, is then passed back to Label Studio, where the labeling interface displays the predicted mask. From there, the user can refine the generated mask with Label Studio’s other annotation tools. This tight integration loop makes the annotation workflow dramatically more accurate and efficient.

Key Point Integration

When he was building the plugin, the first thing that Shivansh focused on was key point integration. Key point integration allows users to place a key point on an object within any given image, giving the model a reference point to identify the object and make the segmentation prediction.
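In SAM’s own Python package (`segment_anything`), a key point prompt is a set of (x, y) pixel coordinates paired with one label per point, where `1` marks a foreground point on the object. `SamPredictor.predict` takes these as the NumPy arrays `point_coords` (shape N×2) and `point_labels` (shape N). The sketch below only assembles those two inputs from pixel-space key points, using plain lists so it runs without NumPy or a model checkpoint. `build_point_prompt` is a hypothetical helper; this is not a claim about how the Label Studio backend structures its code.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def build_point_prompt(points: List[Point]) -> Tuple[List[List[float]], List[int]]:
    """Turn pixel-space key points into SAM-style point prompt inputs.

    Returns (coords, labels): coords is an N x 2 list of [x, y] pixels and
    labels holds one entry per point, with 1 meaning "foreground".
    """
    coords = [[float(x), float(y)] for x, y in points]
    # Every key point placed by the annotator marks the object to segment.
    labels = [1 for _ in coords]
    return coords, labels


coords, labels = build_point_prompt([(160, 240)])
print(coords)  # [[160.0, 240.0]]
print(labels)  # [1]
```

With the real package, these lists would be wrapped in `numpy.array(...)` and passed to `predict` after calling `set_image` once on the image being annotated.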

After the initial selection, users can refine the annotation with the eraser tool to remove unwanted parts or the brush tool to add missing detail. Key point integration works well with nearly any image, and it is usually accurate enough that users don’t need further adjustments.

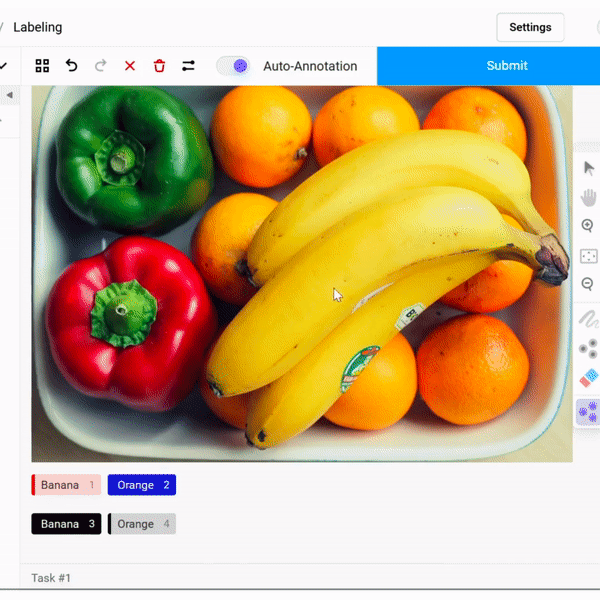

Here’s an example of key point integration with the Segment Anything Model. Two key points are placed, one on a banana and one on an orange, each with its assigned label.

The annotator can choose whatever labels suit their needs. The next example uses an image from the EgoHands dataset for hand segmentation to demonstrate the process. Once the annotator selects a class, such as an arm, and places a key point on it, the tool quickly selects the entire object.

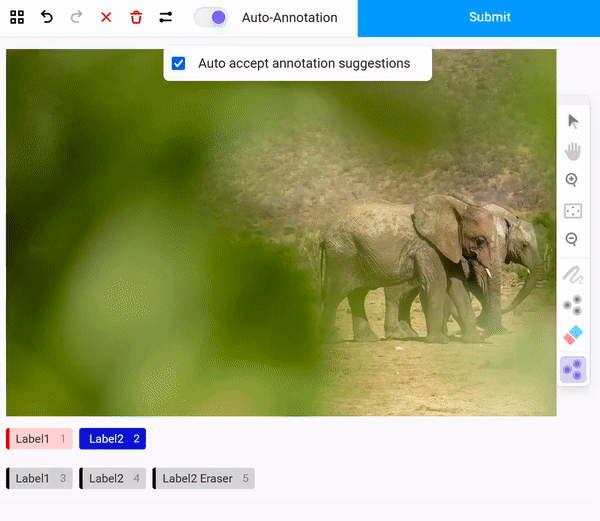

There are some instances, however, where SAM may select more of an object than you would like. For example, you may want to select only a person’s arm, but SAM may segment the entire person. This highlights the importance of Label Studio’s annotation refinement tools. The eraser tool can clean up segmentation spillover, the brush tool can catch missed parts of the object, and bounding boxes can limit the region in which segmentation takes place. Together, these tools help annotators improve segmentation accuracy.
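The brush and eraser adjustments described above come down to simple set operations on a mask’s pixels: the brush adds pixels and the eraser removes them. The sketch below models a mask as a set of `(row, col)` pixels to make that concrete. It illustrates the idea only; it is not how Label Studio stores brush masks (Label Studio uses its own encoded format), and `refine_mask` is a hypothetical name. It also assumes all brush strokes are applied before all eraser strokes, whereas a real editor applies strokes in the order the annotator draws them.

```python
from typing import Set, Tuple

Pixel = Tuple[int, int]  # (row, col)


def refine_mask(mask: Set[Pixel], brush: Set[Pixel], eraser: Set[Pixel]) -> Set[Pixel]:
    """Add brushed pixels to a mask, then remove erased pixels."""
    return (mask | brush) - eraser


# The predicted mask spilled onto (0, 2) and missed (1, 0).
predicted = {(0, 0), (0, 1), (0, 2)}
refined = refine_mask(predicted, brush={(1, 0)}, eraser={(0, 2)})
print(sorted(refined))  # [(0, 0), (0, 1), (1, 0)]
```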

Bounding Box and Segmentation Subtraction Features

Bounding boxes can help isolate regions of an image so that you select just part of an object rather than the whole thing. For example, to select only a person’s arm, you can draw a bounding box around the arm, and SAM will focus on just that area. This speeds up the process and reduces the need for extensive adjustments with the eraser and brush tools.
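SAM accepts a box prompt as four pixel coordinates in XYXY order (left, top, right, bottom); in the `segment_anything` package this is the `box` argument of `SamPredictor.predict`. Label Studio rectangle results instead store the top-left corner plus a width and height, all as percentages of the image size. Here is a minimal conversion sketch, assuming a simplified rectangle result and a hypothetical helper named `rectangle_to_xyxy`:

```python
from typing import Any, Dict, List


def rectangle_to_xyxy(result: Dict[str, Any]) -> List[float]:
    """Convert a percent-based rectangle result to an [x0, y0, x1, y1] pixel box.

    Assumed input shape (simplified Label Studio result): value holds x, y
    (top-left corner), width and height, each as a percentage (0-100).
    """
    w = result["original_width"]
    h = result["original_height"]
    v = result["value"]
    x0 = v["x"] * w / 100.0
    y0 = v["y"] * h / 100.0
    x1 = (v["x"] + v["width"]) * w / 100.0
    y1 = (v["y"] + v["height"]) * h / 100.0
    return [x0, y0, x1, y1]


# Hypothetical box drawn around an arm on a 640x480 image.
arm_box = {
    "original_width": 640,
    "original_height": 480,
    "type": "rectanglelabels",
    "value": {"x": 12.5, "y": 25.0, "width": 50.0, "height": 50.0},
}
print(rectangle_to_xyxy(arm_box))  # [80.0, 120.0, 400.0, 360.0]
```

This sketch ignores rectangle rotation; a rotated box would need its corners transformed before being reduced to an axis-aligned XYXY box.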

In addition to the key point and bounding box features, contributors are working on an upcoming enhancement that lets users subtract parts of the selected object. This comes in handy when you want to exclude part of the background from the selection.
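One building block relevant to subtraction already exists in SAM itself: in its point prompts, a point labeled `1` is treated as foreground and a point labeled `0` as background, which lets a prompt say "not this part." The sketch below assembles a prompt that mixes both kinds of clicks. It is an assumption about how subtraction clicks could map onto SAM’s inputs, not a description of the upcoming Label Studio feature, and `build_mixed_prompt` is a hypothetical helper.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def build_mixed_prompt(
    keep: List[Point], subtract: List[Point]
) -> Tuple[List[List[float]], List[int]]:
    """Combine 'keep' and 'subtract' clicks into SAM-style point inputs.

    Label 1 marks a foreground point (part of the object) and label 0 a
    background point (a region to leave out of the mask).
    """
    coords = [[float(x), float(y)] for x, y in keep + subtract]
    labels = [1] * len(keep) + [0] * len(subtract)
    return coords, labels


# Keep the object under the first click, exclude the area under the second.
coords, labels = build_mixed_prompt(keep=[(160, 240)], subtract=[(300, 90)])
print(coords)  # [[160.0, 240.0], [300.0, 90.0]]
print(labels)  # [1, 0]
```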

Keep in mind that this feature is still in development, so please report any issues or places where it could be improved. In the meantime, if you need to select part of an image more precisely, we recommend using the bounding box feature instead.

Once fully integrated, this subtraction functionality will further enhance the annotation process and accommodate various use cases.

SAM 🤝 Label Studio = 🚀

The potential use cases for this integration are endless. Try it out for yourself and explore how the Label Studio SAM ML backend can speed up segmentation on your own datasets.

With ongoing development of both SAM and the Label Studio SAM integration, we can expect further improvements along the way. More efficient image annotation is just one perk of SAM: the model can also be employed for fine-tuning tasks, video segmentation, or extracting captions based on the objects detected within images.

The model’s versatility and ease of integration make it a valuable addition to many applications across multiple fields.

The SAM integration is an official component of the Label Studio ML Backend. If you’re interested in using computer vision models for data labeling, head over to the ML backend examples and try it out on your own. If you’re new to Label Studio and looking for a way to get started, read the “Zero to One with Label Studio” tutorial, followed by the “Introduction to Machine Learning” tutorial.

Integrations like this are only possible through community contributions. Join the Label Studio community, where you can meet community champions like Shivansh, and let us know what you think!