Open Source Data Labeling Platform

The most flexible data labeling platform to fine-tune LLMs, prepare training data or validate AI models.


# Install the package
# into python virtual environment
pip install -U label-studio
# Launch it!
label-studio

# Install the cask
brew install humansignal/tap/label-studio
# Launch it!
label-studio

# clone repo
git clone https://github.com/HumanSignal/label-studio.git
# install dependencies
cd label-studio
pip install poetry
poetry install
# apply db migrations
poetry run python label_studio/manage.py migrate
# collect static files
poetry run python label_studio/manage.py collectstatic
# launch
poetry run python label_studio/manage.py runserver

# Run latest Docker version
docker run -it -p 8080:8080 -v `pwd`/mydata:/label-studio/data heartexlabs/label-studio:latest
# Now visit http://localhost:8080/

Label every data type.

GenAI

LLM Fine-Tuning

Label data for supervised fine-tuning or refine models using RLHF

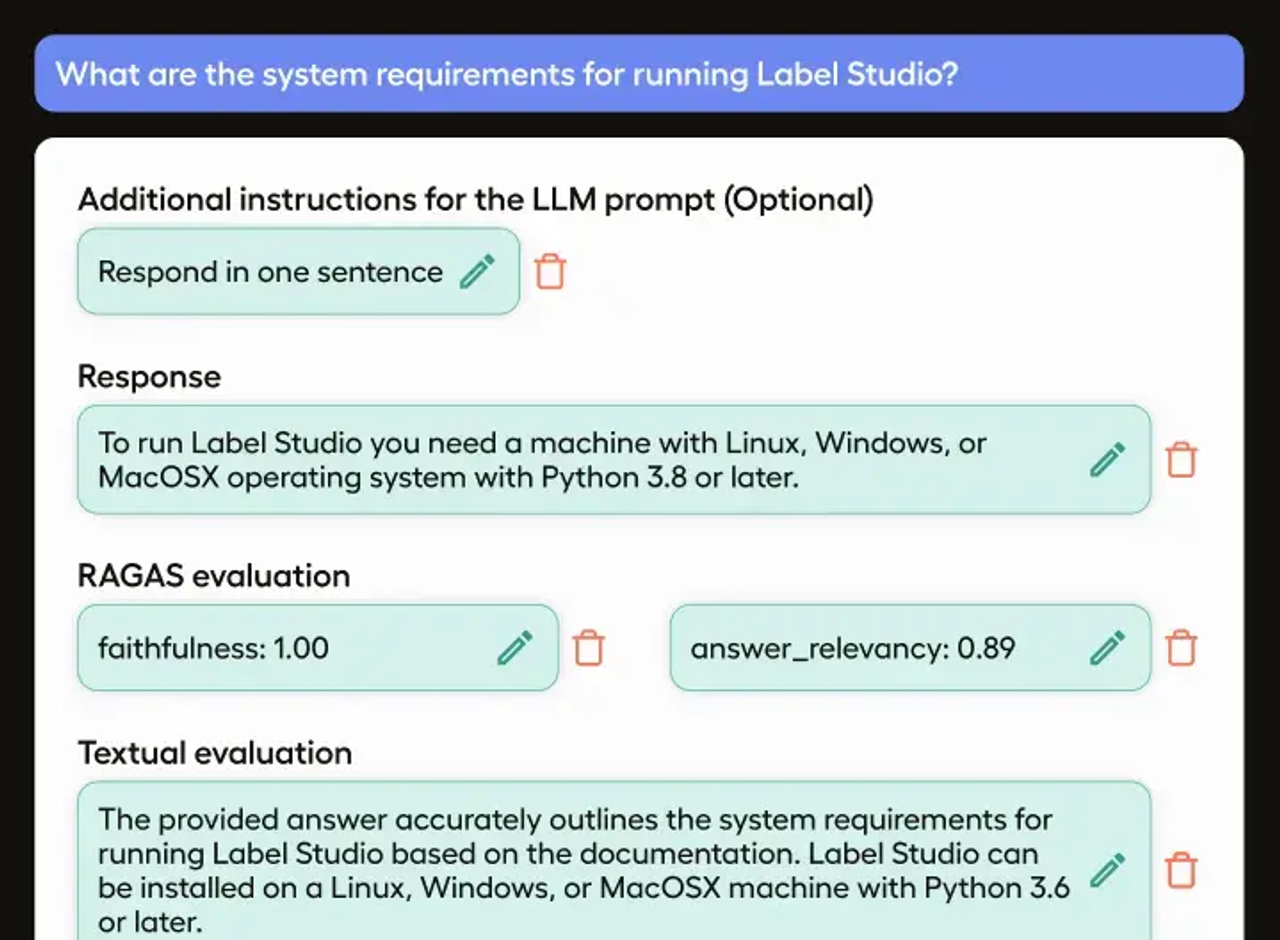

LLM Evaluations

Response moderation, grading, and side-by-side comparison

RAG Evaluation

Use Ragas scores and human feedback


Computer Vision

Image Classification

Put images into categories

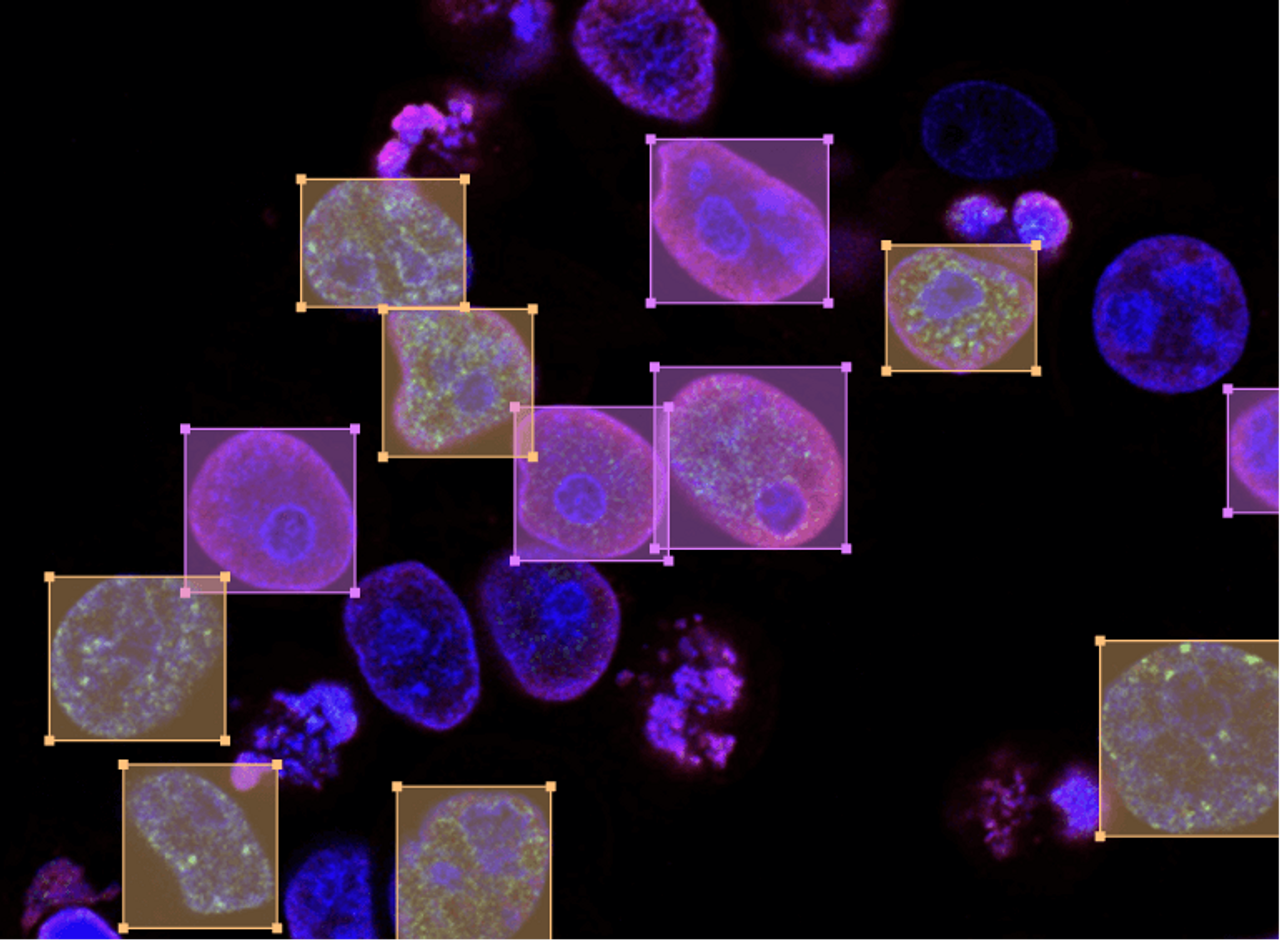

Object Detection

Detect objects in images. Bounding boxes, polygons, circles, and keypoints are supported

Semantic Segmentation

Partition an image into multiple segments. Use ML models to pre-label and speed up the process
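For object detection, existing bounding boxes usually come in pixel coordinates, while Label Studio's rectangle regions are expressed as percentages of the image width and height. The following is a minimal sketch of that conversion; the function name `pixel_box_to_percent` and the sample numbers are illustrative assumptions, not part of Label Studio.

```python
def pixel_box_to_percent(x_min, y_min, x_max, y_max, image_width, image_height):
    """Convert a pixel-space box to percent-based x, y, width, height.

    Hypothetical helper: the returned keys mirror the fields used for
    rectangle regions, with every value on a 0-100 scale.
    """
    if image_width <= 0 or image_height <= 0:
        raise ValueError("image dimensions must be positive")
    return {
        "x": 100.0 * x_min / image_width,
        "y": 100.0 * y_min / image_height,
        "width": 100.0 * (x_max - x_min) / image_width,
        "height": 100.0 * (y_max - y_min) / image_height,
    }


# Example: a 200x200 pixel box inside a 1000x500 image.
box = pixel_box_to_percent(50, 100, 250, 300, image_width=1000, image_height=500)
print(box)
```

Percent-based coordinates keep a region valid when the image is displayed at a different size than the original.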


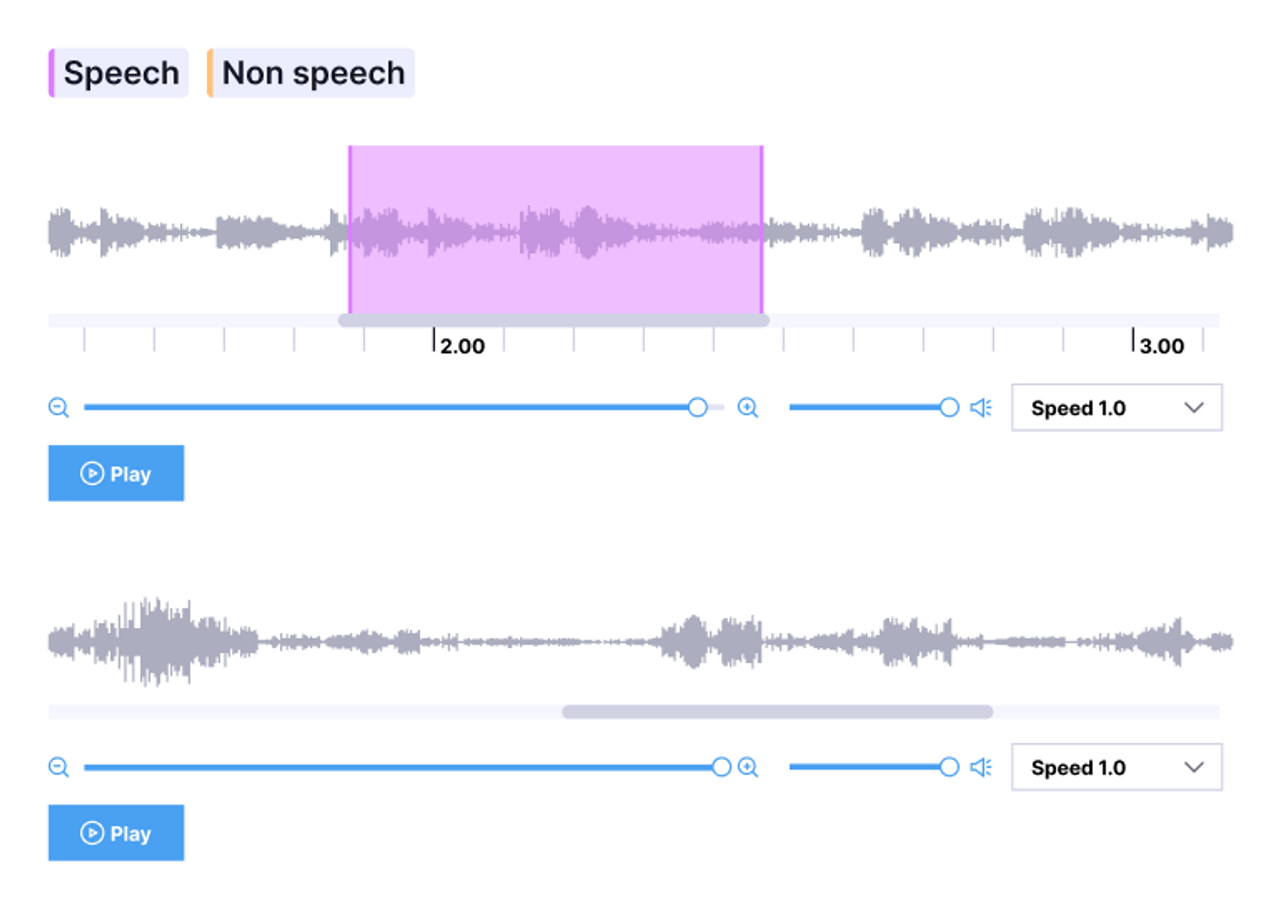

Audio & Speech Applications

Classification

Put audio into categories

Speaker Diarization

Partition an input audio stream into homogeneous segments according to the speaker identity

Emotion Recognition

Identify and tag emotions in audio

Audio Transcription

Transcribe speech into text


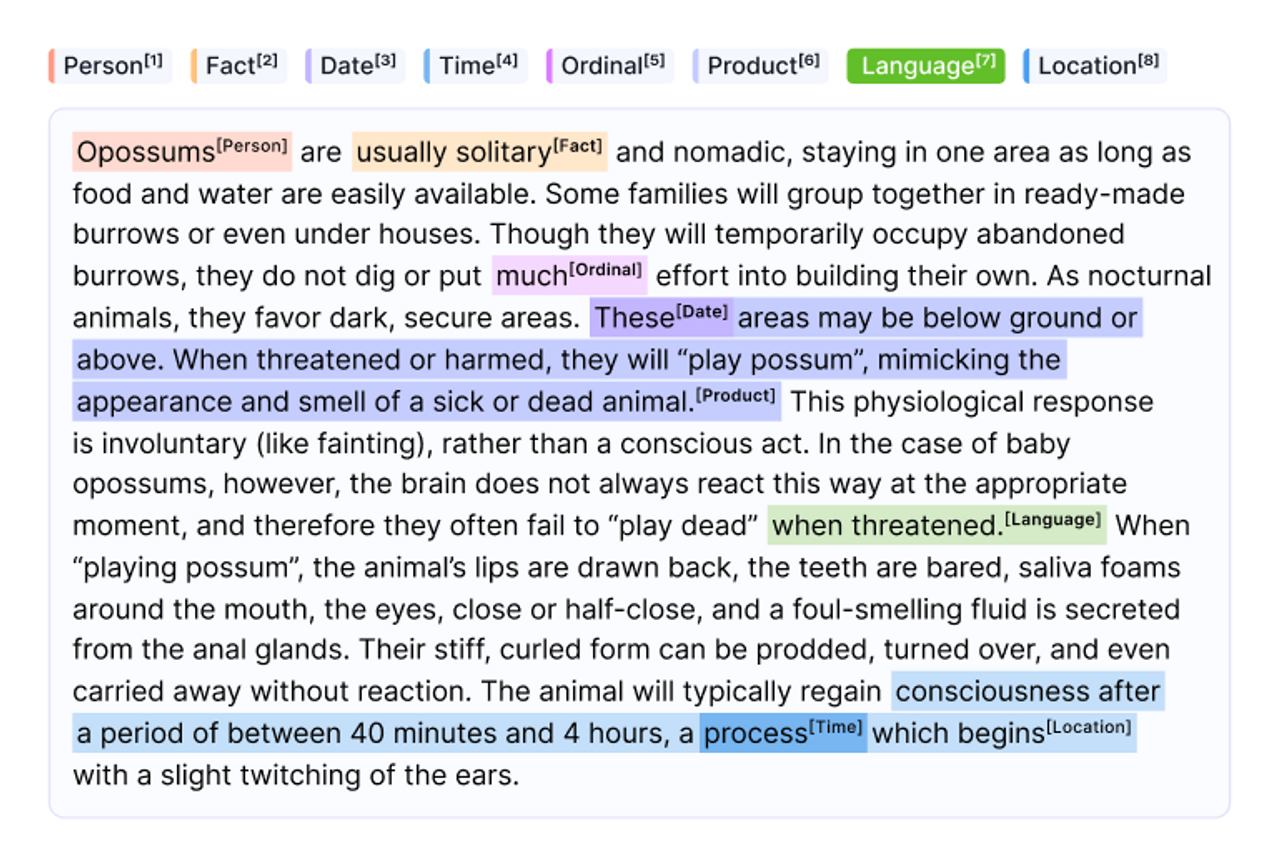

NLP, Documents, Chatbots, Transcripts

Classification

Classify documents into one or more categories. Use taxonomies of up to 10,000 classes

Named Entity

Extract and put relevant bits of information into pre-defined categories

Question Answering

Answer questions based on context

Sentiment Analysis

Determine whether a document is positive, negative or neutral


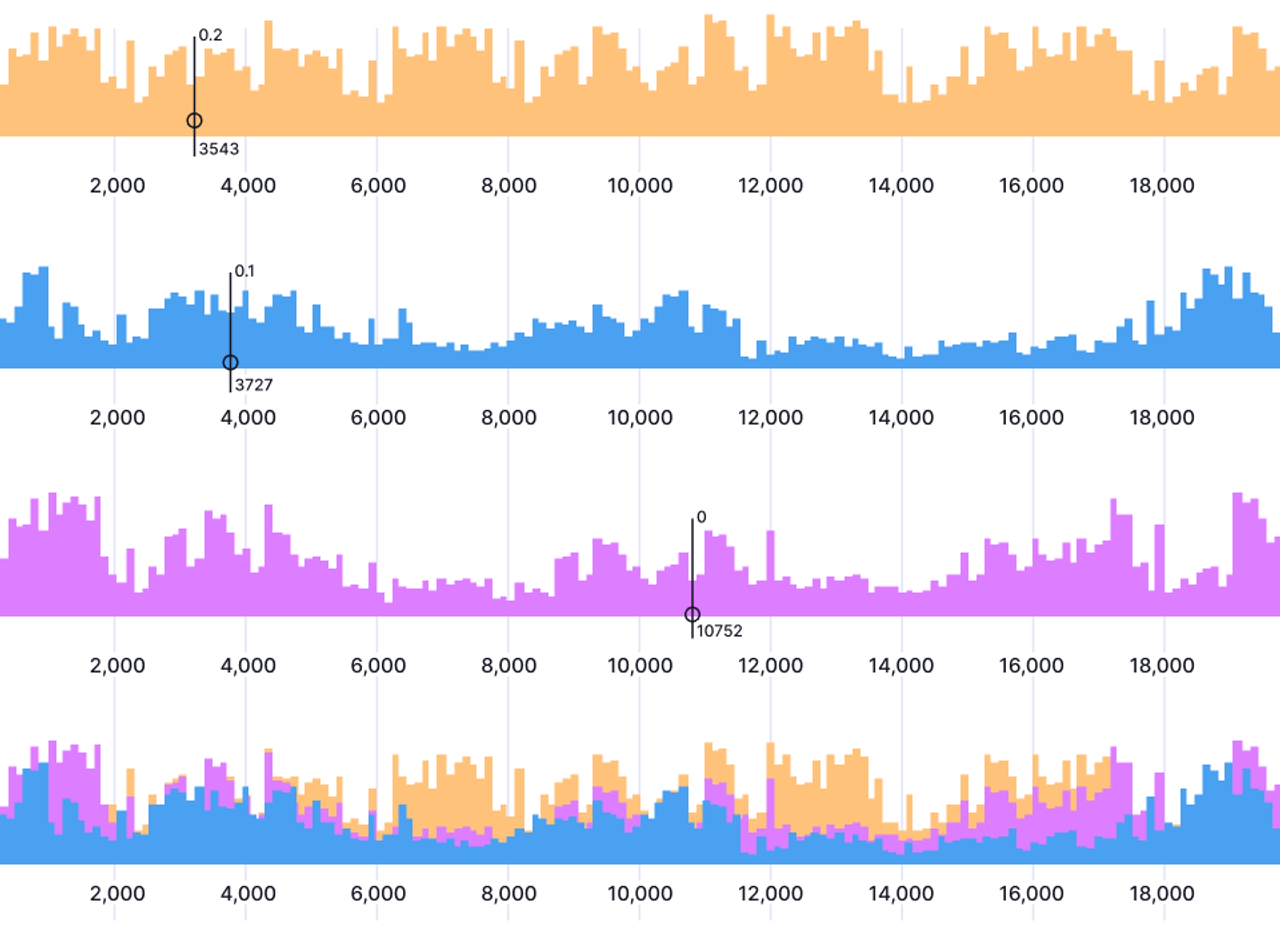

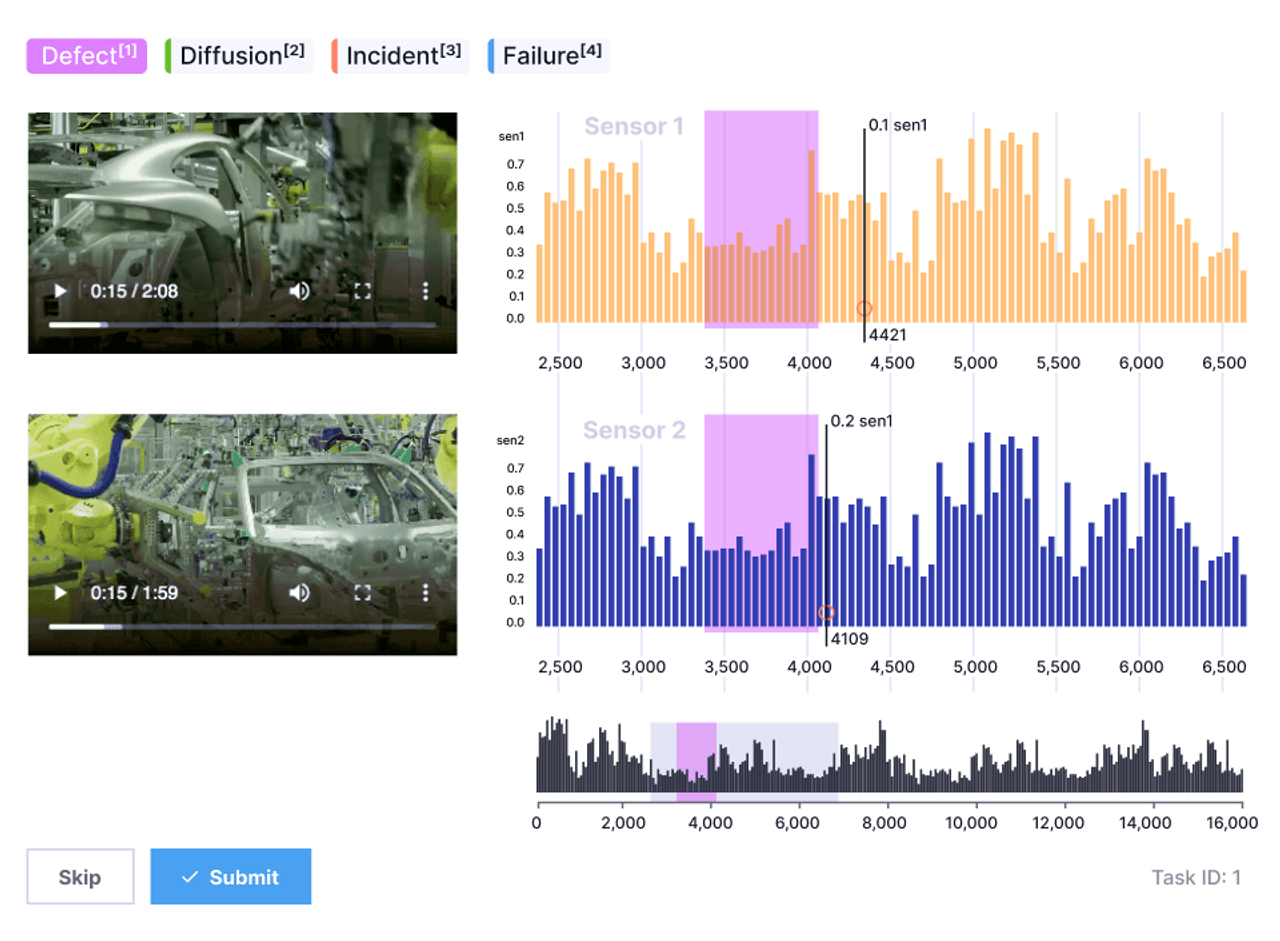

Robots, Sensors, IoT Devices

Classification

Put time series into categories

Segmentation

Identify regions relevant to the activity type you're building your ML algorithm for

Event Recognition

Label single events on plots of time series data


Multi-Domain Applications

Dialogue Processing

Call center recordings can be transcribed and processed as text at the same time

Optical Character Recognition

Put an image and text right next to each other

Time Series with Reference

Use video or audio streams as a reference to segment time series data more easily


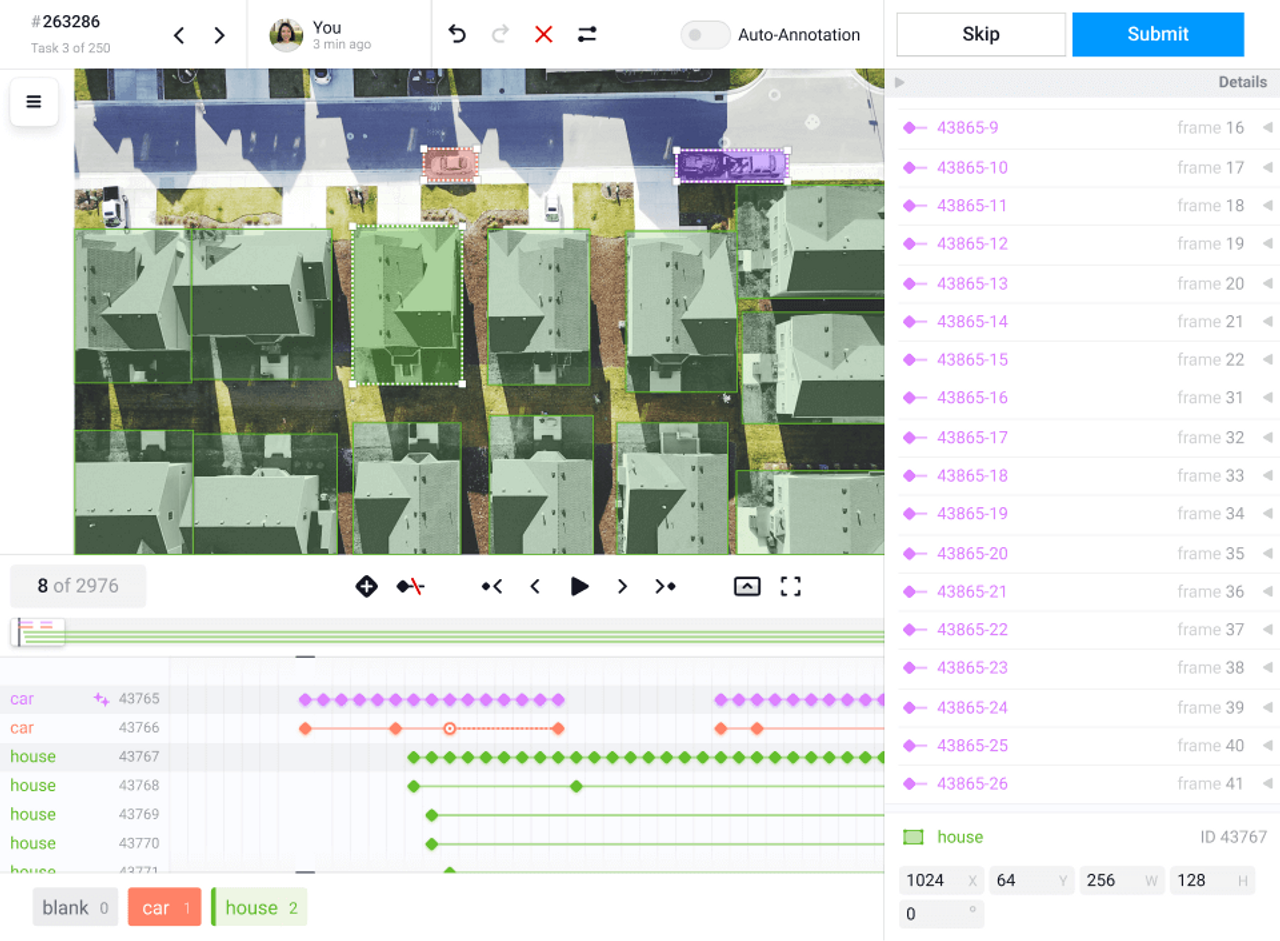

Video

Classification

Put videos into categories

Object Tracking

Label and track multiple objects frame-by-frame

Assisted Labeling

Add keyframes and automatically interpolate bounding boxes between keyframes
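To illustrate the idea of keyframe interpolation, here is a minimal sketch that linearly interpolates a bounding box between two keyframes. Linear interpolation is an assumption made for this example; the page does not state which method Label Studio uses. The `interpolate_box` helper and the dictionary layout are hypothetical.

```python
def interpolate_box(start, end, frame):
    """Linearly interpolate a box between two keyframes.

    `start` and `end` are dicts with keys: frame, x, y, width, height.
    This is an illustrative helper, not Label Studio's implementation.
    """
    f0, f1 = start["frame"], end["frame"]
    if f0 >= f1:
        raise ValueError("end keyframe must come after start keyframe")
    if not f0 <= frame <= f1:
        raise ValueError("frame must lie between the two keyframes")
    t = (frame - f0) / (f1 - f0)  # 0.0 at the start keyframe, 1.0 at the end
    return {
        key: start[key] + t * (end[key] - start[key])
        for key in ("x", "y", "width", "height")
    }


first = {"frame": 1, "x": 10.0, "y": 10.0, "width": 20.0, "height": 20.0}
last = {"frame": 11, "x": 30.0, "y": 50.0, "width": 20.0, "height": 40.0}

# Frame 6 is halfway between frame 1 and frame 11.
middle = interpolate_box(first, last, frame=6)
print(middle)
```

With this approach an annotator only needs to place keyframes where the motion changes; the frames in between are filled in automatically.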


Flexible and configurable

Configurable layouts and templates adapt to your dataset and workflow.
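Labeling layouts are described with an XML labeling configuration. As a rough sketch, the snippet below generates a simple image classification layout from a list of labels. The `View`, `Image`, `Choices`, and `Choice` tags are Label Studio tags; the helper name and the `image` / `choice` control names are assumptions chosen for this example.

```python
import xml.etree.ElementTree as ET


def build_image_classification_config(labels):
    """Return a labeling config string for single-image classification.

    Hypothetical helper: "$image" refers to the "image" key expected
    in each task's data.
    """
    view = ET.Element("View")
    ET.SubElement(view, "Image", name="image", value="$image")
    choices = ET.SubElement(view, "Choices", name="choice", toName="image")
    for label in labels:
        ET.SubElement(choices, "Choice", value=label)
    return ET.tostring(view, encoding="unicode")


config = build_image_classification_config(["Cat", "Dog"])
print(config)
```

Generating the configuration from a label list keeps the layout in sync with a taxonomy maintained elsewhere.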

Integrate with your ML/AI pipeline

Webhooks, Python SDK and API allow you to authenticate, create projects, import tasks, manage model predictions, and more.
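As a sketch of what an API integration might look like, the snippet below prepares two HTTP requests with the Python standard library: one to create a project and one to import tasks. The base URL, token placeholder, project ID, and helper name are assumptions for illustration. The `Token` authorization scheme shown here applies to legacy access tokens and may differ in your version, so check the API reference for your installation. The requests are only built, not sent.

```python
import json
import urllib.request

BASE_URL = "http://localhost:8080"  # assumed local instance
API_TOKEN = "YOUR_API_TOKEN"        # placeholder, replace with a real token


def build_post(path, payload):
    """Build (but do not send) an authenticated JSON POST request."""
    return urllib.request.Request(
        url=BASE_URL.rstrip("/") + path,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Token {API_TOKEN}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Create a project with a minimal text classification layout.
create_project = build_post("/api/projects", {
    "title": "Demo project",
    "label_config": (
        '<View><Text name="text" value="$text"/>'
        '<Choices name="sentiment" toName="text">'
        '<Choice value="Positive"/><Choice value="Negative"/>'
        "</Choices></View>"
    ),
})

# Import two tasks into project 1 (the ID is hypothetical).
import_tasks = build_post("/api/projects/1/import", [
    {"data": {"text": "Great product"}},
    {"data": {"text": "Not what I expected"}},
])

# To actually send a request against a running instance:
# with urllib.request.urlopen(create_project) as response:
#     print(json.load(response))
```

The official Python SDK wraps these endpoints and is usually the more convenient choice; raw requests are shown here only to make the flow explicit.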

ML-assisted labeling

Save time by using predictions to assist your labeling process with ML backend integration.
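Predictions can also be attached to tasks at import time as pre-annotations. The sketch below builds one such task for a text classification project. The `sentiment` and `text` names are assumptions that would have to match the `name` attributes in your labeling configuration, and the model version and score are made-up sample values.

```python
import json


def make_preannotated_task(text, label, score, model_version):
    """Build a task dict carrying one model prediction.

    Illustrative helper: "sentiment" and "text" are assumed control
    names and must match the project's labeling configuration.
    """
    return {
        "data": {"text": text},
        "predictions": [
            {
                "model_version": model_version,
                "score": score,
                "result": [
                    {
                        "from_name": "sentiment",
                        "to_name": "text",
                        "type": "choices",
                        "value": {"choices": [label]},
                    }
                ],
            }
        ],
    }


task = make_preannotated_task(
    "Great product", "Positive", score=0.92, model_version="demo-v1"
)
print(json.dumps(task, indent=2))
```

Annotators then review and correct the suggested label instead of starting from an empty task.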

Connect your cloud storage

Connect to cloud object storage such as S3 and GCP and label your data directly where it lives.

Explore & understand your data

Prepare and manage your dataset in our Data Manager using advanced filters.

Multiple projects and users

Support multiple projects, use cases and data types in one platform.

From the Blog


Learn how to connect YOLO26 to a Label Studio project using the YOLO ML Backend so annotators can start from model predictions and focus on review and correction instead of drawing boxes from scratch.

Micaela Kaplan

February 12, 2026


How to Scale Evaluation for RAG and Agent Workflows

A practical workflow for evaluating RAG and agent systems consistently through prompt, model, and workflow changes.

HumanSignal Team

January 23, 2026


Building the New Human Evaluation Layer for AI and Agentic Systems

Programmable. Embeddable. Multimodal. Meet the new evaluation engine powering custom interfaces for AI and agentic systems.

Michael Malyuk

January 14, 2026

Trusted by companies large and small

Global Community

Join the largest community of data scientists working to improve their models.