Understanding Audio Classification: Everything You Need to Know

If you are paying close attention to the news around Sequoia’s ‘Generative AI Market Map,’ you’ll see that audio and speech have started gaining a presence in this very nascent category. A significant aspect of generative AI development is focused on speech generation, which is heavily dependent on being able to synthesize and imitate emotions and expressions in human speech. So how can these apps understand prompts and find suitable audio samples to generate these realistic-sounding synthetic voices? At the heart of these apps, among other techniques at play, is one core technique: Audio Classification. Let’s take a look at audio classification and discuss the various use cases that can benefit from this technique.

What is Audio Classification?

In simple terms, audio classification refers to the ability to listen to audio sources and analyze them enough to sort the audio data into different categories. The ability to analyze and classify audio is very similar to how we use machine learning to organize text-based data to determine intent or sentiment. However, there are several added nuances in audio classification, making analyzing, labeling, and categorizing audio and voice-based data more challenging than text-based data sets. We will provide a sneak peek into how audio classification is done, but before we do that, let us look at why you need this technique with a simple example.

Let’s say you are a movie and TV series enthusiast. Whenever you go to a friend’s place for dinner and hear a song that’s playing that seems familiar, you would like to know the song and the artist who composed it. Now most iOS and Android phones can automatically identify these through a built-in interface, but apps like Shazam can also do it as a stand-alone app. When you use an app like Shazam, among the different techniques it uses behind the scenes, it also uses audio snippets or ‘fingerprints’ that have been classified and labeled for song identification.

How Audio Classification Works

Now that we have established an example from our everyday lives, let's break down how audio is ‘classified’ for an app like Shazam to work.

Collate the initial data set with required metadata

Continuing our Shazam example, we would need to first collate our audio data sets with as much informative metadata about the nature of these audio files as possible, for instance, instrumental, voice-over audio, and in-movie audio. These audio files might have the necessary metadata already embedded, or we might need to collect information manually about these audio files.

Assign and annotate labels to data set

We then assign and annotate these files using data labels. You could manually assign labels to each file in the data set or work with your team to divide the data labeling effort using a collaborative data labeling platform like Label Studio. We have a readily available audio classification labeling templates to get your team started.

Resize audio data and create spectrograms

We then pre-process these files in the data set and apply transformations to visualize them as Mel spectrograms. In simple terms, these spectrograms are a visual representation of the waveforms in the audio data. If you are familiar with how image files are processed and classified, you are already acquainted with these pre-processing steps and transformations, as these functions work on the visual representation rather than on the audio file itself.

Create models to identify and classify data

Next, we create training and validation learning models by loading our first batch of audio files. These run through a classification process similar to image classification and provide predictions for each label. The intent here is to provide adequate data to train your machine learning model to deliver accurate results. You would also have an opportunity to review these initial classification efforts and tweak the model to let it identify more accurately in the future.

Connect to your data workflows

Last, we then plug our model into an AI workflow to a more extensive data set, which keeps sending a fresh set of audio inputs. We also connect it to a reporting source, where it can display the results of the data set.

The role of data labeling in audio classification

Data labeling for any classification is prone to human error and inherent biases. These issues could be even more evident when performing classification on audio data sets because the way we might interpret and identify a particular sound or audio heavily depends on our awareness of the nature of the data. In addition, the team might also be prone to language or environment biases while labeling the data, so bringing in subject matter experts to collaborate with your data team might be immensely helpful in reducing these biases. For a detailed reference, we have put together a guide for everything you need to be mindful of, while labeling data in audio classification.

Different Use Cases for Audio Classification

Audio classification has wide-ranging applications in a variety of disciplines across industries. We focus on the most valuable use cases for your reference below.

Acoustic data classification

Audio classification is useful in security and monitoring scenarios to understand outlier patterns and provide prompt remediation. For example, understanding the typical sound patterns within a given bird or animal sanctuary can help identify if new species are entering the area and alert sanctuary officials to take note.

Environmental sound classification

Audio classification could also be used in urban settings like hospitals or industrial areas. For example, if unfamiliar sounds are discovered in mission-critical equipment or from various sensors in an urgent care unit, staff could be notified to address the situation immediately.

Natural language utterance

As is widely prevalent with today’s language learning apps like Duolingo, spoken language detection and translation are primarily possible thanks to audio classification techniques. However, these could be more widely used in helping marginalized communities interact in urban and international settings to build communities that can connect and understand people from different worldwide regions.

Audio Classification: Implementation Challenges

As mentioned earlier in this article, the sheer volume and resources required for audio classification can make this a logistical challenge for product and engineering teams. Apart from the sheer amount of resources needed from a storage and computing standpoint, the critical barrier to entry is the ability to grapple with the possibilities that classified audio can bring to the front. The ability to enable new applications with audio classification techniques heavily depends on the proper awareness and understanding of best practices in labeling, tweaking, and maintaining the quality of these data sets. For your reference, this article could provide a great starting point in understanding what it would take for your team to build an efficient data labeling process in your organization.

Audio classification using Label Studio

Label Studio is a fully-collaborative data labeling platform that enables audio data classification and labeling. If you are getting started with audio classification, the following features in the platform should help you get started on the right foot.

- Easily integrates with your ML/AI pipeline

- Uses predictions to assist and accelerate your labeling process with ML backend integration

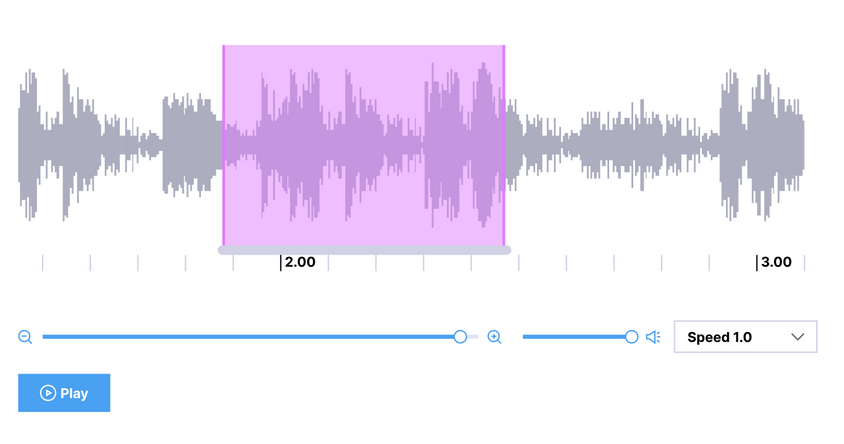

- Allows you to perform audio segmentation labeling

- Is collaborative by design to work with your team for quick turnaround times on labeling training data

Are you interested in learning more? Take Label Studio for a spin to experience what makes us the most popular open-source data labeling platform for audio classification.