A Guide to Augmented Language Models

Large language models (LLMs) are a leap forward in natural language understanding, but even the most advanced models have well-known limits. On their own, they retain nothing once a session ends. They can’t look up current information. They struggle to reason reliably across many steps, and they can’t call external tools. And they can’t improve through feedback without further training.

That’s where augmented language models come in.

Augmentation isn’t a single technique; it’s a broad set of strategies for giving LLMs access to knowledge, context, actions, and learning mechanisms beyond what’s captured in their pretraining. Think of it as infrastructure for turning a general-purpose language model into a specialized, useful system.

Let’s walk through the most common forms of augmentation in practice today, when to use them, and how they work together.

Teaching Models to Retrieve

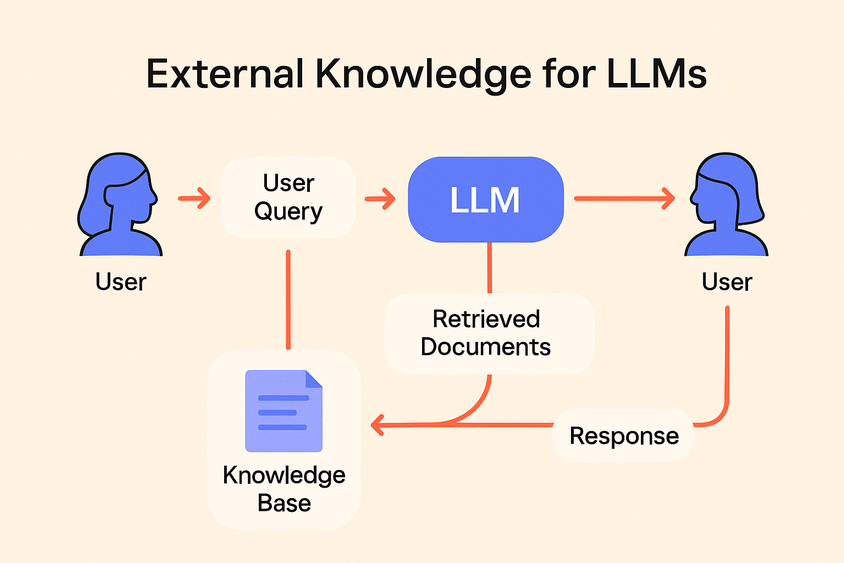

One of the simplest and most widely adopted forms of augmentation is retrieval: giving the model access to an external knowledge base at inference time. Instead of asking a model to recall a fact from its frozen weights, you let it look up relevant documents and include them in the prompt.

This is the core idea behind Retrieval-Augmented Generation (RAG). The model doesn’t need to be trained on everything; it just needs to know how to read.

Say you’re building a legal assistant. Laws change constantly, and a static model won’t have the latest updates. With RAG, you can index court rulings, statutes, and case files, and let the model retrieve that data on the fly and ground its responses in it. It’s not just about accuracy; it’s about adaptability.
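To make the retrieve-then-read flow concrete, here is a minimal sketch in Python. It assumes a tiny in-memory corpus and a bag-of-words cosine similarity as the ranking function. Production systems typically use learned embeddings and a vector index instead, and the corpus text, function names, and prompt wording below are all hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical toy corpus; a real legal assistant would index far more text.
DOCUMENTS = [
    "Statute 12 sets the notice period for lease termination at 60 days.",
    "The appeals court ruled that electronic signatures are valid on contracts.",
    "Case file 7 concerns a dispute over commercial property boundaries.",
]

def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def retrieve(query, documents, k=1):
    """Return up to k documents that share vocabulary with the query."""
    query_vec = Counter(tokenize(query))
    scored = [(cosine(query_vec, Counter(tokenize(doc))), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:k] if score > 0]

def build_prompt(query, documents, k=1):
    """Place the retrieved passages in the prompt ahead of the question."""
    context = "\n".join(f"- {passage}" for passage in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )
```

The string returned by `build_prompt` is what would be sent to the model. Updating the assistant's knowledge then means updating `DOCUMENTS`, not retraining.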

Read more about how RAG compares to memory augmentation →

Giving Models a Sense of Memory

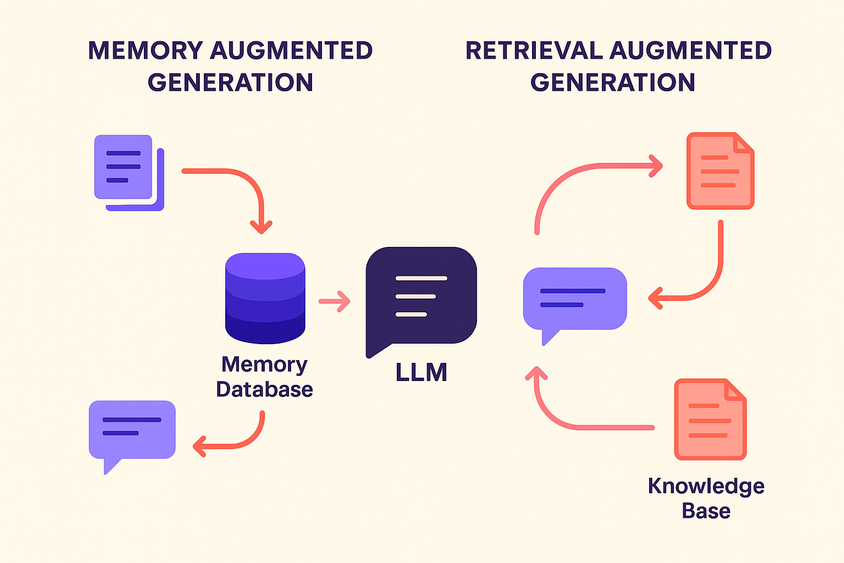

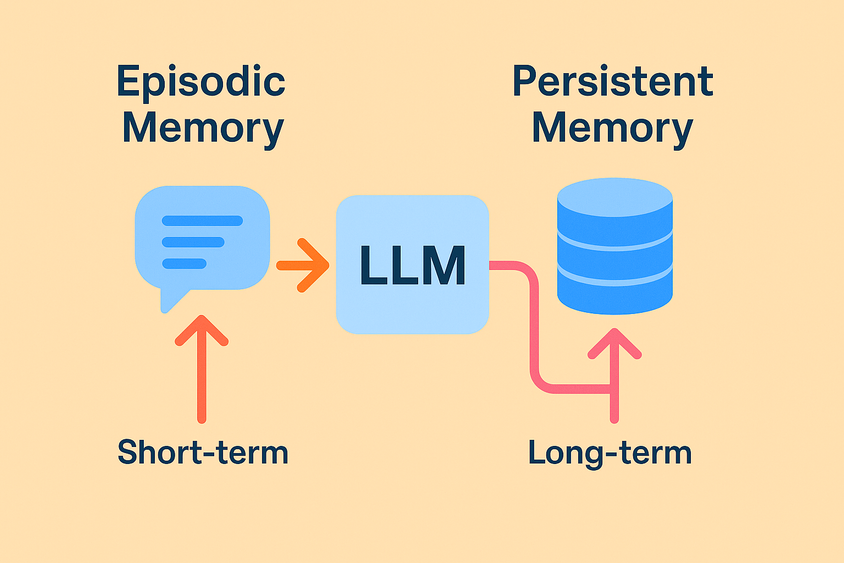

If retrieval is about facts, memory augmentation is about continuity. Instead of pulling from a static knowledge base, memory-augmented systems store and recall a model’s previous interactions: what the user asked, how the model responded, and what actions were taken.

This is especially useful in personalized or long-running systems. A tutoring agent might remember which topics a student struggled with. A product assistant could recall user preferences without starting from scratch every time. These memories can be retrieved and embedded into future prompts to create more consistent, contextual, and personal experiences.

The key distinction? While RAG gives the model access to external documents, memory gives it access to its own history.
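As a rough illustration of memory augmentation, the sketch below stores past exchanges and prepends the most recent ones to the next prompt. The class name, the `max_turns` window, and the prompt layout are assumptions made for this example. Real systems often summarize older history or select memories by relevance, not just recency.

```python
from dataclasses import dataclass, field

@dataclass
class Interaction:
    user: str
    assistant: str

@dataclass
class ConversationMemory:
    """Hypothetical store that keeps past turns and recalls the latest ones."""
    max_turns: int = 3
    turns: list = field(default_factory=list)

    def remember(self, user, assistant):
        """Record one completed exchange."""
        self.turns.append(Interaction(user, assistant))

    def recall(self):
        """Return at most max_turns of the most recent exchanges."""
        if self.max_turns <= 0:
            return []
        return self.turns[-self.max_turns:]

    def build_prompt(self, new_message):
        """Prepend recalled history to the new user message."""
        history = "\n".join(
            f"User: {turn.user}\nAssistant: {turn.assistant}" for turn in self.recall()
        )
        prefix = f"Previous interactions:\n{history}\n\n" if history else ""
        return f"{prefix}User: {new_message}"
```

A tutoring agent could call `remember` after each exchange and `build_prompt` before the next one, so the model sees which topics came up earlier without any change to its weights.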

Letting Models Use Tools

Language models are good at predicting text, but they can’t directly perform actions. Tool augmentation changes that by giving models the ability to call APIs, use calculators, execute code, or take steps in an environment.

This is often described as agent-based reasoning. The model acts more like a planner or orchestrator, deciding when and how to use tools to complete a task. For example, an agent might:

- Look up a restaurant via a search API,

- Calculate a delivery ETA using a maps service,

- Or run a code snippet to transform a dataset.

It’s not about turning the model into a search engine or script runner. It’s about letting the model know when it needs help and giving it ways to get that help.
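One common way to wire this up is to have the model emit a structured tool request that the surrounding code parses and executes. The sketch below assumes the model signals a tool call with a JSON object of the form `{"tool": ..., "arguments": ...}`. That format, the two toy tools, and the function names are hypothetical; real frameworks each define their own schema.

```python
import json

def add(a, b):
    """Toy calculator tool."""
    return a + b

def word_count(text):
    """Toy text-processing tool."""
    return len(text.split())

# Registry of tools the model is allowed to call (hypothetical names).
TOOLS = {"add": add, "word_count": word_count}

def handle_model_output(output):
    """Run a tool if the output is a valid tool request, else pass it through."""
    try:
        call = json.loads(output)
    except json.JSONDecodeError:
        # Plain text: the model answered directly without asking for help.
        return {"type": "answer", "content": output}

    tool_name = call.get("tool") if isinstance(call, dict) else None
    if not isinstance(tool_name, str) or tool_name not in TOOLS:
        # Unknown or malformed request: treat the output as an ordinary answer.
        return {"type": "answer", "content": output}

    result = TOOLS[tool_name](**call.get("arguments", {}))
    return {"type": "tool_result", "tool": tool_name, "content": result}
```

In a full agent loop, a `tool_result` would be appended to the conversation and sent back to the model so it can decide on the next step. The model chooses when to ask for a tool, and the runtime controls what actually gets executed.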

Helping Models Learn from Feedback

Finally, many production systems don’t stop once a model generates a response—they track how it performed. Was the answer accepted? Did a human correct it? Did the model misunderstand the question?

Feedback loops, often implemented via evaluations, scoring, or fine-tuning, are how augmented models improve over time. This might involve:

- Re-ranking responses based on quality scores,

- Learning from labeled corrections,

- Or surfacing examples for retraining or RLHF.
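Two of these ideas can be sketched in a few lines of Python: re-ranking candidate responses by a quality score, and collecting human-corrected examples for later retraining. The record fields, the 0 to 1 score range, and the threshold are assumptions for illustration. In practice the score would come from an evaluator, a reward model, or user ratings.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class FeedbackRecord:
    prompt: str
    response: str
    score: float                      # hypothetical quality score in [0, 1]
    correction: Optional[str] = None  # human-edited answer, if one exists

def rerank(candidates, score_fn):
    """Order candidate responses from highest to lowest score."""
    return sorted(candidates, key=score_fn, reverse=True)

def retraining_examples(records, threshold=0.5):
    """Collect (prompt, corrected answer) pairs for low-scoring responses."""
    return [
        (record.prompt, record.correction)
        for record in records
        if record.correction is not None and record.score < threshold
    ]
```

Here `score_fn` is any callable that maps a response to a number. The pairs returned by `retraining_examples` are the kind of data that could feed a later fine-tuning or review step.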

Feedback isn’t just about better models. It’s also about trust. It gives teams the ability to monitor, refine, and continuously validate system behavior against real-world expectations.

Putting It All Together

Augmentation isn’t an either-or decision. In fact, the most effective systems combine multiple techniques:

A customer support chatbot might retrieve relevant docs with RAG, remember a user's prior issues with memory, escalate to a live agent using a tool API, and log feedback to improve future responses.
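The support-chatbot scenario can be outlined as a single request handler. This is a deliberately simplified sketch: keyword lookup stands in for RAG, a per-user list stands in for memory, `escalate_to_agent` stands in for a real tool API, and the feedback log is a plain list. All names and data are hypothetical, and the model call itself is omitted.

```python
import re
from collections import defaultdict

# Hypothetical help-center snippets keyed by topic keyword.
DOCS = {
    "refund": "Refunds are issued within 5 business days.",
    "password": "Reset your password from the login page.",
}
memory = defaultdict(list)  # user_id -> earlier messages from that user
feedback_log = []           # one entry per turn, rated later by users or reviewers

def escalate_to_agent(user_id):
    """Stand-in for a tool API that opens a ticket with a live agent."""
    return f"ticket-for-{user_id}"

def handle(user_id, message):
    """Gather retrieval, memory, and tool results for one support turn."""
    words = re.findall(r"[a-z]+", message.lower())
    docs = [text for keyword, text in DOCS.items() if keyword in words]  # retrieval
    history = list(memory[user_id])                                      # memory
    ticket = escalate_to_agent(user_id) if "human" in words else None    # tool use
    memory[user_id].append(message)
    turn = {"user": user_id, "docs": docs, "history": history, "ticket": ticket}
    feedback_log.append({"turn": turn, "rating": None})                  # feedback
    return turn
```

The returned `turn` holds everything that would go into the model's prompt for that request. Each layer can later be swapped for a fuller implementation without changing the overall shape.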

These systems don’t just talk; they reason, adapt, and improve.

Want to Go Deeper?

If you're just starting to explore augmentation, a good first step is understanding how memory and retrieval differ in practice. They may sound similar, but they solve very different problems.

👉 Memory vs Retrieval Augmented Generation: What’s the Difference?

You can also explore these related topics:

- A Guide to Evaluations in AI

- How to Monitor AI Model Performance

- Human-in-the-Loop Systems: Why People Still Matter