How to choose a data labeling platform with integrated project management tools

A data labeling platform should do more than support annotation. It should help teams run labeling work consistently, especially as projects multiply, contributors rotate, and quality expectations tighten. The strongest platforms make day-to-day coordination feel simple while keeping enough structure in place to avoid rework and drift.

What to look for in an integrated labeling and project workflow

Integrated project management means the platform supports the operational reality of labeling. That includes how projects are created, how work is assigned and reviewed, how quality is enforced, and how progress is tracked. The goal is predictable execution: fewer handoffs, less manual tracking, and clearer visibility into where the work stands.

Project setup that stays consistent

Most teams label in iterations. New batches arrive, taxonomies evolve, and similar projects get repeated across teams or domains. A platform should make project setup easy to standardize, so every project starts from a known baseline and later changes do not create confusion. Label Studio's documentation on project setup and management offers a concrete example of how these concepts fit inside the labeling workflow.
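One way to picture "starting from a known baseline" is a versioned template that each new project copies. The sketch below is a minimal illustration, not any platform's API: `ProjectTemplate`, `new_project`, and `evolve_taxonomy` are hypothetical names, and the fields are assumptions chosen for the example.

```python
from dataclasses import dataclass, field, replace


@dataclass(frozen=True)
class ProjectTemplate:
    """Hypothetical baseline that every new labeling project starts from."""
    version: int
    labels: tuple[str, ...]
    instructions: str


@dataclass
class Project:
    name: str
    batch: str
    template_version: int  # records which baseline this project was created from
    labels: list[str] = field(default_factory=list)
    instructions: str = ""


def new_project(template: ProjectTemplate, name: str, batch: str) -> Project:
    # Copy the baseline so later edits to one project do not leak into others.
    return Project(
        name=name,
        batch=batch,
        template_version=template.version,
        labels=list(template.labels),
        instructions=template.instructions,
    )


def evolve_taxonomy(template: ProjectTemplate, new_labels: list[str]) -> ProjectTemplate:
    # A taxonomy change yields a new template version instead of mutating the old one.
    return replace(template, version=template.version + 1, labels=tuple(new_labels))


base = ProjectTemplate(version=1, labels=("cat", "dog"), instructions="Label the main animal.")
p1 = new_project(base, "pets-batch-01", "batch-01")
v2 = evolve_taxonomy(base, ["cat", "dog", "bird"])
p2 = new_project(v2, "pets-batch-02", "batch-02")
```

Because the template is frozen and carries a version number, anyone looking at `p1` or `p2` can tell which taxonomy it was built against.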

Task organization that supports real operations

Project management shows up in how tasks are handled once the project is live. Look for task organization that makes it easy to prioritize the right work, segment tasks by metadata, and adjust routing when priorities shift. Strong task views reduce the need for external spreadsheets because teams can see what is happening inside the workflow.
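The three operations named above (prioritizing, segmenting by metadata, and rerouting) can be sketched in a few lines. This is a minimal illustration under stated assumptions: the `Task` fields, the convention that a lower `priority` number means more urgent, and the queue names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    task_id: str
    priority: int  # assumption: lower number = more urgent
    metadata: dict[str, str] = field(default_factory=dict)
    queue: str = "default"


def segment(tasks, **filters):
    """Return tasks whose metadata matches every key=value filter."""
    return [t for t in tasks if all(t.metadata.get(k) == v for k, v in filters.items())]


def next_batch(tasks, size):
    """Pick the most urgent tasks first; ties broken by task_id for stable ordering."""
    return sorted(tasks, key=lambda t: (t.priority, t.task_id))[:size]


def reroute(tasks, queue, **filters):
    """Move every task in a metadata segment to another queue; return how many moved."""
    moved = segment(tasks, **filters)
    for t in moved:
        t.queue = queue
    return len(moved)


tasks = [
    Task("t1", 2, {"language": "en", "source": "web"}),
    Task("t2", 1, {"language": "de", "source": "web"}),
    Task("t3", 1, {"language": "en", "source": "app"}),
]
english = segment(tasks, language="en")                   # t1, t3
batch = next_batch(english, size=1)                       # t3 (priority 1)
moved = reroute(tasks, "urgent-review", source="web")     # moves t1 and t2
```

Keeping these views inside the workflow is what removes the need for a parallel spreadsheet: the segment and the queue are properties of the task itself.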

Clear roles, permissions, and review stages

As soon as more than a few people are involved, roles become part of quality control. A platform should support separation between annotators, reviewers, and project owners, with permissions that prevent accidental configuration changes. Review should also be a defined flow, with clear handoffs and rework loops that keep context attached to the task.

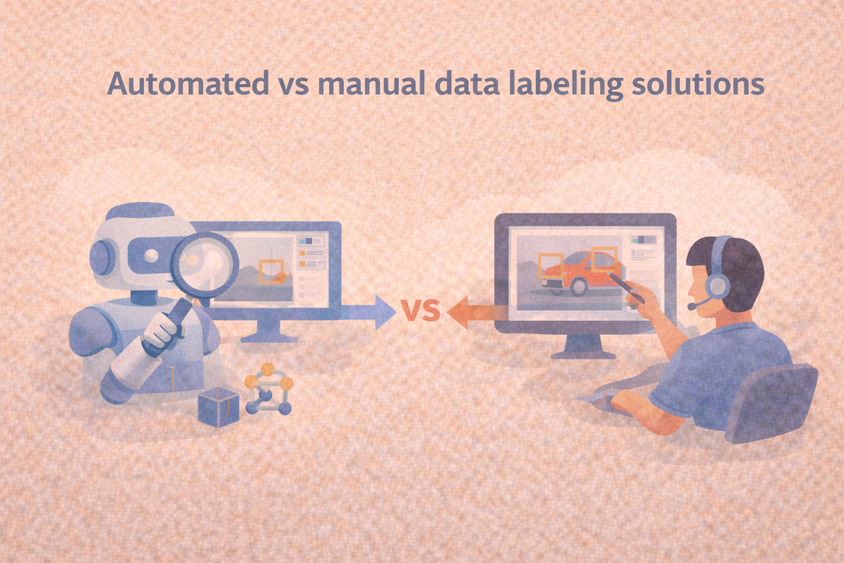

Quality controls that create feedback loops

Quality improves when errors lead to better guidance. Look for workflows that make it easy to spot recurring mistakes, update instructions, and confirm the changes are working. Agreement checks, validation rules, and structured review can all help, but the key signal is whether the platform turns quality issues into actionable feedback instead of one-off rejections.
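Agreement checks and "spotting recurring mistakes" can both start from the same pair of label lists. The sketch below uses Cohen's kappa, one common chance-corrected agreement statistic for two annotators (platforms may use other measures), and counts which label confusions repeat most often. Function names and sample data are illustrative.

```python
from collections import Counter


def cohens_kappa(a, b):
    """Cohen's kappa for two annotators labeling the same items."""
    if len(a) != len(b) or not a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    count_a, count_b = Counter(a), Counter(b)
    # Agreement expected by chance, from each annotator's label frequencies.
    expected = sum(count_a[label] * count_b[label] for label in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)


def recurring_disagreements(a, b, top=3):
    """Most frequent (label_a, label_b) confusions: candidates for guideline updates."""
    return Counter((x, y) for x, y in zip(a, b) if x != y).most_common(top)


ann_1 = ["cat", "cat", "dog", "dog", "bird", "cat"]
ann_2 = ["cat", "dog", "dog", "dog", "cat", "cat"]
kappa = cohens_kappa(ann_1, ann_2)                 # 3/7, about 0.43
confusions = recurring_disagreements(ann_1, ann_2)
```

Tracking the confusion counts before and after an instruction update is one way to confirm that a guideline change is actually working.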

Reporting that answers operational questions quickly

Progress reporting should do more than count completed tasks. It should help you understand what is blocked, where review is slowing down, and which task types cause confusion. When reporting is clear, project leads can adjust staffing, update instructions, or reshape task queues early instead of reacting at the end.
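The questions above (what is blocked, where review is slow, which task types cause confusion) can be answered from ordinary task records. This minimal sketch assumes hypothetical record fields (`type`, `status`, `hours_in_review`, `rejections`) and a hypothetical 24-hour review target; real platforms expose different data.

```python
from collections import defaultdict
from statistics import median

# Hypothetical task records exported from a labeling workflow.
tasks = [
    {"type": "bbox", "status": "in_review", "hours_in_review": 30, "rejections": 1},
    {"type": "bbox", "status": "accepted", "hours_in_review": 4, "rejections": 0},
    {"type": "ner", "status": "blocked", "hours_in_review": 0, "rejections": 2},
    {"type": "ner", "status": "accepted", "hours_in_review": 6, "rejections": 1},
]


def bottleneck_report(tasks, review_target_hours=24):
    by_type = defaultdict(list)
    for t in tasks:
        by_type[t["type"]].append(t)
    report = {}
    for task_type, group in by_type.items():
        # Blocked tasks are excluded from review timing because they never reached review.
        review_hours = [t["hours_in_review"] for t in group if t["status"] != "blocked"]
        report[task_type] = {
            "blocked": sum(t["status"] == "blocked" for t in group),
            "median_review_hours": median(review_hours) if review_hours else None,
            "over_target": sum(h > review_target_hours for h in review_hours),
            # Share of tasks needing at least one rework cycle: a confusion signal.
            "rework_rate": sum(t["rejections"] > 0 for t in group) / len(group),
        }
    return report


report = bottleneck_report(tasks)
```

Grouping by task type rather than reporting one global number is the design choice that makes the output actionable: it points at which instructions or queues need attention.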

Data connectivity that preserves traceability

Labeling is part of a larger workflow. Imports, exports, and metadata handling determine whether datasets can be reproduced and audited later. A platform that preserves context and keeps outputs traceable supports iteration across training and evaluation cycles. For a broader view of end-to-end workflow themes like collaboration, quality, and governance, Label Studio provides a useful reference point.
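Traceability often comes down to exporting labels together with a manifest that records where they came from and a content hash. The sketch below is an assumption-laden illustration: the manifest fields and identifiers such as `dataset_version` are hypothetical, and the deterministic JSON serialization is one simple way to make the hash reproducible.

```python
import hashlib
import json


def export_with_manifest(annotations, dataset_version, template_version):
    """Serialize annotations deterministically and attach a manifest for later audits."""
    # sort_keys and fixed separators make the serialized form, and so the hash, reproducible.
    payload = json.dumps(annotations, sort_keys=True, separators=(",", ":"))
    manifest = {
        "dataset_version": dataset_version,    # hypothetical source identifier
        "template_version": template_version,  # which project baseline produced the labels
        "num_items": len(annotations),
        "sha256": hashlib.sha256(payload.encode("utf-8")).hexdigest(),
    }
    return payload, manifest


_, m1 = export_with_manifest([{"id": "img-1", "label": "cat", "annotator": "a7"}], "raw-v3", 2)
_, m1_again = export_with_manifest([{"annotator": "a7", "label": "cat", "id": "img-1"}], "raw-v3", 2)
_, m2 = export_with_manifest([{"id": "img-1", "label": "dog", "annotator": "a7"}], "raw-v3", 2)
```

Identical labels produce an identical hash regardless of key order, while a single changed label produces a different one, so a training run can be tied back to the exact export it used.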

Why workflow matters as much as labels

Choosing a data labeling platform with integrated project management comes down to operational fit. Prioritize consistent project setup, practical task coordination, a clear review flow, quality feedback loops, and reporting that reveals bottlenecks early. When those foundations are in place, labeling becomes easier to scale and easier to trust.

Frequently Asked Questions

What should a small team prioritize first?

Start with project setup, task coordination, and a review flow that fits your team. These elements shape consistency early and reduce rework later.

How can a team tell whether project management will scale?

Look at how easily projects can be repeated, how smoothly rework is handled, and whether reporting provides a clear view of progress across multiple workstreams.

Do review workflows matter if the team trusts annotators?

Review workflows still help. They provide consistent checks, surface ambiguous cases, and create a feedback loop that improves guidelines.

What causes the most operational overhead in labeling projects?

Manual coordination around assignment, rework, and progress tracking tends to create the most overhead, especially once multiple projects run in parallel.

How do permissions affect quality?

Permissions protect configuration and prevent accidental changes. Clear roles reduce confusion about who can adjust labels, instructions, or exports.