How to Evaluate AI Models Effectively

The AI landscape moves fast, and evaluation is the difference between a model that looks strong in a notebook and one that holds up in production. Whether you’re building a recommendation engine, a computer vision pipeline, or a language model, AI model evaluation helps you measure performance, diagnose weaknesses, and build trust in the outputs.

For a broader overview of evaluation methods (metrics, human review, LLM judges, and hybrid strategies), see .

So, how do you evaluate AI models effectively?

1) Define What “Good” Means for Your Use Case

Every model serves a different purpose, and “good” depends on the decision your system supports. Fraud detection often prioritizes recall to catch suspicious transactions, while content moderation may prioritize precision to reduce false positives. Recommendation systems focus on ranking quality and downstream engagement, and language models often need to balance helpfulness with safety and factuality.
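
As a rough illustration of prioritizing recall, the sketch below picks the highest score threshold that still meets a target recall. Raising the threshold can only remove flagged items, so this choice keeps false positives as low as possible under the recall constraint. The function names, the `scores`/`labels` inputs, and the toy data are hypothetical.

```python
def precision_recall_at(scores, labels, threshold):
    """Precision and recall when items with score >= threshold are flagged (label 1 = positive)."""
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def threshold_for_recall(scores, labels, min_recall):
    """Highest threshold whose recall is at least min_recall.

    Recall only drops as the threshold rises, so walk the positive scores
    from highest to lowest and stop at the first one that meets the target.
    """
    if not 0.0 < min_recall <= 1.0:
        raise ValueError("min_recall must be in (0, 1]")
    pos_scores = sorted((s for s, y in zip(scores, labels) if y == 1), reverse=True)
    if not pos_scores:
        raise ValueError("need at least one positive example")
    for caught, threshold in enumerate(pos_scores, start=1):
        if caught / len(pos_scores) >= min_recall:
            return threshold
    return pos_scores[-1]  # recall is 1.0 at the lowest positive score


# Toy fraud scores: label 1 = fraudulent transaction (hypothetical data).
scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.40, 0.30, 0.20]
labels = [1, 0, 1, 1, 0, 1, 0, 0]
t = threshold_for_recall(scores, labels, min_recall=0.75)
print(t, precision_recall_at(scores, labels, t))  # 0.7 (0.75, 0.75)
```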

Start by translating that into a small set of evaluation criteria tied to real outcomes. Define the primary goal, identify which failure modes are unacceptable, and set thresholds that indicate readiness for testing or release. When possible, evaluate by meaningful slices, such as regions, languages, device types, or key content categories, so you can see where performance breaks down.
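
One way to make thresholds and slices concrete is a small release gate that checks every slice against every criterion. This is a sketch; the `Criterion` class, the slice names, and the threshold values are hypothetical placeholders to replace with your own.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    metric: str       # name of a metric you already compute, e.g. "recall"
    min_value: float  # readiness threshold for that metric (hypothetical values below)


def release_gate(metrics_by_slice, criteria):
    """Return (slice, metric, observed) for every failed check; an empty list means ready."""
    failures = []
    for slice_name, metrics in metrics_by_slice.items():
        for c in criteria:
            observed = metrics.get(c.metric)
            # A missing metric counts as a failure so coverage gaps are not silently skipped.
            if observed is None or observed < c.min_value:
                failures.append((slice_name, c.metric, observed))
    return failures


criteria = [Criterion("recall", 0.90), Criterion("precision", 0.80)]
metrics_by_slice = {
    "en": {"recall": 0.92, "precision": 0.81},
    "de": {"recall": 0.84, "precision": 0.88},
    "mobile": {"recall": 0.91},  # precision was never computed for this slice
}
print(release_gate(metrics_by_slice, criteria))
# [('de', 'recall', 0.84), ('mobile', 'precision', None)]
```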

2) Choose the Right Metrics

Metrics should match your task, your data, and your risk profile. Accuracy alone can mislead on imbalanced datasets, and a single score can hide important failure modes.
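
A quick way to see why accuracy misleads on imbalanced data: with a 1% positive rate, a model that never predicts the positive class still reaches 99% accuracy while catching nothing. The helper below is a hypothetical plain-Python sketch; it reports 0.0 when a ratio's denominator is zero, which is one common convention.

```python
def summarize(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for binary labels (1 = positive)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# 10 positives out of 1,000; the "model" always predicts negative.
y_true = [1] * 10 + [0] * 990
y_pred = [0] * 1000
print(summarize(y_true, y_pred))
# {'accuracy': 0.99, 'precision': 0.0, 'recall': 0.0, 'f1': 0.0}
```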

For classification, teams often track precision, recall, and F1, and add ROC-AUC or PR-AUC when ranking quality matters. For computer vision, Intersection over Union (IoU) is common for detection tasks. For text generation, automated metrics like BLEU or ROUGE can provide a baseline signal, but they rarely capture whether outputs are correct, useful, or safe.
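
For detection, Intersection over Union (IoU) scores a predicted box against a ground-truth box as the area of their overlap divided by the area of their union. A minimal sketch, assuming axis-aligned boxes in `(x_min, y_min, x_max, y_max)` form; the 0.5 match threshold is a common convention (PASCAL VOC uses it, for example), not a universal rule.

```python
def box_iou(a, b):
    """Intersection over Union of two axis-aligned boxes (x_min, y_min, x_max, y_max)."""
    # Overlap rectangle; width or height clamps to 0 when the boxes don't intersect.
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


pred, truth = (0, 0, 2, 2), (1, 1, 3, 3)
iou = box_iou(pred, truth)
print(round(iou, 3), iou >= 0.5)  # 0.143 False: overlap too small to count as a match
```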

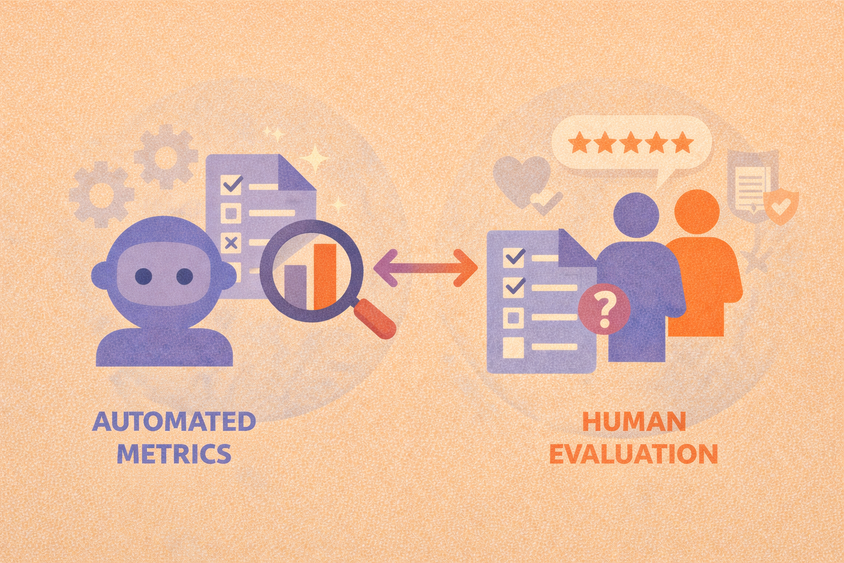

For language models in particular, it helps to complement automated metrics with checks for factuality and safety, along with a structured way to judge overall response quality.

If you want guidance on where metrics end and human review becomes necessary, see

3) Look Beyond Benchmarks

Benchmarks are helpful, but they can diverge from production reality. Domain shift, adversarial inputs, and new user behaviors often create failure modes that curated test sets don’t capture.

Strengthen your evaluation by adding domain-specific examples, reviewing long-tail cases, and doing lightweight manual error analysis to categorize what’s going wrong. Treat your evaluation set as something you maintain over time: version it, keep a stable regression subset, and update it intentionally as the product evolves.
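
To make versioning and a stable regression subset concrete, one option is to derive a version string from the content of the eval set and choose regression members by hashing each example's ID, so adding new examples never reshuffles the existing subset. Both helpers and the example schema (`id`, `input`, `label`) are hypothetical.

```python
import hashlib
import json


def dataset_version(examples):
    """Short content hash: any edit, addition, or reordering of examples changes it."""
    canonical = json.dumps(examples, sort_keys=True, ensure_ascii=False).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()[:12]


def in_regression_subset(example_id, fraction=0.2):
    """Deterministic membership: the same ID always gets the same answer."""
    digest = hashlib.sha256(example_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") / 2**32  # roughly uniform in [0, 1)
    return bucket < fraction


examples = [
    {"id": "ex-001", "input": "refund request, order never arrived", "label": "billing"},
    {"id": "ex-002", "input": "app crashes on login", "label": "bug"},
]
print(dataset_version(examples))
print([ex["id"] for ex in examples if in_regression_subset(ex["id"])])
```

Recording the version string alongside every evaluation run makes it clear which snapshot of the set a score came from.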

4) Use Human-in-the-Loop Feedback

For moderation, summarization, Q&A, and domain-specific classification, structured human review surfaces issues that automated metrics miss. Human evaluation scales more effectively when it’s repeatable: clear rubrics, consistent scoring criteria, and periodic calibration so reviewers stay aligned.
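
Calibration is easier to track with a number. One common choice (our assumption here, not something the process requires) is Cohen's kappa, which measures how often two reviewers agree beyond what their label frequencies would produce by chance. A minimal sketch for two reviewers scoring the same items:

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labeling the same items (any hashable labels)."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty list of items")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: probability both raters pick the same label independently.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both used one identical label throughout; kappa is undefined, treat as full agreement
    return (observed - expected) / (1 - expected)


reviewer_a = ["pass", "pass", "fail", "pass", "fail", "pass"]
reviewer_b = ["pass", "fail", "fail", "pass", "fail", "pass"]
print(round(cohens_kappa(reviewer_a, reviewer_b), 3))  # 0.667
```

Values near 1 indicate strong agreement; a kappa that falls between calibration rounds is a signal to revisit the rubric with reviewers.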

Many teams focus human review on targeted slices (edge cases, sensitive topics, new intents, and confusing examples) rather than trying to review everything. That approach keeps cost manageable while still surfacing the failure modes that matter.
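
A targeted review queue can be as simple as bucketing outputs by priority and filling a fixed review budget. This sketch assumes each item carries hypothetical `tags` and `confidence` fields and uses an arbitrary 0.6 confidence cutoff; the ordering (sensitive topics, then low confidence, then random spot checks) is one reasonable policy, not the only one.

```python
import random


def select_for_review(items, budget, sensitive_tags, low_conf=0.6, seed=0):
    """Fill a review budget: sensitive topics first, then low-confidence outputs,
    then a random spot check of everything else."""
    sensitive, uncertain, rest = [], [], []
    for item in items:
        if sensitive_tags & set(item["tags"]):
            sensitive.append(item)
        elif item["confidence"] < low_conf:
            uncertain.append(item)
        else:
            rest.append(item)
    uncertain.sort(key=lambda it: it["confidence"])  # least confident first
    random.Random(seed).shuffle(rest)  # seeded so the spot check is reproducible
    return (sensitive + uncertain + rest)[:budget]


items = [
    {"id": 1, "tags": ["medical"], "confidence": 0.90},
    {"id": 2, "tags": [], "confidence": 0.30},
    {"id": 3, "tags": [], "confidence": 0.95},
    {"id": 4, "tags": [], "confidence": 0.50},
]
queue = select_for_review(items, budget=3, sensitive_tags={"medical"})
print([it["id"] for it in queue])  # [1, 2, 4]
```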

For a deeper look at how teams structure and scale human review, see

5) Test for Bias and Fairness

When models affect people’s outcomes, evaluation should include fairness checks. The simplest starting point is measuring performance across relevant segments and looking for systematic gaps, then using those findings to improve data coverage, refine guidelines, or adjust thresholds and post-processing.
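
As a starting point for that segment comparison, the sketch below computes recall (the true positive rate) per group and the largest gap between groups; comparing true positive rates across groups is often called an equal-opportunity check. Group labels and data are hypothetical, and which metric matters most depends on the harm you are guarding against.

```python
from collections import defaultdict


def recall_by_group(y_true, y_pred, groups):
    """Recall (true positive rate) for each group that has at least one positive."""
    hits, positives = defaultdict(int), defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        if t == 1:
            positives[g] += 1
            hits[g] += int(p == 1)
    return {g: hits[g] / positives[g] for g in positives}


def max_gap(per_group):
    """Largest difference between the best- and worst-served groups."""
    values = list(per_group.values())
    return max(values) - min(values)


y_true = [1, 1, 1, 1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 1]
groups = ["a", "a", "a", "a", "b", "b", "a", "b"]
per_group = recall_by_group(y_true, y_pred, groups)
print(per_group, max_gap(per_group))  # {'a': 0.75, 'b': 0.5} 0.25
```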

6) Monitor Post-Deployment

Evaluation continues after deployment because real-world conditions change. Data drift, new user behaviors, and evolving content can degrade performance over time.
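
One widely used way to quantify drift in a single feature or model score is the population stability index (PSI), which compares how a reference sample and a fresh sample fall into the same bins. This is a sketch with hypothetical bin edges; a frequently cited rule of thumb treats PSI above about 0.25 as a significant shift, but thresholds should be tuned to your data.

```python
import bisect
import math


def _bin_shares(values, edges, eps=1e-6):
    """Share of values per bin; `edges` are sorted interior cut points (len(edges) + 1 bins)."""
    if not values:
        raise ValueError("need at least one value")
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect.bisect_right(edges, v)] += 1
    # Floor empty bins at eps so the log below stays finite.
    return [max(c / len(values), eps) for c in counts]


def psi(reference, current, edges):
    """Population stability index: sum over bins of (cur - ref) * ln(cur / ref)."""
    ref = _bin_shares(reference, edges)
    cur = _bin_shares(current, edges)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


edges = [0.5]  # hypothetical single cut point, giving two bins
reference = [0.1] * 50 + [0.6] * 50
current = [0.1] * 10 + [0.6] * 90
print(round(psi(reference, current, edges), 3))  # 0.879
```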

A practical approach is to schedule re-evaluation on fresh samples, keep a stable regression set to catch unintended drops after updates, and use feedback signals to prioritize review and dataset improvements. This keeps evaluation tied to real usage and helps teams catch issues before they become costly.
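
A regression check on the stable set can compare per-example results rather than only the aggregate score, since a flat average can hide examples that newly broke. A minimal sketch, assuming each run is stored as a hypothetical mapping from example ID to whether the output was judged correct; the 1% tolerance is an arbitrary placeholder.

```python
def compare_runs(baseline, candidate, max_drop=0.01):
    """Compare two runs on the examples both have scored.

    baseline, candidate: dict mapping example ID -> True if the output was judged correct.
    """
    shared = sorted(baseline.keys() & candidate.keys())
    if not shared:
        raise ValueError("runs share no example IDs")
    base_acc = sum(baseline[i] for i in shared) / len(shared)
    cand_acc = sum(candidate[i] for i in shared) / len(shared)
    newly_failing = [i for i in shared if baseline[i] and not candidate[i]]
    return {
        "accuracy_drop": base_acc - cand_acc,
        "newly_failing": newly_failing,  # worth manual review even if the gate passes
        "gate_passed": base_acc - cand_acc <= max_drop,
    }


baseline = {"q1": True, "q2": True, "q3": False, "q4": True}
candidate = {"q1": True, "q2": False, "q3": True, "q4": True}
print(compare_runs(baseline, candidate))
# {'accuracy_drop': 0.0, 'newly_failing': ['q2'], 'gate_passed': True}
```

Here the aggregate accuracy is unchanged, yet `q2` broke, which is exactly the kind of drop a stable regression set is meant to surface.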

Final Thoughts

AI model evaluation is a core discipline for teams deploying models into real workflows. Strong evaluation combines quantitative measurement with structured review, domain-relevant testing, and ongoing monitoring.

If your models influence real decisions, define evaluation criteria tied to user needs and risk, measure performance across meaningful slices, and keep a repeatable review loop that surfaces failures early.

Frequently Asked Questions

What is AI model evaluation?

AI model evaluation is the process of measuring how well a machine learning model performs on specific tasks. It involves using metrics, test data, and sometimes human feedback to assess accuracy, reliability, and fairness.

Why is evaluating AI models important?

Without proper evaluation, AI models can make poor decisions, introduce bias, or fail in real-world scenarios. Evaluation helps confirm that your models are not only accurate but also trustworthy and fit for purpose.

What are the most common metrics used in AI model evaluation?

Popular metrics include precision, recall, F1 score, ROC-AUC, PR-AUC, BLEU (for text generation), and IoU (for object detection). The best metric depends on the task and what outcomes matter most.

How do you evaluate generative AI models like LLMs?

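Generative models are often scored with automatic metrics like BLEU, ROUGE, or METEOR, but these rarely capture whether an output is correct, useful, or safe. Teams typically add factuality and safety checks, along with structured human review for subjective qualities like coherence, helpfulness, or bias.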