LLM Evaluation Methods: How to Trust What Your Model Says

Large language models (LLMs) are powerful, but can you trust their output? Evaluating LLMs is one of the most debated challenges in AI today. Traditional accuracy metrics don’t always apply, and even human reviewers can disagree on what a "good" response looks like. This post explores practical, scalable LLM evaluation methods that go beyond surface-level scores.

Why LLM Evaluation Is Different

LLMs don’t just classify or predict; they generate language. This makes evaluation inherently more subjective. The same input can produce multiple valid outputs, and context often determines whether a response is helpful, misleading, or flat-out wrong.

On top of that, LLMs can sound confident while hallucinating facts. Evaluating their reliability requires more than checking for the "right" answer.

Common LLM Evaluation Methods

1. Human Judgment

Human review is still the gold standard. Domain experts rate responses for factuality, coherence, helpfulness, and safety. It's time-intensive but crucial for sensitive use cases.

2. Reference-Based Metrics

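Metrics like BLEU, ROUGE, and METEOR score LLM output by how much of its wording overlaps with a set of ground-truth references. They are useful for summarization and translation tasks, but limited when valid outputs vary: a correct answer phrased differently from the references can still score poorly.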
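To make the overlap idea concrete, here is a minimal sketch of a ROUGE-1-style unigram F1 score. It is a simplified illustration, not the official ROUGE implementation: tokenization is plain whitespace splitting, and the function name `unigram_f1` and the example sentences are assumptions made for this post.

```python
from collections import Counter


def unigram_f1(candidate: str, reference: str) -> float:
    """ROUGE-1-style F1: clipped unigram overlap between candidate and reference."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    # Counter intersection keeps the minimum count of each shared token.
    overlap = sum((cand & ref).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


reference = "the cat sat on the mat"
paraphrase = "a cat was sitting on the mat"

print(unigram_f1(reference, reference))            # 1.0
print(round(unigram_f1(paraphrase, reference), 3))  # 0.615
```

The paraphrase means the same thing as the reference but scores well below 1.0, which is the limitation described above.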

3. LLM-as-a-Judge

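Use one LLM to rate or rank another's output. It's fast and scalable, but it introduces the judge model's own biases, such as favoring longer answers or a particular answer position. It works best when its ratings are checked against human-reviewed examples.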
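One way to check a judge against human review is to measure agreement on a shared set of examples. The sketch below computes Cohen's kappa, which corrects raw agreement for chance. The labels are made-up illustration data, and `cohens_kappa` is a hand-rolled helper rather than a library call.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two raters over the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("Both raters must label the same non-empty set of items.")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a = Counter(labels_a)
    freq_b = Counter(labels_b)
    # Probability that both raters pick the same label by chance.
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # Both raters used a single identical label throughout.
    return (observed - expected) / (1 - expected)


# Hypothetical labels for eight responses (illustration only).
human = ["good", "bad", "good", "good", "bad", "good", "bad", "bad"]
judge = ["good", "bad", "good", "bad", "bad", "good", "good", "bad"]

kappa = cohens_kappa(human, judge)
print(round(kappa, 2))  # 0.5
```

Raw agreement here is 75%, but kappa is only 0.5 because half of that agreement would be expected by chance. A low kappa is a signal to revise the judge prompt or rely more on human review.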

4. Preference Data and Ranking

Collect human preferences between multiple model outputs. Used heavily in reinforcement learning from human feedback (RLHF) to train and evaluate LLMs.
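As a rough illustration of how pairwise preferences become a ranking, the sketch below tallies a simple win rate per model. The model names and comparison data are hypothetical, and a plain win rate is a simplification: RLHF pipelines typically fit a reward model to the preferences instead.

```python
from collections import defaultdict


def win_rates(comparisons):
    """Fraction of pairwise comparisons each model won.

    `comparisons` is an iterable of (winner, loser) pairs.
    """
    wins = defaultdict(int)
    games = defaultdict(int)
    for winner, loser in comparisons:
        wins[winner] += 1
        games[winner] += 1
        games[loser] += 1
    return {model: wins[model] / games[model] for model in games}


# Hypothetical human preference judgments: (preferred, rejected).
comparisons = [
    ("model_a", "model_b"),
    ("model_a", "model_b"),
    ("model_b", "model_a"),
    ("model_a", "model_c"),
    ("model_c", "model_b"),
]

rates = win_rates(comparisons)
print(rates)  # {'model_a': 0.75, 'model_b': 0.25, 'model_c': 0.5}
```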

5. Behavioral Testing

Stress test models with prompts designed to expose weaknesses, such as factual traps, ethical dilemmas, or contradictory instructions. Then evaluate how the model responds.
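A behavioral test suite can be as simple as a list of prompts paired with pass/fail checks. The sketch below is one possible shape under stated assumptions: `stub_model` stands in for a real model call, the prompts are invented, and the keyword checks are deliberately crude placeholders for a human or rubric-based review.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class BehavioralCase:
    name: str
    prompt: str
    passes: Callable[[str], bool]  # Returns True if the response is acceptable.


def run_suite(model: Callable[[str], str], cases):
    """Run every case through the model and record pass/fail by case name."""
    return {case.name: case.passes(model(case.prompt)) for case in cases}


def stub_model(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call."""
    if "capital of Atlantis" in prompt:
        return "I can't verify that; Atlantis is a legendary place with no known capital."
    return "Sure, here is a short answer."


cases = [
    BehavioralCase(
        name="factual_trap",
        prompt="What is the capital of Atlantis?",
        passes=lambda out: "legendary" in out.lower() or "can't verify" in out.lower(),
    ),
    BehavioralCase(
        name="contradictory_instructions",
        prompt="Answer in exactly one word, and explain in detail.",
        passes=lambda out: "conflict" in out.lower() or "clarify" in out.lower(),
    ),
]

results = run_suite(stub_model, cases)
print(results)  # {'factual_trap': True, 'contradictory_instructions': False}
```

The stub handles the factual trap but ignores the contradictory instructions, which is the kind of weakness this method is meant to surface.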

6. Custom Rubrics

Develop rubrics based on your domain. For example, a legal AI assistant may be scored on citation accuracy, tone, and jurisdictional alignment. These structured reviews help scale expert oversight.
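A rubric like the legal example above can be turned into a single score by weighting each criterion. This is a minimal sketch: the weights, the 1-to-5 rating scale, and the function name `rubric_score` are illustrative assumptions, not a recommended standard.

```python
# Hypothetical weights for the legal-assistant criteria named above.
RUBRIC_WEIGHTS = {
    "citation_accuracy": 0.5,
    "tone": 0.2,
    "jurisdictional_alignment": 0.3,
}


def rubric_score(ratings, weights=RUBRIC_WEIGHTS, scale_max=5):
    """Weighted rubric score normalized to the 0-1 range.

    `ratings` maps each criterion to a reviewer rating on a 1..scale_max scale.
    """
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"Missing ratings for: {sorted(missing)}")
    total_weight = sum(weights.values())
    weighted = sum(weights[name] * ratings[name] for name in weights)
    return weighted / (total_weight * scale_max)


# One reviewer's ratings for a single response (illustration only).
ratings = {"citation_accuracy": 4, "tone": 5, "jurisdictional_alignment": 3}

score = rubric_score(ratings)
print(round(score, 2))  # 0.78
```

Keeping the per-criterion ratings alongside the combined score makes it easier to see why a response scored poorly.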

To dive deeper into evaluation frameworks, whether you're scoring annotations, fine-tuning LLMs, or validating outputs with human oversight, check out our Complete Guide to Evaluations in AI. It offers a broader view of strategies and tools to measure model performance with clarity and confidence.

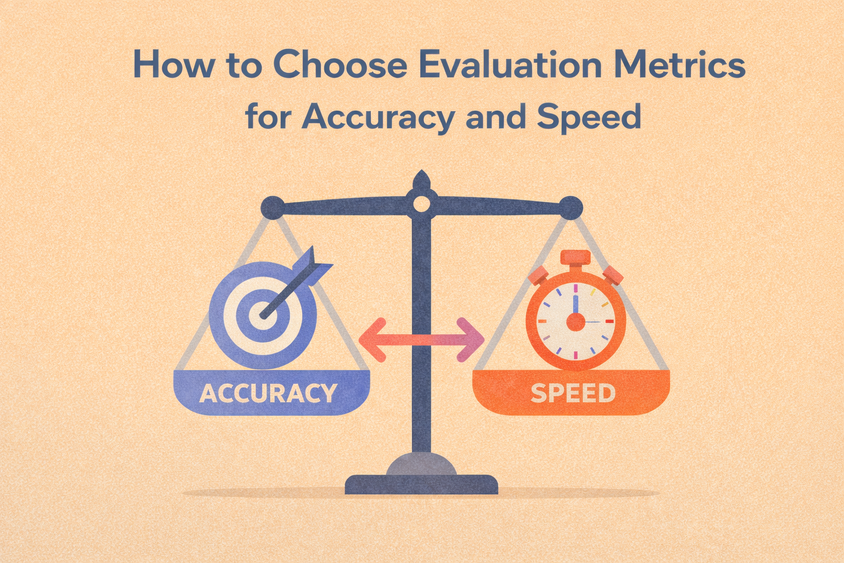

Evaluating LLMs isn’t just about metrics; it’s about aligning your model with real-world needs. Whether you're comparing fine-tuned variants or auditing responses before deployment, your evaluation strategy should be as nuanced as the model you're testing.

Make evaluation an active, evolving part of your LLM pipeline. Your model’s credibility depends on it.

Frequently Asked Questions

What are LLM evaluation methods?

LLM evaluation methods are strategies used to assess the quality, accuracy, and reliability of large language model outputs. These can include human review, automatic metrics, preference data, and more advanced techniques like LLM-as-a-judge or behavioral testing.

Why is evaluating LLMs different from other AI models?

Unlike classification models, LLMs generate free-form text. This makes their outputs more subjective and context-dependent, often requiring nuanced evaluation beyond accuracy or F1 score.

What is LLM-as-a-Judge?

LLM-as-a-Judge refers to using one language model to evaluate the output of another. It's scalable and cost-effective, but must be validated carefully, as it can reflect and amplify model biases.

Can I automate LLM evaluation completely?

While some aspects of evaluation can be automated using metrics or model-based judging, full automation isn’t recommended for high-stakes applications. A hybrid approach that includes human oversight is typically more reliable.