Machine Learning Evaluation Metrics: What Really Matters?

When building a machine learning model, performance is often judged by a single number: accuracy. But that number alone can be misleading, especially when working with imbalanced datasets or high-risk applications. To evaluate your model fairly and meaningfully, you need a set of metrics tailored to your problem.

In this post, we’ll walk through essential machine learning evaluation metrics, why they matter, and how to choose the best ones for your specific task.

1. Why Evaluation Metrics Matter

A model that performs well on paper can still fail in production. Evaluation metrics are your first line of defense against deploying unreliable, biased, or misleading models. They provide a quantitative way to compare models, track progress, and understand trade-offs.

Choosing the wrong metric can lead to dangerous conclusions. For example, in fraud detection or medical diagnosis, a high accuracy score might hide the fact that your model misses nearly all positive cases.

2. Core Evaluation Metrics (and When to Use Them)

Accuracy

- Definition: The proportion of total predictions your model got right.

- Use When: Classes are balanced and errors are equally costly.

- Watch Out: In imbalanced datasets (e.g., 95% class A, 5% class B), accuracy can be deceptive.
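
To see how accuracy can deceive on a 95/5 split, here is a minimal sketch. The labels and the always-predict-the-majority "model" are made up for illustration, and the helper names are our own.

```python
# Hypothetical labels: 95 examples of class A (0) and 5 of class B (1).
y_true = [0] * 95 + [1] * 5

# A "model" that ignores its input and always predicts the majority class.
y_pred = [0] * 100


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def positives_found(y_true, y_pred):
    """Number of actual positives that were also predicted positive."""
    return sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))


print(accuracy(y_true, y_pred))         # 0.95
print(positives_found(y_true, y_pred))  # 0
```

The score looks strong, yet the model never finds a single class B example.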

Precision

- Definition: Of all predicted positives, how many were actually correct?

- Use When: False positives are costly (e.g., spam detection, medical tests).

- Formula: TP / (TP + FP)

Recall (Sensitivity)

- Definition: Of all actual positives, how many did the model correctly identify?

- Use When: Missing a positive is worse than a false alarm (e.g., cancer detection).

- Formula: TP / (TP + FN)
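
To make the precision and recall formulas concrete, the sketch below counts TP, FP, and FN from two label lists and applies both formulas. The data is invented, and returning 0.0 when a denominator is zero is a convention we assume here, not a universal rule.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count true positives, false positives, and false negatives."""
    tp = fp = fn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive and t == positive:
            tp += 1
        elif p == positive and t != positive:
            fp += 1
        elif p != positive and t == positive:
            fn += 1
    return tp, fp, fn


def precision(tp, fp):
    # Assumption: report 0.0 when the model made no positive predictions.
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp, fn):
    # Assumption: report 0.0 when there are no actual positives.
    return tp / (tp + fn) if (tp + fn) else 0.0


# Hypothetical labels: 4 actual positives, 6 actual negatives.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 0, 0, 0]

tp, fp, fn = confusion_counts(y_true, y_pred)
print(tp, fp, fn)                   # 2 1 2
print(round(precision(tp, fp), 3))  # 0.667
print(recall(tp, fn))               # 0.5
```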

F1 Score

- Definition: Harmonic mean of precision and recall.

- Use When: You need a balance between false positives and false negatives.

- Formula: 2 * (Precision * Recall) / (Precision + Recall)
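
A quick sketch of why the harmonic mean matters: when precision and recall are far apart, F1 stays close to the weaker of the two, while a plain average would hide the gap. The input values are illustrative, and treating the 0/0 case as 0.0 is an assumed convention.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0  # assumed convention for the undefined 0/0 case
    return 2 * (precision * recall) / (precision + recall)


# Hypothetical model: every positive prediction is right, but it finds
# only 10% of the actual positives.
p, r = 1.0, 0.1

print(round(f1_score(p, r), 3))  # 0.182
print(round((p + r) / 2, 3))     # 0.55 (arithmetic mean, for comparison)
```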

ROC-AUC (Receiver Operating Characteristic - Area Under Curve)

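- Definition: Measures how well the model’s scores separate the classes. It equals the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one (0.5 is chance, 1.0 is perfect separation).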

- Use When: You want a threshold-independent view of model performance.

- Strength: Especially useful for binary classification and comparing multiple models.
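
One way to build intuition for ROC-AUC is its ranking interpretation: it is the fraction of positive/negative pairs in which the positive example gets the higher score, with ties counted as half. The brute-force sketch below computes it that way. It is fine for small examples but quadratic in the data size, so treat it as an illustration rather than a production implementation.

```python
def roc_auc(y_true, scores):
    """ROC-AUC for binary labels (1 = positive, 0 = negative) via pair counting."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("ROC-AUC needs at least one positive and one negative")

    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0   # positive ranked above negative
            elif p == n:
                wins += 0.5   # ties count as half a win
    return wins / (len(pos) * len(neg))


# Hypothetical labels and model scores.
y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]

print(roc_auc(y_true, scores))  # 0.75
```

No threshold appears anywhere in the computation, which is what makes the metric threshold-independent.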

3. Specialized Metrics for NLP and Beyond

BLEU (Bilingual Evaluation Understudy)

- Used For: Evaluating machine translation and other text generation tasks.

- Limitations: Focuses on n-gram overlap, which doesn’t always capture meaning.
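
The n-gram limitation is easy to demonstrate. The sketch below implements only the clipped n-gram precision at the heart of BLEU, for a single reference and a single n. Full BLEU combines several n-gram orders and adds a brevity penalty, both of which are left out here. The sentences and function names are our own.

```python
from collections import Counter


def ngrams(tokens, n):
    """All contiguous n-grams in a token list, as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def clipped_precision(candidate, reference, n=1):
    """Share of candidate n-grams found in the reference, with counts clipped."""
    cand = Counter(ngrams(candidate, n))
    ref = Counter(ngrams(reference, n))
    if not cand:
        return 0.0
    matched = sum(min(count, ref[gram]) for gram, count in cand.items())
    return matched / sum(cand.values())


reference = "the cat is on the mat".split()
paraphrase = "a feline sits on the rug".split()  # same meaning, different words
scrambled = "mat the on is cat the".split()      # same words, no meaning

print(round(clipped_precision(paraphrase, reference), 3))  # 0.333
print(clipped_precision(scrambled, reference))             # 1.0
print(clipped_precision(scrambled, reference, n=2))        # 0.0
```

The faithful paraphrase scores low, while the scrambled sentence gets a perfect unigram score. Higher-order n-grams catch the scrambling, but nothing in the metric rewards the paraphrase.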

ROUGE (Recall-Oriented Understudy for Gisting Evaluation)

- Used For: Summarization tasks; measures recall of overlapping units such as words or n-grams.

- Best When: You want to evaluate how much of a reference summary’s content the generated summary covers.
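
As a rough illustration of the recall idea, here is a simplified ROUGE-N recall against a single reference summary. It assumes whitespace tokenization with no stemming or other preprocessing, and the sentences are invented.

```python
from collections import Counter


def ngrams(tokens, n):
    """All contiguous n-grams in a token list, as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def rouge_n_recall(candidate, reference, n=1):
    """Share of reference n-grams that also appear in the candidate."""
    cand = Counter(ngrams(candidate, n))
    ref = Counter(ngrams(reference, n))
    total = sum(ref.values())
    if total == 0:
        return 0.0
    overlap = sum(min(count, cand[gram]) for gram, count in ref.items())
    return overlap / total


reference = "the cat sat on the mat".split()
candidate = "the cat sat".split()

print(rouge_n_recall(candidate, reference, n=1))  # 0.5
print(rouge_n_recall(candidate, reference, n=2))  # 0.4
```

Note that the denominator is the reference's n-gram count, which is what makes this a recall-style measure.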

Token-Level Accuracy

- Used For: Sequence labeling tasks (e.g., Named Entity Recognition).

- Watch Out: Gives partial credit when only part of an entity is captured, so the score can look high even when few entities are extracted exactly.
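
The sketch below contrasts token-level accuracy with exact entity matching on a single sentence. It assumes BIO-style tags (B- starts an entity, I- continues it, O is outside), and the tags and helper names are made up for the example.

```python
def token_accuracy(gold, pred):
    """Fraction of tokens whose predicted tag equals the gold tag."""
    return sum(g == p for g, p in zip(gold, pred)) / len(gold)


def extract_entities(tags):
    """Return a set of (start, end, label) spans from BIO tags (end exclusive)."""
    entities = set()
    start, label = None, None
    for i, tag in enumerate(tags + ["O"]):  # sentinel closes a trailing entity
        continues = tag.startswith("I-") and label == tag[2:]
        if not continues:
            if start is not None:
                entities.add((start, i, label))
                start, label = None, None
            if tag.startswith("B-"):
                start, label = i, tag[2:]
            # Assumption: a stray I- tag with no matching B- is ignored.
    return entities


# Tokens: New  York  City  is  big
gold = ["B-LOC", "I-LOC", "I-LOC", "O", "O"]
pred = ["B-LOC", "I-LOC", "O", "O", "O"]  # captures only "New York"

print(token_accuracy(gold, pred))                               # 0.8
print(len(extract_entities(gold) & extract_entities(pred)))     # 0
```

Four of five tokens are tagged correctly, yet the one entity in the sentence is not recovered exactly.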

4. Regression Metrics

If your model predicts continuous values, you’ll want different metrics:

- Mean Absolute Error (MAE): Average magnitude of errors, no direction considered.

- Mean Squared Error (MSE): Average of the squared errors. Like MAE, but squaring makes large errors count disproportionately.

- R² Score: Proportion of variance explained by the model.
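
Here is a small sketch of all three regression metrics on made-up values. Notice how the single large miss dominates MSE far more than it does MAE.

```python
def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mse(y_true, y_pred):
    """Mean squared error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def r2_score(y_true, y_pred):
    """1 - (residual sum of squares / total sum of squares)."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot  # undefined if y_true is constant


# Hypothetical targets and predictions; the last prediction is off by 4.
y_true = [3.0, 5.0, 7.0, 9.0]
y_pred = [2.0, 5.0, 8.0, 13.0]

print(mae(y_true, y_pred))                 # 1.5
print(mse(y_true, y_pred))                 # 4.5
print(round(r2_score(y_true, y_pred), 3))  # 0.1
```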

5. Choosing the Right Metric

Think about:

- Task Type: Classification, regression, or generation?

- Class Balance: Imbalanced datasets often need precision/recall.

- Cost of Errors: Are false positives or false negatives more harmful?

- Interpretability: Will your stakeholders understand the metric?

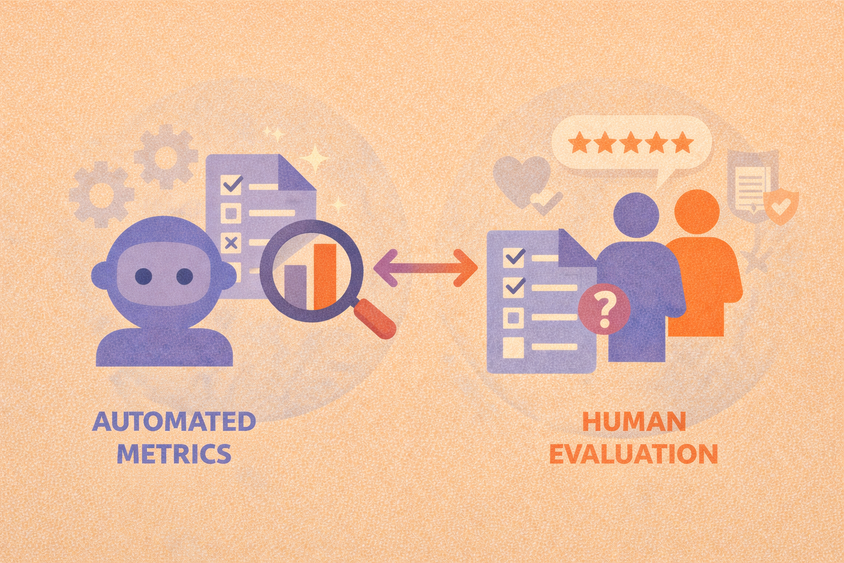

Don’t rely on a single number. Instead, use a suite of metrics to capture a fuller picture. In real-world deployments, you may even track metrics over time or across user segments.
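
As one way to look across user segments, the sketch below groups predictions by a segment label and reports accuracy for each group. The segment names and records are hypothetical, and the same pattern works for any metric.

```python
from collections import defaultdict


def accuracy_by_segment(records):
    """records: iterable of (segment, y_true, y_pred) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for segment, t, p in records:
        total[segment] += 1
        correct[segment] += int(t == p)
    return {segment: correct[segment] / total[segment] for segment in total}


# Hypothetical predictions tagged with the user's platform.
records = [
    ("mobile", 1, 1), ("mobile", 0, 0), ("mobile", 1, 1), ("mobile", 0, 0),
    ("desktop", 1, 0), ("desktop", 0, 0), ("desktop", 1, 0), ("desktop", 0, 0),
]

overall = sum(t == p for _, t, p in records) / len(records)
per_segment = accuracy_by_segment(records)

print(overall)                 # 0.75
print(per_segment["mobile"])   # 1.0
print(per_segment["desktop"])  # 0.5
```

An overall figure of 0.75 hides the fact that one segment is served perfectly and the other no better than a coin flip.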

6. Putting Metrics Into Practice

Tools like Label Studio, scikit-learn, and MLflow let you integrate metric tracking into your ML pipeline. Whether you’re tuning hyperparameters or running a production model, automated metric reporting can help catch issues early and keep models aligned with goals.

If you're managing annotation workflows, tools like Label Studio let you inspect label quality directly, using metrics like Krippendorff’s Alpha for inter-annotator agreement and precision/recall for label review models.

Frequently Asked Questions

What is the most important machine learning evaluation metric?

There’s no single best metric. The right choice depends on the task, class balance, and business objectives.

Why is accuracy not always a good metric?

Accuracy can be misleading in imbalanced datasets where one class dominates. It doesn’t tell you how well the model performs on minority classes.

What’s the difference between precision and recall?

Precision measures correctness among positive predictions; recall measures coverage of actual positives.

When should I use the F1 score?

Use F1 when you need to balance both precision and recall, especially with imbalanced classes.