Human-in-the-Loop Evaluations: Why People Still Matter in AI

As AI systems become more advanced, the need for human oversight hasn’t disappeared; it has grown. Human-in-the-loop (HITL) evaluation is a cornerstone of responsible AI development, especially when accuracy alone isn’t enough. This post explores why and how to involve humans in the evaluation process, and where HITL methods provide the most value.

What Is Human-in-the-Loop Evaluation?

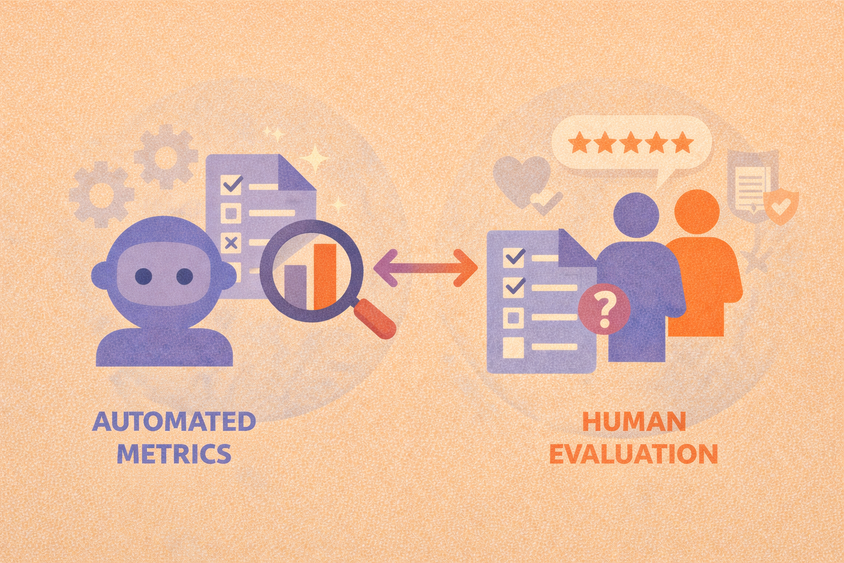

Human-in-the-loop evaluation refers to the practice of incorporating people—often domain experts, reviewers, or annotators—into the process of assessing model outputs. Unlike automated metrics, HITL evaluation allows for nuanced judgment, domain awareness, and ethical reasoning that machines can’t fully replicate.

These evaluations often focus on:

- Factual accuracy

- Coherence or fluency

- Relevance to user intent

- Bias, safety, and ethical concerns
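One way to make these criteria reviewable is to record a separate score per criterion for each reviewer, then average per output so weak spots stand out. The sketch below is a minimal illustration, not a Label Studio API: the `Review` class, the `criterion_means` helper, the criterion keys, and the 1-5 scale are all hypothetical choices.

```python
from dataclasses import dataclass
from statistics import mean

# Hypothetical rubric keys mirroring the criteria listed above.
CRITERIA = ("factual_accuracy", "coherence", "relevance", "safety")


@dataclass
class Review:
    reviewer: str
    output_id: str
    scores: dict[str, int]  # criterion -> score on an assumed 1-5 scale

    def __post_init__(self) -> None:
        # Reject incomplete or out-of-range reviews at entry time.
        missing = set(CRITERIA) - set(self.scores)
        if missing:
            raise ValueError(f"missing criteria: {sorted(missing)}")
        for name, value in self.scores.items():
            if not 1 <= value <= 5:
                raise ValueError(f"{name} score {value} is outside 1-5")


def criterion_means(reviews: list[Review], output_id: str) -> dict[str, float]:
    """Average each criterion across all reviewers of one output."""
    relevant = [r for r in reviews if r.output_id == output_id]
    if not relevant:
        raise ValueError(f"no reviews for {output_id}")
    return {c: mean(r.scores[c] for r in relevant) for c in CRITERIA}
```

Keeping criteria separate, rather than collecting a single overall score, makes it possible to see, for example, that an output reads fluently but is factually weak.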

When to Use HITL Evaluation

Human input is especially valuable when:

- Tasks are open-ended (e.g., summarization, question answering)

- Outputs require domain-specific knowledge (e.g., legal, medical)

- You’re assessing subjective traits (e.g., tone, helpfulness)

- Ethical risks or harms are hard to detect via automation

In these cases, HITL evaluation serves not just as a quality check but as a safeguard against failure modes that metrics alone can miss.

HITL Evaluation in Practice

Depending on the complexity of your use case, HITL can take different forms:

- Spot checks: Periodic review of model outputs by experts.

- Side-by-side comparisons: Reviewers compare two outputs and select the preferred one.

- Scoring with rubrics: Structured evaluation based on predefined criteria.

- Escalation workflows: Automatic routing of uncertain or sensitive cases to a human reviewer.
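An escalation workflow can be as simple as a routing rule that sends low-confidence or sensitive outputs to a person and lets the rest pass. The sketch below assumes each output carries a confidence score in [0, 1] and a set of topic tags; the 0.8 threshold, the topic names, and the `ModelOutput`/`route` names are hypothetical and would need tuning for a real system.

```python
from dataclasses import dataclass

# Hypothetical topics that always go to a person, regardless of confidence.
SENSITIVE_TOPICS = frozenset({"medical", "legal", "self_harm"})


@dataclass(frozen=True)
class ModelOutput:
    output_id: str
    text: str
    confidence: float  # assumed to be a calibrated score in [0, 1]
    topics: frozenset[str] = frozenset()


def needs_human_review(out: ModelOutput, threshold: float = 0.8) -> bool:
    """Escalate when the model is unsure or the topic is sensitive."""
    return out.confidence < threshold or bool(out.topics & SENSITIVE_TOPICS)


def route(
    outputs: list[ModelOutput], threshold: float = 0.8
) -> tuple[list[ModelOutput], list[ModelOutput]]:
    """Split outputs into (auto_accepted, escalated) queues, preserving order."""
    auto, escalated = [], []
    for out in outputs:
        (escalated if needs_human_review(out, threshold) else auto).append(out)
    return auto, escalated
```

Checking topics independently of confidence matters because a model can be confidently wrong; a high score alone should not let a sensitive case skip review.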

Many organizations use Label Studio or similar platforms to build structured, auditable HITL workflows that can scale with their teams.
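Preferences collected from side-by-side comparisons are usually summarized as a win rate. The sketch below assumes each reviewer judgment is recorded as `"A"`, `"B"`, or `"tie"`; counting a tie as half a win is one common convention, not the only one, and the `win_rate` function is hypothetical.

```python
from collections import Counter

VALID = {"A", "B", "tie"}


def win_rate(judgments: list[str]) -> float:
    """Fraction of side-by-side comparisons won by output A.

    Ties count as half a win for each side (an assumed convention).
    """
    counts = Counter(judgments)
    unknown = set(counts) - VALID
    if unknown:
        raise ValueError(f"unexpected judgments: {sorted(unknown)}")
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no judgments to summarize")
    return (counts["A"] + 0.5 * counts["tie"]) / total
```

For example, `win_rate(["A", "A", "B", "tie"])` gives 0.625: two wins plus half of one tie, out of four comparisons.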

Scaling Human Review

HITL evaluation is resource-intensive, but it can be scaled. Common strategies include:

- Training internal QA teams or leveraging external experts

- Using sampling methods to review a subset of outputs

- Combining automated scoring with selective human checks
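Sampling and automated scoring can be combined into a single selection step: send every output the metric flags as weak to a human, plus a random slice of the rest as a spot check on the metric itself. The sketch below assumes automated scores in [0, 1]; `floor`, `sample_rate`, and `select_for_review` are hypothetical names, and the defaults are placeholders rather than recommendations.

```python
import random


def select_for_review(
    scored: list[tuple[str, float]],
    floor: float = 0.6,
    sample_rate: float = 0.1,
    seed: int = 0,
) -> list[str]:
    """Return output ids to send to human reviewers.

    scored: (output_id, automated_score) pairs.
    Every output scoring below `floor` is reviewed; of the remaining
    outputs, a random `sample_rate` fraction is reviewed as a spot check.
    """
    if not 0.0 <= sample_rate <= 1.0:
        raise ValueError("sample_rate must be between 0 and 1")
    rng = random.Random(seed)  # seeded so the selection is reproducible
    low = [oid for oid, score in scored if score < floor]
    rest = [oid for oid, score in scored if score >= floor]
    k = round(len(rest) * sample_rate)
    spot_checks = rng.sample(rest, k)
    return low + spot_checks
```

The random spot checks are what keep this from trusting the metric blindly: if reviewers keep finding problems among high-scoring outputs, the automated score needs revisiting.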

A well-designed HITL workflow doesn't have to bottleneck development; it enhances trust and accountability.

Final Thoughts

Human-in-the-loop evaluations keep AI grounded in human values. In a world where models can sound confident even when wrong, human review is a critical counterbalance. As you scale your systems, remember: the more your model affects people, the more people should be involved in evaluating it.

Frequently Asked Questions


What is human-in-the-loop evaluation?

It’s the practice of using human reviewers to evaluate AI model outputs, especially for tasks that require subjective or ethical judgment.

When should I use HITL evaluations?

Use HITL when tasks are subjective, high-risk, or domain-specific, such as medical analysis, legal document review, or content moderation.

Can human-in-the-loop evaluation scale?

Yes. While resource-intensive, it can be scaled using sampling, escalation workflows, and expert labeling platforms.