Export annotations and data from Label Studio

At any point in your labeling project, you can export the annotations from Label Studio.

Label Studio stores your annotations in a raw JSON format in the SQLite database backend, PostgreSQL database backend, or whichever cloud or database storage you specify as target storage. Cloud storage buckets contain one file per labeled task named task_id.json. For more information about syncing target storage, see Cloud storage setup.

Image annotations exported in JSON format use percentages of overall image size, not pixels, to describe the size and location of the bounding boxes. For more information, see how to convert the image annotation units.

note

Some export formats export only the annotations and not the data from the task. For more information, see the export formats supported by Label Studio.

How Label Studio saves results in annotations

Each annotation that you create when you label a task contains regions and results.

- Regions refer to the selected area of the data, whether a text span, image area, audio segment, or another entity.

- Results refer to the labels assigned to the region.

Each region has a unique ID for each annotation, formed as a string with the characters A-Za-z0-9_-. Each result ID is the same as the region ID that it applies to.

When a prediction is used to create an annotation, the result IDs stay the same in the annotation field. This allows you to track the regions generated by your machine learning model and compare them directly to the human-created and reviewed annotations.
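Because result IDs carry over from a prediction into the annotation created from it, the two can be compared by ID. The following is a minimal sketch of that comparison, not part of Label Studio itself: the function names and the ID `aB3_x-9QzL` are hypothetical, and the dictionaries keep only the fields the comparison needs.

```python
def index_results_by_id(results):
    """Group result dicts by their shared region/result ID."""
    by_id = {}
    for item in results:
        by_id.setdefault(item["id"], []).append(item)
    return by_id


def compare_prediction_to_annotation(prediction, annotation):
    """Report which predicted region IDs were kept, removed, or added."""
    predicted = index_results_by_id(prediction["result"])
    annotated = index_results_by_id(annotation["result"])
    return {
        "kept": sorted(predicted.keys() & annotated.keys()),
        "removed": sorted(predicted.keys() - annotated.keys()),
        "added": sorted(annotated.keys() - predicted.keys()),
    }


# Trimmed-down prediction and annotation; "aB3_x-9QzL" is a made-up ID.
prediction = {"result": [
    {"id": "t5sp3TyXPo", "type": "rectanglelabels"},
    {"id": "aB3_x-9QzL", "type": "rectanglelabels"},
]}
annotation = {"result": [
    {"id": "t5sp3TyXPo", "type": "rectanglelabels"},
    {"id": "Dx_aB91ISN", "type": "rectanglelabels"},
]}

diff = compare_prediction_to_annotation(prediction, annotation)
print(diff)
```

Regions listed under "kept" came from the model and survived review, "removed" regions were deleted by the annotator, and "added" regions were drawn by hand.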

Export using the UI in Community Edition of Label Studio

Use the following steps to export data and annotations from the Label Studio UI.

- For a project, click Export.

- Select an available export format.

- Click Export to export your data.

note

- The export will always include the annotated tasks, regardless of filters set on the tab.

- Cancelled annotated tasks will be included in the exported result too.

- If you want to apply tab filters to the export, try creating export snapshots using the SDK.

Export timeout in Community Edition

Exports from the Community Edition UI are generated synchronously as part of the request. Community Edition keeps deployment simple and does not run background export workers by default. Label Studio Enterprise supports background workers for asynchronous snapshot exports, which are better suited to large-scale projects. For large projects, a Community Edition export can take longer than the timeout configured in your reverse proxy or ingress (often around 90 seconds), which can result in 502/504 errors or an export timeout.

If you hit this limitation, you can still export your data using one of these options:

- Export snapshots using the SDK: See how to export snapshots using the SDK.

- Export using the console command: Use the console command to export your project directly from the machine running Label Studio.

- Export in the UI at scale: Label Studio Enterprise includes background snapshot exports in the UI for large datasets (see Export snapshots using the UI).

Export using console command

Use the following command to export data and annotations.

label-studio export <project-id> <export-format> --export-path=<output-path>

To enable logs:

DEBUG=1 LOG_LEVEL=DEBUG label-studio export <project-id> <export-format> --export-path=<output-path>

Export using the Easy Export API

You can call the Label Studio API to export annotations. For a small labeling project, call the export endpoint to export annotations.

Export all tasks including tasks without annotations

Label Studio open source exports only tasks with annotations by default. To export all tasks, including tasks without annotations, call the Easy Export API with the query parameter download_all_tasks=true. For example:

curl -X GET 'https://localhost:8080/api/projects/{id}/export?exportType=JSON&download_all_tasks=true'

If your project is large, you can use a snapshot export to avoid timeouts in most cases. Snapshots include tasks without annotations by default.
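If you call the export endpoint from a script, it can help to build the URL in code so that the query string is encoded correctly. This is a minimal sketch using only the standard library. The `exportType` and `download_all_tasks` parameters come from the curl example above, while the function name, the base URL, and the project ID `1` are placeholders chosen for illustration.

```python
from urllib.parse import urlencode


def build_export_url(base_url, project_id, export_type="JSON", download_all_tasks=False):
    """Build the export endpoint URL together with its query string."""
    params = {"exportType": export_type}
    if download_all_tasks:
        # Include tasks that have no annotations yet.
        params["download_all_tasks"] = "true"
    return f"{base_url.rstrip('/')}/api/projects/{project_id}/export?{urlencode(params)}"


# Placeholder host and project ID; replace them with your own values.
url = build_export_url("http://localhost:8080", 1, download_all_tasks=True)
print(url)
```

The resulting URL can be passed to any HTTP client together with your authorization header.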

Export snapshots using the Snapshot API

For a large labeling project with hundreds of thousands of tasks, do the following:

- Make a POST request to create a new export file or snapshot. The response includes an id for the created file.

- Check the status of the export file created using the id as the export_pk.

- Using the id from the created snapshot as the export primary key, or export_pk, make a GET request to download the export file.
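The three steps above can be sketched as a create, poll, download loop. This is an illustration under stated assumptions rather than the official client: `client` is a hypothetical object that wraps your HTTP library, and the endpoint paths and the `status` values (`completed`, `failed`) are assumptions to verify against the API reference for your Label Studio version. A small in-memory fake stands in for the server so the sketch runs on its own.

```python
import time


def export_snapshot(client, project_id, poll_interval=1.0, max_polls=60):
    """Create a snapshot, wait until it is ready, then download it.

    `client` is a hypothetical wrapper with three methods:
    post(path) -> dict, get_json(path) -> dict, get_bytes(path) -> bytes.
    The paths and status values used here are assumptions.
    """
    base = f"/api/projects/{project_id}/exports"

    # Step 1: create the snapshot; the response includes its id.
    export_pk = client.post(base)["id"]

    # Step 2: poll the snapshot status, using the id as export_pk.
    for _ in range(max_polls):
        status = client.get_json(f"{base}/{export_pk}")["status"]
        if status == "completed":
            break
        if status == "failed":
            raise RuntimeError(f"snapshot {export_pk} failed")
        time.sleep(poll_interval)
    else:
        raise TimeoutError(f"snapshot {export_pk} not ready after {max_polls} polls")

    # Step 3: download the finished export file.
    return client.get_bytes(f"{base}/{export_pk}/download")


class FakeClient:
    """In-memory stand-in so the sketch runs without a server."""

    def __init__(self):
        self.statuses = iter(["created", "in_progress", "completed"])

    def post(self, path):
        return {"id": 7}

    def get_json(self, path):
        return {"status": next(self.statuses)}

    def get_bytes(self, path):
        return b"[]"


data = export_snapshot(FakeClient(), project_id=1, poll_interval=0)
print(data)
```

Passing the client in as an argument keeps the polling logic independent of any particular HTTP library and makes it easy to test.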

Export formats supported by Label Studio

Label Studio supports many common and standard formats for exporting completed labeling tasks. If you don’t see a format that works for you, you can contribute one. For more information, see the Label Studio Converter tool in our SDK repo.

ASR_MANIFEST

Export audio transcription labels for automatic speech recognition as the JSON manifest format expected by NVIDIA NeMo models. Supports audio transcription labeling projects that use the Audio tag with the TextArea tag.

{"audio_filepath": "/path/to/audio.wav", "text": "the transcription", "offset": 301.75, "duration": 0.82, "utt": "utterance_id", "ctm_utt": "en_4156", "side": "A"}

Brush labels to NumPy and PNG

Export your brush mask labels as NumPy 2D arrays and PNG images. Each label outputs as one image. Supports brush labeling image projects that use the BrushLabels tag.

COCO

A popular machine learning format used by the COCO dataset for object detection and image segmentation tasks. Supports bounding box and polygon image labeling projects that use the BrushLabels, RectangleLabels, KeyPointLabels (see note below), or PolygonLabels tags.

KeyPointLabels Export Support

If using KeyPointLabels, you will need to add the following to your labeling config:

- At least one <RectangleLabels> option. You will use this as a parent bounding box for the keypoints.

- Add a model_index to every <Label> inside your <KeyPointLabels> tag. The model_index value defines the order of the keypoint coordinates in the output array for YOLO.

For example:

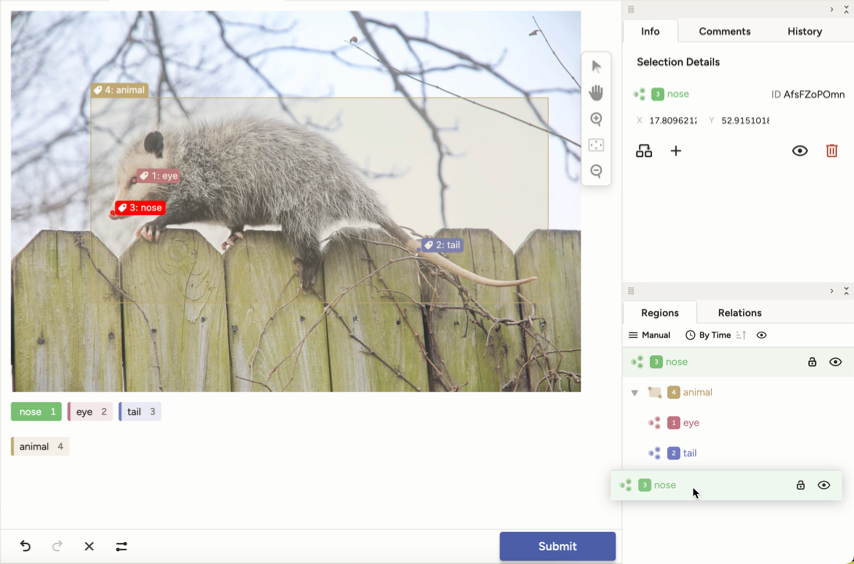

<View>

<Image name="image" value="$image"/>

<KeyPointLabels name="kp" toName="image">

<Label value="nose" model_index="0"/>

<Label value="eye" model_index="1"/>

<Label value="tail" model_index="2"/>

</KeyPointLabels>

<RectangleLabels name="bbox" toName="image">

<Label value="animal"/>

</RectangleLabels>

</View>

After annotating, you must drag-and-drop each keypoint region under its corresponding rectangle region in the Regions panel.

This establishes a parent–child hierarchy (via parentID), which is necessary for export. See the export examples below.
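To illustrate the hierarchy, here is a minimal sketch that groups keypoint results under their parent rectangles by following parentID. It is not the exporter's own code. The function name and the ID `kp_no_parent` are hypothetical, and the result dictionaries are trimmed to the fields the grouping needs.

```python
from collections import defaultdict


def group_keypoints_by_parent(results):
    """Map each rectangle region ID to the keypoint labels nested under it.

    Also returns the IDs of keypoints that have no rectangle parent.
    """
    rectangles = {r["id"] for r in results if r["type"] == "rectanglelabels"}
    children = defaultdict(list)
    orphans = []
    for r in results:
        if r["type"] != "keypointlabels":
            continue
        parent = r.get("parentID")
        if parent in rectangles:
            children[parent].extend(r["value"]["keypointlabels"])
        else:
            orphans.append(r["id"])
    return dict(children), orphans


results = [
    {"id": "17n06ubOJs", "type": "keypointlabels", "parentID": "QHG4TBXuNC",
     "value": {"keypointlabels": ["nose"]}},
    # Hypothetical keypoint that was never dragged under a rectangle.
    {"id": "kp_no_parent", "type": "keypointlabels",
     "value": {"keypointlabels": ["eye"]}},
    {"id": "QHG4TBXuNC", "type": "rectanglelabels",
     "value": {"rectanglelabels": ["animal"]}},
]

grouped, orphans = group_keypoints_by_parent(results)
print(grouped, orphans)
```

A check like this before exporting can reveal keypoints that still need to be placed under a rectangle in the Regions panel.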

Export examples

[

{

"result": [

{

"id": "17n06ubOJs",

"type": "keypointlabels",

"value": {

"x": 6.675567423230974,

"y": 20.597014925373134,

"width": 0.26702269692923897,

"keypointlabels": [

"nose"

]

},

"origin": "manual",

"to_name": "image",

"parentID": "QHG4TBXuNC",

"from_name": "kp",

"image_rotation": 0,

"original_width": 200,

"original_height": 179

},

{

"id": "QHG4TBXuNC",

"type": "rectanglelabels",

"value": {

"x": 3.871829105473965,

"y": 4.029850746268656,

"width": 94.39252336448598,

"height": 92.08955223880598,

"rotation": 0,

"rectanglelabels": [

"animal"

]

},

"origin": "manual",

"to_name": "image",

"from_name": "bbox",

"image_rotation": 0,

"original_width": 200,

"original_height": 179

}

]

}

]

The same regions in COCO format:

[

{

"id": 0,

"image_id": 0,

"category_id": 0,

"segmentation": [],

"bbox": [

7.74365821094793,

7.213432835820895,

188.78504672897196,

164.84029850746268

],

"ignore": 0,

"iscrowd": 0,

"area": 31119.38345654903

},

{

"id": 1,

"image_id": 0,

"category_id": 0,

"keypoints": [

13,

37,

2,

33,

33,

2,

167,

24,

2

],

"num_keypoints": 3,

"bbox": [

13,

24,

154,

13

],

"iscrowd": 0

}

]

The same regions in YOLO format:

0 0.5106809078771696 0.5007462686567165 0.9439252336448598 0.9208955223880598 0.06675567423230974 0.20597014925373133 2 0.1628838451268358 0.18507462686567164 2 0.8371161548731643 0.13134328358208955 2

CoNLL2003

A popular format used for the CoNLL-2003 named entity recognition challenge. Supports text labeling projects that use the Text and Labels tags.

CSV

Results are stored as comma-separated values with the column names specified by the values of the "from_name" and "to_name" fields in the labeling configuration. Supports all project types.

JSON

List of items in raw JSON format stored in one JSON file. Use this format to export both the data and the annotations for a dataset. Supports all project types.

JSON_MIN

List of items where only "from_name", "to_name" values from the raw JSON format are exported. Use this format to export the annotations and the data for a dataset, and no Label-Studio-specific fields. Supports all project types.

For example:

{

"image": "https://htx-pub.s3.us-east-1.amazonaws.com/examples/images/nick-owuor-astro-nic-visuals-wDifg5xc9Z4-unsplash.jpg",

"tag": [{

"height": 10.458911419423693,

"rectanglelabels": [

"Moonwalker"

],

"rotation": 0,

"width": 12.4,

"x": 50.8,

"y": 5.869797225186766

}]

}

Pascal VOC XML

A popular XML format used for object detection and image segmentation tasks. Supports bounding box image labeling projects that use the RectangleLabels tag.

spaCy

Label Studio does not support exporting directly to spaCy binary format, but you can convert annotations exported from Label Studio to a format compatible with spaCy. You must have the spacy python package installed to perform this conversion.

To transform Label Studio annotations into spaCy binary format, do the following:

- Export your annotations to CONLL2003 format.

- Open the downloaded file and update the first line of the exported file to add O on the first line:

-DOCSTART- -X- O O

- From the command line, run spacy convert to convert the CoNLL-formatted annotations to spaCy binary format, replacing /path/to/<filename> with the path and file name of your annotations:

spaCy version 2:

spacy convert /path/to/<filename>.conll -c ner

spaCy version 3:

spacy convert /path/to/<filename>.conll -c conll .

For more information, see the spaCy documentation on Converting existing corpora and annotations on running spacy convert.

TSV

Results are stored in a tab-separated tabular file with column names specified by "from_name" and "to_name" values in the labeling configuration. Supports all project types.

YOLO

Export object detection annotations in the YOLOv3 and YOLOv4 format. Supports object detection labeling projects that use the RectangleLabels and KeyPointLabels tags.

note

If using KeyPointLabels, see the note under COCO.
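The numbers in the YOLO export example under the KeyPointLabels note can be related back to the Label Studio percent units. The sketch below is an illustration of that arithmetic and not the exporter's implementation: it assumes an unrotated rectangle, and the function names are hypothetical. The input values are copied from the Label Studio JSON example in the COCO section.

```python
def rectangle_to_yolo(value):
    """Convert a Label Studio rectangle (percent units, top-left origin)
    to normalized YOLO values: x_center, y_center, width, height."""
    x_center = (value["x"] + value["width"] / 2) / 100.0
    y_center = (value["y"] + value["height"] / 2) / 100.0
    return x_center, y_center, value["width"] / 100.0, value["height"] / 100.0


def keypoint_to_yolo(value):
    """Convert a Label Studio keypoint (percent units) to normalized x, y."""
    return value["x"] / 100.0, value["y"] / 100.0


# Values taken from the "animal" rectangle and "nose" keypoint example.
rect = {"x": 3.871829105473965, "y": 4.029850746268656,
        "width": 94.39252336448598, "height": 92.08955223880598}
nose = {"x": 6.675567423230974, "y": 20.597014925373134}

box = rectangle_to_yolo(rect)
point = keypoint_to_yolo(nose)
print(box, point)
```

Up to floating-point rounding, the results correspond to the first four numbers after the class index in the YOLO example line, followed by the first keypoint pair.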

Label Studio JSON format of annotated tasks

When you annotate data, Label Studio stores the output in JSON format. The raw JSON structure of each completed task follows this example:

{

"id": 1,

"created_at":"2021-03-09T21:52:49.513742Z",

"updated_at":"2021-03-09T22:16:08.746926Z",

"project":83,

"data": {

"image": "https://example.com/opensource/label-studio/1.jpg"

},

"annotations": [

{

"id": "1001",

"result": [

{

"from_name": "tag",

"id": "Dx_aB91ISN",

"source": "$image",

"to_name": "img",

"type": "rectanglelabels",

"value": {

"height": 10.458911419423693,

"rectanglelabels": [

"Moonwalker"

],

"rotation": 0,

"width": 12.4,

"x": 50.8,

"y": 5.869797225186766

}

}

],

"was_cancelled":false,

"ground_truth":false,

"created_at":"2021-03-09T22:16:08.728353Z",

"updated_at":"2021-03-09T22:16:08.728378Z",

"lead_time":4.288,

"result_count":0,

"task":1,

"completed_by":10

}

],

"predictions": [

{

"created_ago": "3 hours",

"model_version": "model 1",

"result": [

{

"from_name": "tag",

"id": "t5sp3TyXPo",

"source": "$image",

"to_name": "img",

"type": "rectanglelabels",

"value": {

"height": 11.612284069097889,

"rectanglelabels": [

"Moonwalker"

],

"rotation": 0,

"width": 39.6,

"x": 13.2,

"y": 34.702495201535505

}

}

]

},

{

"created_ago": "4 hours",

"model_version": "model 2",

"result": [

{

"from_name": "tag",

"id": "t5sp3TyXPo",

"source": "$image",

"to_name": "img",

"type": "rectanglelabels",

"value": {

"height": 33.61228406909789,

"rectanglelabels": [

"Moonwalker"

],

"rotation": 0,

"width": 39.6,

"x": 13.2,

"y": 54.702495201535505

}

}

]

}

]

}

Relevant JSON property descriptions

Review the full list of JSON properties in the API documentation.

| JSON property name | Description |

|---|---|

| id | Identifier for the labeling task from the dataset. |

| data | Data copied from the input data task format. See the documentation for Task Format. |

| project | Identifier for a specific project in Label Studio. |

| annotations | Array containing the labeling results for the task. |

| annotations.id | Identifier for the completed task. |

| annotations.lead_time | Time in seconds to label the task. |

| annotations.result | Array containing the results of the labeling or annotation task. |

| annotations.updated_at | Timestamp for when the annotation is created or modified. |

| annotations.completed_at | Timestamp for when the annotation is created or submitted. |

| annotations.completed_by | User ID of the user that created the annotation. Matches the list order of users on the People page on the Label Studio UI. See Specifying annotators during import for import format options. |

| annotations.was_cancelled | Boolean. Details about whether or not the annotation was skipped, or cancelled. |

| result.id | Identifier for the specific annotation result for this task. Use it to combine regions from different control tags, for example <Labels> and <Rectangle>. |

| result.parentID | (Optional) Reference to the parent region's result.id. It organizes regions into a hierarchical tree in the Regions panel. |

| result.from_name | Name of the tag used to label the region. See control tags. |

| result.to_name | Name of the object tag that provided the region to be labeled. See object tags. |

| result.type | Type of tag used to annotate the task. |

| result.value | Tag-specific value that includes details of the result of labeling the task. The value structure depends on the tag for the label. For more information, see Explore each tag. |

| drafts | Array of draft annotations. Follows similar format as the annotations array. Included only for tasks exported as a snapshot from the UI or using the API. |

| predictions | Array of machine learning predictions. Follows the same format as the annotations array, with one additional parameter. |

| predictions.score | The overall score of the result, based on the probabilistic output, confidence level, or other. |

| task.updated_at | Timestamp for when the task or any of its annotations or reviews are created, updated, or deleted. |
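As an illustration of how these properties fit together, the following sketch walks a JSON export and collects the labels for each result. It is a minimal example and not part of Label Studio: the function name is hypothetical, and it assumes that the labels sit in result.value under a key equal to result.type, which holds for the rectanglelabels and keypointlabels examples on this page but not necessarily for every tag.

```python
import json


def collect_labels(tasks):
    """Return (task id, from_name, labels) for every non-cancelled result."""
    rows = []
    for task in tasks:
        for annotation in task.get("annotations", []):
            if annotation.get("was_cancelled"):
                continue  # ignore skipped (cancelled) annotations
            for result in annotation.get("result", []):
                # Assumption: labels are stored under the key named by result.type.
                labels = result["value"].get(result["type"], [])
                rows.append((task["id"], result["from_name"], labels))
    return rows


# A trimmed-down export with one submitted and one cancelled annotation.
export = json.loads("""
[
  {
    "id": 1,
    "annotations": [
      {
        "was_cancelled": false,
        "result": [
          {"from_name": "tag", "to_name": "img", "type": "rectanglelabels",
           "value": {"x": 50.8, "y": 5.87, "rectanglelabels": ["Moonwalker"]}}
        ]
      },
      {"was_cancelled": true, "result": []}
    ]
  }
]
""")

rows = collect_labels(export)
print(rows)
```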


Units of image annotations

The x, y, width, and height of image annotations are provided as percentages of the overall image dimensions.

Use the following conversion formulas for x, y, width, height:

pixel_x = x / 100.0 * original_width

pixel_y = y / 100.0 * original_height

pixel_width = width / 100.0 * original_width

pixel_height = height / 100.0 * original_height

For example:

task = {
    "annotations": [{
        "result": [
            {
                "...": "...",
                "original_width": 600,
                "original_height": 403,
                "image_rotation": 0,
                "value": {
                    "x": 5.33,
                    "y": 23.57,
                    "width": 29.16,
                    "height": 31.26,
                    "rotation": 0,
                    "rectanglelabels": [
                        "Airplane"
                    ]
                }
            }
        ]
    }]
}


# convert from LS percent units to pixels
def convert_from_ls(result):
    # the original image size is needed to scale percentages to pixels
    if 'original_width' not in result or 'original_height' not in result:
        return None

    value = result['value']
    w, h = result['original_width'], result['original_height']

    if all(key in value for key in ['x', 'y', 'width', 'height']):
        return w * value['x'] / 100.0, \
               h * value['y'] / 100.0, \
               w * value['width'] / 100.0, \
               h * value['height'] / 100.0

    # the region has no x, y, width, height to convert
    return None


# convert from pixels to LS percent units
def convert_to_ls(x, y, width, height, original_width, original_height):
    return x / original_width * 100.0, y / original_height * 100.0, \
           width / original_width * 100.0, height / original_height * 100.0


# convert from LS
output = convert_from_ls(task['annotations'][0]['result'][0])
if output is None:
    raise Exception('Wrong convert')

pixel_x, pixel_y, pixel_width, pixel_height = output
print(pixel_x, pixel_y, pixel_width, pixel_height)

# convert back to LS
x, y, width, height = convert_to_ls(pixel_x, pixel_y, pixel_width, pixel_height, 600, 403)
print(x, y, width, height)

Manually convert JSON annotations to another format

You can run the Label Studio converter tool on a directory or file of completed JSON annotations using the command line or Python to convert the completed annotations from Label Studio JSON format into another format.

note

If you use versions of Label Studio earlier than 1.0.0, then this is the only way to convert your Label Studio JSON format annotations into another labeling format.

Access task data (images, audio, texts) outside of Label Studio for ML backends

The Machine Learning backend uses task data to make predictions, so you need to download that data on the Machine Learning backend side. Label Studio provides tools for downloading these resources in the label-studio-tools Python package. If you are using the official Label Studio Machine Learning backend, the label-studio-tools package is installed automatically with the other requirements.

Accessing task data from Label Studio instance

There are several ways of storing task resources (images, audio, texts, etc.) in Label Studio:

- Cloud storage

- External web links

- Uploaded files

- Local files directory

Label Studio stores uploaded files in a project-level structure. Each project has its own folder for files.

You can use label_studio_tools.core.utils.io.get_local_path to get task data. It transforms a path or URL from the task data into a local path.

For a local path, it returns the full local path. For a remote resource, it downloads the resource when the download_resources parameter is set.

Provide hostname and access_token to access external resources.

Accessing task data outside of Label Studio instance

You can use the label_studio_tools.core.utils.io.get_local_path method to get data for external links and cloud storage from a machine outside the Label Studio instance.

important

Don't forget to provide credentials.

If you mount the same disk on your machine or on an external box, you can also read the data with label_studio_tools.core.utils.io.get_local_path.

Another way to access the data is to use the link from the task together with an ACCESS_TOKEN (see the documentation for authentication). Concatenate the Label Studio hostname and the link from the task data, then add the access token to your request:

curl -X GET http://localhost:8080/api/projects/ -H 'Authorization: Token {YOUR_TOKEN}'

Frequently asked questions

Question #1: I have made a request and received the following API responses:

- No data was provided.

- 404 or 403 error code was returned.

Answer: First, check that you have network access to your Label Studio instance when you send API requests. You can run a test curl request with sample data.

Question #2: I tried to access files and received a FileNotFound error.

Answer:

- Check that you have mounted the same disk as your Label Studio instance, then check that the files exist in the Label Studio instance.

- Check the LOCAL_FILES_DOCUMENT_ROOT environment variable in your Label Studio instance and add it to the script that accesses the data.

Question #3: How do I modify the order of categories for COCO and YOLO exports?

Answer: By default, labels are sorted in alphabetical order. To change the order, add the category attribute to each <Label>. For example:

<Label value="abc" category="1" />

<Label value="def" category="2" />
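The ordering rule can be illustrated with a short sketch that reads a labeling config and derives a category ID for each label. This is not the exporter's code. The function name is hypothetical, numbering the alphabetical default from 0 is an assumption, and the sketch only handles configs where either every label or no label has a category attribute.

```python
import xml.etree.ElementTree as ET


def category_ids(labeling_config):
    """Map each label value to a category ID (illustrative only)."""
    labels = list(ET.fromstring(labeling_config).iter("Label"))
    if labels and all(label.get("category") is not None for label in labels):
        # Explicit order: use the category attribute from the config.
        return {label.get("value"): int(label.get("category")) for label in labels}
    # Default order: alphabetical; starting at 0 is an assumption.
    values = sorted(label.get("value") for label in labels)
    return {value: index for index, value in enumerate(values)}


default_config = """
<View>
  <Image name="image" value="$image"/>
  <RectangleLabels name="bbox" toName="image">
    <Label value="def"/>
    <Label value="abc"/>
  </RectangleLabels>
</View>
"""

explicit_config = """
<View>
  <Image name="image" value="$image"/>
  <RectangleLabels name="bbox" toName="image">
    <Label value="abc" category="1"/>
    <Label value="def" category="2"/>
  </RectangleLabels>
</View>
"""

print(category_ids(default_config))
print(category_ids(explicit_config))
```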