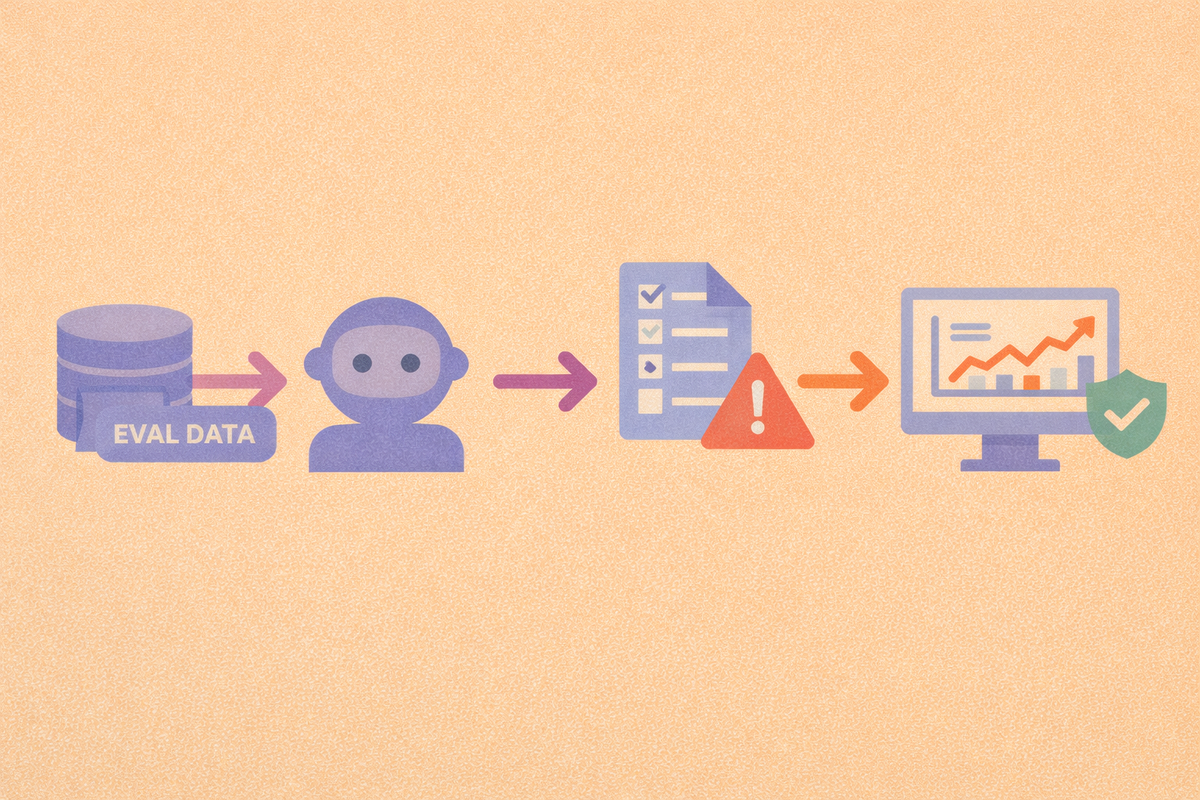

How to Set Up AI Evaluation Pipelines in a Machine Learning Workflow

An AI evaluation pipeline is a structured, repeatable process for measuring model performance over time. Well-designed pipelines help teams catch regressions early, understand why performance changes, and maintain trust in models as data and requirements evolve.

1) Establish evaluation datasets and data versioning

Every evaluation pipeline starts with stable evaluation data. This dataset serves as the reference point for comparing model performance across versions. Without it, evaluation results become difficult to interpret because changes in metrics may reflect changes in data rather than improvements or regressions in the model.

Evaluation datasets should be separate from training data and documented clearly. Teams need to know where the data came from, what it represents, and what it does not. Including edge cases and known problem scenarios improves the pipeline’s ability to surface meaningful failures.

Versioning is critical. Changes to evaluation data should be intentional and tracked explicitly. This allows teams to answer a key question later: Did the model change, or did the benchmark change? Without data versioning, long-term performance trends become unreliable.
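
One lightweight way to make benchmark changes visible is to store a content fingerprint next to every evaluation result. The sketch below is illustrative only: the `dataset_fingerprint` helper and the example records are assumptions, not part of any specific tool, and a dedicated data versioning system may be a better fit for large datasets.

```python
import hashlib
import json


def dataset_fingerprint(examples):
    """Return a SHA-256 hex digest that changes whenever the examples change.

    Hypothetical helper: assumes each example is a JSON-serializable dict.
    """
    digest = hashlib.sha256()
    # Serialize each example with sorted keys, then sort the serialized lines,
    # so neither key order nor record order affects the fingerprint.
    serialized = sorted(json.dumps(ex, sort_keys=True) for ex in examples)
    for line in serialized:
        digest.update(line.encode("utf-8"))
        digest.update(b"\n")
    return digest.hexdigest()


# Made-up records standing in for a small "core" evaluation set.
core_v1 = [
    {"id": "ex-1", "input": "2 + 2", "expected": "4"},
    {"id": "ex-2", "input": "capital of France", "expected": "Paris"},
]
core_v2 = core_v1 + [{"id": "ex-3", "input": "3 * 3", "expected": "9"}]

fingerprint_v1 = dataset_fingerprint(core_v1)
fingerprint_v2 = dataset_fingerprint(core_v2)
print("benchmark changed:", fingerprint_v1 != fingerprint_v2)
```

If two evaluation runs report different fingerprints, the metric difference cannot be attributed to the model alone.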

Over time, teams may maintain multiple evaluation sets, such as a stable “core” set for trend tracking and a rotating set that reflects newer data or emerging risks.

2) Automate evaluation runs and metric tracking

Once datasets are defined, the next step is automating evaluation runs. Each time a model changes, whether due to new training data, parameter updates, or architecture changes, the pipeline should run the same evaluations and produce comparable results.

Automation ensures consistency. Manual evaluation often leads to subtle differences in setup or measurement that make comparisons unreliable. Automated pipelines apply the same metrics, thresholds, and scoring logic every time.

Metrics should be tracked over time, not just reported once. Trend views make it easier to see gradual degradation or unexpected jumps. This is especially important when models evolve incrementally rather than through large releases.
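
As a rough illustration of trend tracking, the sketch below keeps a history of runs and measures how far a metric has fallen across a window of recent runs. The function names, the in-memory list, and the accuracy values are all assumptions made for the example; a real pipeline would more likely persist runs in an experiment tracker or database.

```python
def record_run(history, model_version, metrics):
    """Append one evaluation run to the in-memory history (hypothetical store)."""
    history.append({"model_version": model_version, **metrics})


def drop_over_window(history, metric, window=3):
    """Return how much `metric` fell across the last `window` runs.

    A positive value means the metric decreased. Assumes higher is better.
    """
    values = [run[metric] for run in history[-window:]]
    if len(values) < 2:
        return 0.0  # not enough runs to describe a trend
    return values[0] - values[-1]


history = []
# Made-up accuracy values: each release loses only about one point.
for version, accuracy in [("v1", 0.91), ("v2", 0.90), ("v3", 0.89), ("v4", 0.88)]:
    record_run(history, version, {"accuracy": accuracy})

total_drop = drop_over_window(history, "accuracy", window=4)
print(f"accuracy fell by {total_drop:.2f} over the last 4 runs")
```

No single step here would look alarming on its own, which is why the comparison spans several runs rather than only the most recent pair.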

Good pipelines also make regressions obvious. Instead of asking whether a model is “good,” teams can ask whether it is better or worse than the last version and in which dimensions.
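
A version-to-version comparison can be sketched as a function that labels each metric relative to the previous model. The metric names, the values, and the `tolerance` setting below are hypothetical, and the sketch assumes higher is better for every metric.

```python
def compare_to_baseline(baseline, candidate, tolerance=0.01):
    """Label each shared metric as 'better', 'worse', or 'unchanged'.

    Assumes higher is better; `tolerance` is an illustrative noise threshold.
    """
    report = {}
    for name in sorted(baseline.keys() & candidate.keys()):
        delta = candidate[name] - baseline[name]
        if delta > tolerance:
            report[name] = "better"
        elif delta < -tolerance:
            report[name] = "worse"
        else:
            report[name] = "unchanged"
    return report


# Made-up metrics for the previous and the new model version.
previous_metrics = {"accuracy": 0.90, "f1": 0.80, "recall_rare_class": 0.70}
new_metrics = {"accuracy": 0.93, "f1": 0.805, "recall_rare_class": 0.62}

comparison = compare_to_baseline(previous_metrics, new_metrics)
for metric_name, verdict in comparison.items():
    print(f"{metric_name}: {verdict}")
```

In this example the headline accuracy improves while recall on the rare class gets worse, which is the kind of per-dimension regression a single overall score would hide.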

3) Include failure analysis and qualitative review

Aggregate metrics are useful, but they rarely explain why performance changes. This is why effective evaluation pipelines include failure analysis as a first-class component.

Failure analysis surfaces individual examples where the model performs poorly. Reviewing these examples helps teams understand whether metric changes reflect meaningful improvements, edge-case regressions, or noise.
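
A minimal sketch of surfacing failures, assuming each evaluated example already carries a numeric `score` between 0 and 1. The field names, the `passing_score` threshold, and the sample results are hypothetical.

```python
def worst_examples(results, k=3, passing_score=1.0):
    """Return up to `k` failing examples, lowest score first.

    Assumes each result is a dict with a numeric 'score' field.
    """
    failures = [r for r in results if r["score"] < passing_score]
    return sorted(failures, key=lambda r: r["score"])[:k]


# Made-up per-example results from one evaluation run.
results = [
    {"id": "ex-1", "output": "Paris", "expected": "Paris", "score": 1.0},
    {"id": "ex-2", "output": "Lyon", "expected": "Paris", "score": 0.2},
    {"id": "ex-3", "output": "paris, France", "expected": "Paris", "score": 0.6},
    {"id": "ex-4", "output": "", "expected": "Paris", "score": 0.0},
    {"id": "ex-5", "output": "Paris", "expected": "Paris", "score": 1.0},
]

review_queue = worst_examples(results, k=2)
for example in review_queue:
    print(example["id"], repr(example["output"]), "expected", repr(example["expected"]))
```

Printing the output next to the expected answer is deliberate: the goal is to put concrete examples in front of a reviewer, not to produce another number.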

Qualitative review is especially important for tasks involving language, perception, or judgment. Two models with similar scores may behave very differently in practice. Seeing concrete outputs makes those differences visible.

Over time, failure analysis results often feed back into evaluation design. Repeated failure patterns can inform new test cases, refined metrics, or updated evaluation criteria.
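
One way to spot repeated failure patterns is to have reviewers tag each failure and then count the tags. The sketch below assumes a hypothetical `tags` field filled in during manual review; the tag names and the `min_count` cutoff are made up for illustration.

```python
from collections import Counter


def recurring_patterns(reviewed_failures, min_count=2):
    """Return (tag, count) pairs seen at least `min_count` times, most common first."""
    counts = Counter(
        tag for failure in reviewed_failures for tag in failure["tags"]
    )
    return [(tag, n) for tag, n in counts.most_common() if n >= min_count]


# Made-up failures, each tagged by a human reviewer.
reviewed_failures = [
    {"id": "ex-2", "tags": ["negation"]},
    {"id": "ex-4", "tags": ["negation", "long_input"]},
    {"id": "ex-7", "tags": ["date_format"]},
    {"id": "ex-9", "tags": ["negation"]},
    {"id": "ex-11", "tags": ["long_input"]},
]

patterns = recurring_patterns(reviewed_failures)
print(patterns)
```

Tags that keep recurring are candidates for new test cases or a dedicated metric; one-off tags can stay in the review notes.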

4) Expand pipelines to support continuous evaluation

As models move closer to production, evaluation pipelines often expand beyond pre-deployment testing. Continuous evaluation involves periodically re-running evaluations to detect changes caused by data drift, evolving user behavior, or shifting requirements.

This does not mean constant retraining or re-approval. Instead, it provides early warning signals. If performance degrades in specific slices or failure types increase, teams can investigate before issues escalate.
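
Slice-level monitoring can be sketched as computing accuracy per slice for two periods and flagging slices whose accuracy fell by more than a chosen amount. The `slice` and `correct` fields, the slice names, and the `max_drop` value are assumptions for illustration.

```python
from collections import defaultdict


def accuracy_by_slice(records):
    """Compute accuracy per slice from records with 'slice' and 'correct' fields."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for record in records:
        totals[record["slice"]] += 1
        correct[record["slice"]] += int(record["correct"])
    return {name: correct[name] / totals[name] for name in totals}


def degraded_slices(previous, current, max_drop=0.05):
    """Return slices whose accuracy fell by more than `max_drop` (illustrative threshold)."""
    return sorted(
        name
        for name in previous
        if name in current and previous[name] - current[name] > max_drop
    )


def make_records(slice_name, outcomes):
    """Build made-up evaluation records for one slice."""
    return [{"slice": slice_name, "correct": ok} for ok in outcomes]


last_period = make_records("mobile", [True, True, True, True]) + make_records(
    "desktop", [True, True, True, False]
)
this_period = make_records("mobile", [True, False, True, False]) + make_records(
    "desktop", [True, True, True, False]
)

alerts = degraded_slices(accuracy_by_slice(last_period), accuracy_by_slice(this_period))
print("slices to investigate:", alerts)
```

An alert here is a prompt to investigate, not an automatic trigger for retraining.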

Continuous evaluation also supports governance and accountability. When questions arise about why a model was approved or how it has changed over time, pipelines provide an audit trail of evaluation results and decisions.
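
An audit trail can be as simple as an append-only log with one record per evaluation decision. The sketch below uses a JSON Lines file; the `EvaluationRecord` fields, the file name, and the sample values are hypothetical, and a production system would likely add access control and immutable storage.

```python
import json
import os
import tempfile
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass
class EvaluationRecord:
    """One approval decision; all field names are illustrative assumptions."""
    model_version: str
    dataset_version: str
    metrics: dict
    decision: str
    reviewer: str
    recorded_at: str


def append_record(log_path, record):
    """Append one record as a single JSON line; existing lines are never rewritten."""
    with open(log_path, "a", encoding="utf-8") as log_file:
        log_file.write(json.dumps(asdict(record), sort_keys=True) + "\n")


def load_records(log_path):
    """Read the full audit trail back as a list of dicts."""
    with open(log_path, encoding="utf-8") as log_file:
        return [json.loads(line) for line in log_file if line.strip()]


# Demo in a temporary directory so the example leaves no files behind.
with tempfile.TemporaryDirectory() as tmp_dir:
    log_path = os.path.join(tmp_dir, "evaluation_audit.jsonl")
    append_record(
        log_path,
        EvaluationRecord(
            model_version="v4",
            dataset_version="core-v2",
            metrics={"accuracy": 0.93},
            decision="approved",
            reviewer="reviewer-a",
            recorded_at=datetime.now(timezone.utc).isoformat(),
        ),
    )
    loaded = load_records(log_path)

print(loaded[0]["model_version"], loaded[0]["decision"])
```

Recording the dataset version alongside the metrics keeps each decision interpretable later, even after the benchmark itself has changed.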

The goal of an evaluation pipeline is not automation for its own sake. It is about building confidence. Teams should be able to explain what they measured, what changed, and why a model was considered acceptable at each stage.

Frequently Asked Questions

Do small teams need evaluation pipelines?

Yes. Even simple pipelines improve consistency and reduce guesswork.

What’s the biggest benefit of an evaluation pipeline?

Early detection of regressions and clearer understanding of performance changes.

Can evaluation pipelines replace human review?

No. Pipelines support decision-making, but human judgment remains essential.