What are the differences between synthetic and real-world AI benchmarks?

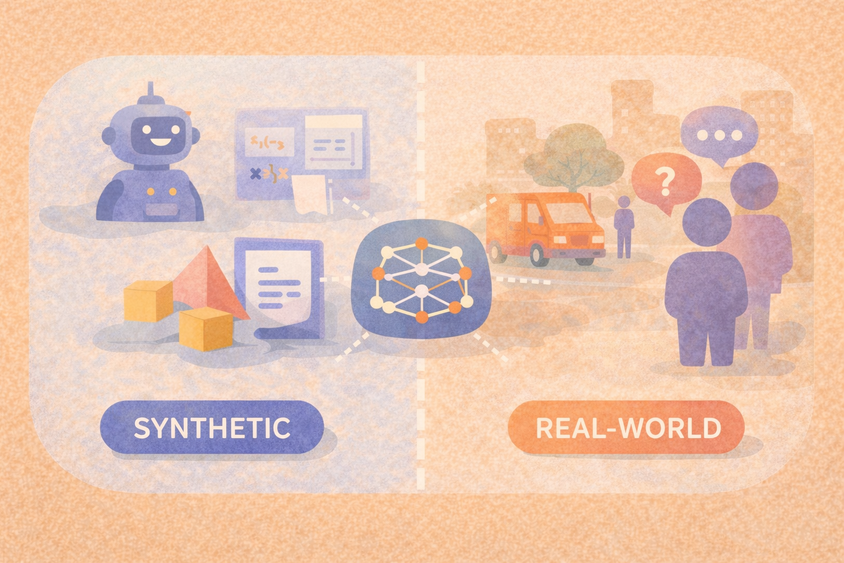

When people talk about “benchmarks,” they are often mixing two different families of tests:

- Synthetic benchmarks, built from generated or highly controlled data

- Real-world benchmarks, built from organic, messy data that comes from actual users or environments

Understanding the gap between them helps you avoid over-trusting pretty charts.

Synthetic benchmarks

Synthetic benchmarks use data that is created by rules, templates, simulation, or another model. Examples include:

- Template-generated prompts that test a specific reasoning skill

- Synthetic question–answer pairs built from structured data

- Simulated environments where agents interact with scripted worlds

Teams reach for synthetic benchmarks because they are easier to scale and control. You can generate thousands of examples, keep the distribution stable over time, and target specific abilities or failure modes.
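
As an illustration of the “generate thousands of examples” point, here is a minimal Python sketch of a template-based generator. The template, the names and the `generate_examples` function are hypothetical and not taken from any particular benchmark. The seeded local random generator keeps the distribution stable: the same seed always reproduces the same set, so scores stay comparable from run to run.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Example:
    prompt: str
    answer: str


# Hypothetical template targeting one skill: multi-step arithmetic word problems.
TEMPLATE = "{name} has {a} apples, buys {b} more, then gives away {c}. How many apples are left?"
NAMES = ["Ana", "Ben", "Chen", "Dara"]


def generate_examples(n: int, seed: int = 0) -> list[Example]:
    """Generate n template-based examples; the same seed yields the same set."""
    rng = random.Random(seed)  # local RNG, so the distribution is fixed per seed
    examples = []
    for _ in range(n):
        a, b = rng.randint(1, 50), rng.randint(1, 50)
        c = rng.randint(0, a + b)  # keep the correct answer non-negative
        prompt = TEMPLATE.format(name=rng.choice(NAMES), a=a, b=b, c=c)
        examples.append(Example(prompt, str(a + b - c)))
    return examples


if __name__ == "__main__":
    for ex in generate_examples(3, seed=42):
        print(ex.prompt, "->", ex.answer)
```

To target a different ability or failure mode, you would add another template and answer function. The seed and distribution of existing ones stay unchanged.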

System-level suites such as MLPerf sit somewhat closer to this side. They define reference models and datasets to represent common workloads, then measure training or inference speed under standardized conditions.
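
Measuring throughput under fixed conditions can be sketched as follows. This is a toy harness that rests on a few assumptions: a Python callable `infer` stands in for the model, the input list is fixed, and a few warm-up calls run before timing starts. It is not MLPerf's own harness. It also ignores the latency constraints, accuracy targets and run rules that standardized suites enforce.

```python
import statistics
import time
from typing import Any, Callable, Sequence


def measure_throughput(
    infer: Callable[[Any], Any],
    inputs: Sequence[Any],
    warmup: int = 5,
    repeats: int = 3,
) -> dict:
    """Return median samples/second over `repeats` timed passes through `inputs`.

    Toy harness only: no latency bounds, accuracy checks, or official run rules.
    """
    if not inputs or repeats < 1:
        raise ValueError("need non-empty inputs and at least one timed repeat")
    # Untimed warm-up calls let caches, JIT compilation, or lazy setup settle.
    for x in inputs[:warmup]:
        infer(x)
    rates = []
    for _ in range(repeats):
        start = time.perf_counter()
        for x in inputs:
            infer(x)
        elapsed = time.perf_counter() - start
        rates.append(len(inputs) / elapsed)
    return {"median_samples_per_sec": statistics.median(rates), "runs": rates}
```

Reporting the median of several passes, not a single run, makes the number less sensitive to one-off scheduling noise.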

The tradeoff is that synthetic tests often miss the nuance of real user behavior. A model might do well on clean, generated math questions but struggle with noisy, half-specified prompts in production.

Real-world benchmarks

Real-world benchmarks use data that originates in actual usage:

- Logs of real conversations

- Images from real devices and environments

- Naturally occurring documents such as forms, tickets, or reports

Datasets like , , and started as large collections of natural images, scenes, and speech, then went through standardization and annotation to become widely used benchmarks.

Real-world benchmarks help you see:

- How a model handles noise, edge cases, and ambiguous inputs

- Whether performance holds up across different subpopulations or contexts

- Failure patterns that only appear when people use the system in unexpected ways
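
Checking whether performance holds up across subpopulations usually starts with a per-slice breakdown. The sketch below assumes that each labeled real-world record carries hypothetical `slice`, `prediction` and `label` fields. The slice might be device type, locale or customer segment, and the 5-point margin is an arbitrary illustrative default.

```python
from collections import defaultdict
from typing import Iterable, Mapping


def accuracy_by_slice(records: Iterable[Mapping]) -> dict[str, float]:
    """Accuracy per slice; records need hypothetical 'slice', 'prediction', 'label' keys."""
    correct: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for r in records:
        total[r["slice"]] += 1
        correct[r["slice"]] += int(r["prediction"] == r["label"])
    return {s: correct[s] / total[s] for s in total}


def weakest_slices(acc: dict[str, float], overall: float, margin: float = 0.05) -> list[str]:
    """Slices whose accuracy falls more than `margin` below the overall score."""
    return sorted(s for s, a in acc.items() if a < overall - margin)
```

A healthy overall score can hide a slice that performs far worse, which is exactly the kind of gap organic data tends to expose.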

They are usually harder and more expensive to build and maintain, but they tell you much more about how reliable a model will be in practice.

How modern evaluation frameworks bridge the two

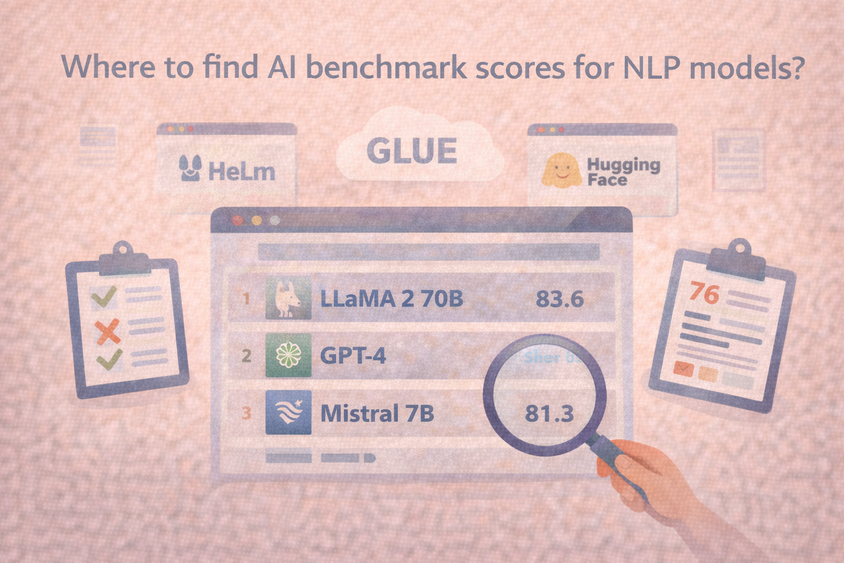

Modern evaluation frameworks try to combine both worlds. HELM, for example, organizes evaluations into “scenarios” and metrics, covering a mix of synthetic-style tasks and more realistic language-use settings.
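
A small grid evaluator illustrates the “scenarios × metrics” idea. This is not HELM's code or API. `evaluate_grid`, `exact_match` and `length_ratio` are hypothetical names, and each scenario is assumed to be a list of (input, reference) pairs. Running the same metrics over a clean synthetic scenario and a noisier log-derived one puts both kinds of benchmark side by side in one table.

```python
from typing import Callable

Scenario = list[tuple[str, str]]       # (input, reference) pairs
Metric = Callable[[str, str], float]   # (prediction, reference) -> score


def exact_match(pred: str, ref: str) -> float:
    """1.0 if prediction and reference match after trimming and lowercasing."""
    return float(pred.strip().lower() == ref.strip().lower())


def length_ratio(pred: str, ref: str) -> float:
    """Crude verbosity signal: prediction length relative to reference length."""
    return len(pred) / max(len(ref), 1)


def evaluate_grid(
    model: Callable[[str], str],
    scenarios: dict[str, Scenario],
    metrics: dict[str, Metric],
) -> dict[tuple[str, str], float]:
    """Mean score for every (scenario, metric) pair."""
    results = {}
    for s_name, examples in scenarios.items():
        preds = [model(x) for x, _ in examples]  # one model call per example
        for m_name, metric in metrics.items():
            scores = [metric(p, ref) for p, (_, ref) in zip(preds, examples)]
            results[(s_name, m_name)] = sum(scores) / len(scores) if scores else float("nan")
    return results
```

For example, consider a toy model that answers "paris" only when the input contains the exact word “France”. It scores 1.0 exact match on `("Capital of France?", "Paris")` but 0.0 on the misspelled `("capital of frnace pls", "Paris")`.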

For product teams, a practical approach is:

- Use synthetic-style tests during development for fast iteration and regression checks.

- Before rollout, run evaluations on curated real-world data from your own domain (for example, production logs or representative user queries).

- Refresh those real-world samples periodically as usage patterns change.
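
The regression-check step can be as simple as comparing a candidate's synthetic-suite scores with a stored baseline. Below is a minimal sketch. It assumes per-task scores where higher is better, and the one-point tolerance is a hypothetical default. In CI you would typically fail the build whenever the returned list is non-empty.

```python
def regression_check(
    baseline: dict[str, float],
    candidate: dict[str, float],
    tolerance: float = 0.01,
) -> list[str]:
    """Tasks where the candidate dropped more than `tolerance` below baseline.

    Scores are assumed to be higher-is-better. Tasks missing from the candidate
    run count as regressions so they are not silently skipped.
    """
    regressions = []
    for task, base_score in sorted(baseline.items()):
        new_score = candidate.get(task)
        if new_score is None or new_score < base_score - tolerance:
            regressions.append(task)
    return regressions
```

The tolerance absorbs small run-to-run noise, so only real drops trigger a failure.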

Frequently Asked Questions

Are synthetic benchmarks “bad”?

No. They are very useful, especially for stress tests and fine-grained analysis. Problems arise only when synthetic scores are treated as the whole story instead of one slice of it.

Do synthetic benchmarks ever match real-world performance?

They can correlate, especially when they are carefully designed, but they almost always miss some failure modes that only appear with real users and data.

How should I combine synthetic and real-world tests?

A simple recipe:

- Use synthetic tasks during development to catch regressions quickly.

- Before major changes or launches, evaluate on a curated set of real production examples.

- Periodically update that real-world set so it reflects current behavior and edge cases.
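
One way to refresh the real-world set is uniform sampling from a recent window of logs, plus a pinned list of edge cases you always keep. The sketch uses reservoir sampling (Algorithm R), so logs can be streamed without loading them all into memory. The `timestamp` field, the 30-day window and the function name are assumptions for illustration.

```python
import random
from datetime import datetime, timedelta
from typing import Iterable, Optional


def refresh_eval_set(
    logs: Iterable[dict],
    k: int,
    now: datetime,
    window_days: int = 30,
    pinned: Optional[list[dict]] = None,
    seed: int = 0,
) -> list[dict]:
    """Pinned edge cases plus k records sampled uniformly from the recent window.

    Each log record is assumed to have a hypothetical 'timestamp' datetime field.
    """
    rng = random.Random(seed)
    cutoff = now - timedelta(days=window_days)
    reservoir: list[dict] = []
    seen = 0
    for record in logs:
        if record["timestamp"] < cutoff:
            continue  # too old to reflect current usage
        seen += 1
        if len(reservoir) < k:
            reservoir.append(record)
        else:
            j = rng.randrange(seen)  # uniform in [0, seen)
            if j < k:
                reservoir[j] = record  # keeps every record with probability k/seen
    return list(pinned or []) + reservoir
```

A fixed seed makes any given refresh reproducible. Changing the seed or the window on the next refresh cycle produces a new sample.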

Where do frameworks like HELM and MLPerf fit in?

They provide standardized, shared scenarios and metrics. HELM focuses on language-model behavior across many scenarios and metrics, while MLPerf focuses on system performance for training and inference. You still need your own domain-specific evaluations on top.