What are the most reliable AI benchmarks used in industry?

When people talk about “reliable” AI benchmarks, they usually mean benchmarks that are:

- Widely adopted by both researchers and industry teams

- Transparent about their datasets and scoring

- Kept reasonably up to date as models improve

In practice, a small set of benchmarks shows up again and again in conversations about language, vision, and speech models.

Language benchmarks you’ll see everywhere

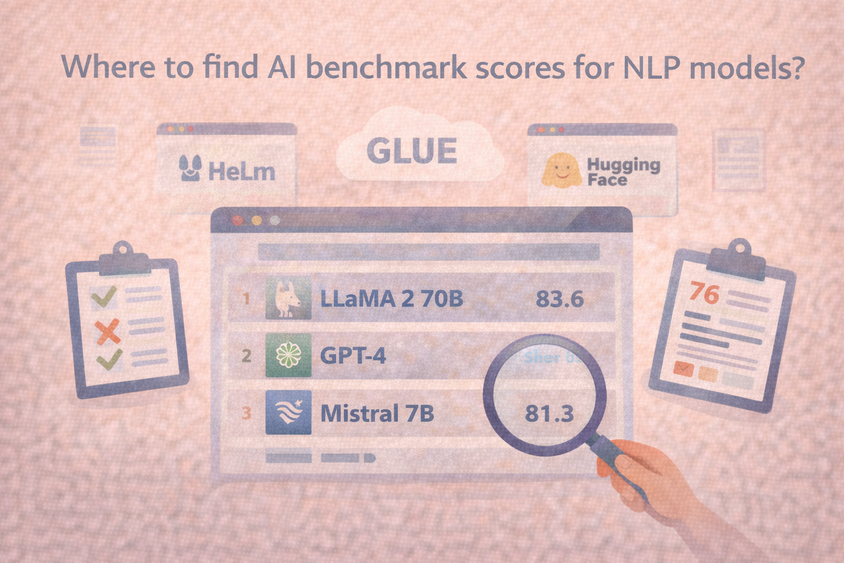

For language models, a few names come up constantly:

- GLUE evaluates general language understanding across nine tasks, including sentiment analysis, textual entailment, and sentence similarity. It was one of the first broad “NLU” benchmarks and still appears in papers and comparisons, although top models now score above its human baseline.

- SuperGLUE was introduced as a harder successor once models began to match human performance on GLUE. It uses more difficult tasks and keeps a public leaderboard, though top systems have since passed its human baseline as well.

- MMLU (Massive Multitask Language Understanding) tests models with multiple-choice questions across 57 subjects, from history to computer science, and shows up a lot in “general intelligence” comparisons for large models.
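Because MMLU is multiple-choice, its headline number is accuracy. Here is a minimal sketch of the scoring step, assuming you have already extracted a single answer letter from each model output. The record keys (`subject`, `answer`, `prediction`) and the function name are hypothetical, not MMLU's file format. The sketch reports both a micro average (every question weighted equally) and a macro average (every subject weighted equally), since different evaluations may use either one:

```python
from collections import defaultdict


def mmlu_style_accuracy(records):
    """Score multiple-choice predictions grouped by subject.

    Each record is a dict with hypothetical keys:
      'subject':    e.g. "high_school_physics"
      'answer':     the gold letter, e.g. "C"
      'prediction': the letter extracted from the model's output
    Returns (per-subject accuracy, micro average, macro average).
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for r in records:
        subject = r["subject"]
        total[subject] += 1
        # Compare letters case-insensitively, ignoring stray whitespace.
        if r["prediction"].strip().upper() == r["answer"].strip().upper():
            correct[subject] += 1
    if not total:
        raise ValueError("no records to score")
    per_subject = {s: correct[s] / total[s] for s in total}
    # Micro: every question counts equally.
    micro = sum(correct.values()) / sum(total.values())
    # Macro: every subject counts equally, regardless of how many questions it has.
    macro = sum(per_subject.values()) / len(per_subject)
    return per_subject, micro, macro
```

With three correct history answers and one wrong physics answer, micro accuracy is 0.75 but macro accuracy is 0.5. Prompt format and answer extraction also move the score, so compare numbers only when you know how they were produced.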

On top of those individual benchmarks, you see “umbrella” efforts that try to evaluate models across many tasks and metrics:

- HELM (Holistic Evaluation of Language Models) from Stanford CRFM is a living benchmark that spans multiple scenarios and metrics like accuracy, robustness, fairness, and efficiency, with an open-source framework and public leaderboards.

These have become reliable anchors because they are transparent, well-documented, and widely discussed in papers, blog posts, and product announcements.
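Because HELM reports many metrics across many scenarios, it needs a way to summarize them, and its leaderboards use win rates across scenarios for that. The function below is a simplified sketch of the idea under stated assumptions: higher scores are better, ties count as half a win, and `mean_win_rate` is a hypothetical name. It is not HELM's own code:

```python
def mean_win_rate(scores):
    """Average head-to-head win rate per model (simplified sketch).

    `scores` maps scenario -> {model: score}, where higher is better.
    In each scenario, a model's win rate is the fraction of the other
    models it beats there; ties count as half a win (an assumption of
    this sketch). The result averages that over the scenarios in which
    the model appears.
    """
    totals = {}
    counts = {}
    for per_model in scores.values():
        for model, score in per_model.items():
            others = [o for o in per_model if o != model]
            if not others:
                continue  # nothing to compare against in this scenario
            wins = 0.0
            for other in others:
                if score > per_model[other]:
                    wins += 1.0
                elif score == per_model[other]:
                    wins += 0.5
            totals[model] = totals.get(model, 0.0) + wins / len(others)
            counts[model] = counts.get(model, 0) + 1
    return {m: totals[m] / counts[m] for m in totals}
```

For example, if model A wins a QA scenario and model B wins a summarization scenario, the two can tie overall, which is a reminder to read the per-scenario results and not just the summary. Metrics where lower is better (error rates, latency) would need their sign flipped before being passed in.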

Vision benchmarks that shaped deep learning

In computer vision, a few benchmarks essentially defined the field:

- ImageNet, and specifically the ImageNet Large Scale Visual Recognition Challenge (ILSVRC), is the classic dataset and competition that drove early deep learning progress on image classification.

- COCO (Common Objects in Context) is the go-to dataset and benchmark for object detection, instance segmentation, and captioning. It includes hundreds of thousands of images and standardized evaluation metrics like mean Average Precision (mAP).

If you read any serious paper or product spec about detection, there is a good chance you’ll see COCO numbers.
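COCO's detection metrics rest on intersection over union (IoU): a predicted box counts as a hit only if it overlaps a not-yet-matched ground-truth box by at least some IoU threshold, and the headline AP averages over thresholds from 0.50 to 0.95 in steps of 0.05. Here is a minimal sketch of the IoU and matching steps for one image and one category, assuming boxes in COCO's `[x, y, width, height]` format. The function names are illustrative. This is not the full metric: the reference `COCOeval` class in pycocotools also builds precision-recall curves across the whole dataset, handles crowd annotations, and caps detections per image.

```python
def iou_xywh(a, b):
    """Intersection over union of two boxes in COCO's [x, y, width, height] format."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    # Width and height of the overlap rectangle (zero if the boxes don't overlap).
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


# The IoU thresholds behind COCO's headline AP: 0.50, 0.55, ..., 0.95.
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def true_positives(preds, gts, threshold):
    """Count predictions that match a ground-truth box at `threshold`.

    `preds` is a list of (box, score) pairs and `gts` a list of boxes, all
    for one image and one category. Higher-scoring predictions claim matches
    first, and each ground-truth box can be matched at most once.
    """
    matched = set()
    tp = 0
    for box, _score in sorted(preds, key=lambda p: p[1], reverse=True):
        best_j, best_iou = None, threshold
        for j, gt in enumerate(gts):
            if j in matched:
                continue
            overlap = iou_xywh(box, gt)
            if overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j is not None:
            matched.add(best_j)
            tp += 1
    return tp
```

For a single prediction `[0, 0, 10, 10]` against ground truth `[0, 0, 10, 8]`, IoU is 0.8, so it counts as a true positive at thresholds up to 0.80 and as a miss above that. That is why AP averaged over 0.50 to 0.95 rewards tighter boxes than AP at 0.50 alone.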

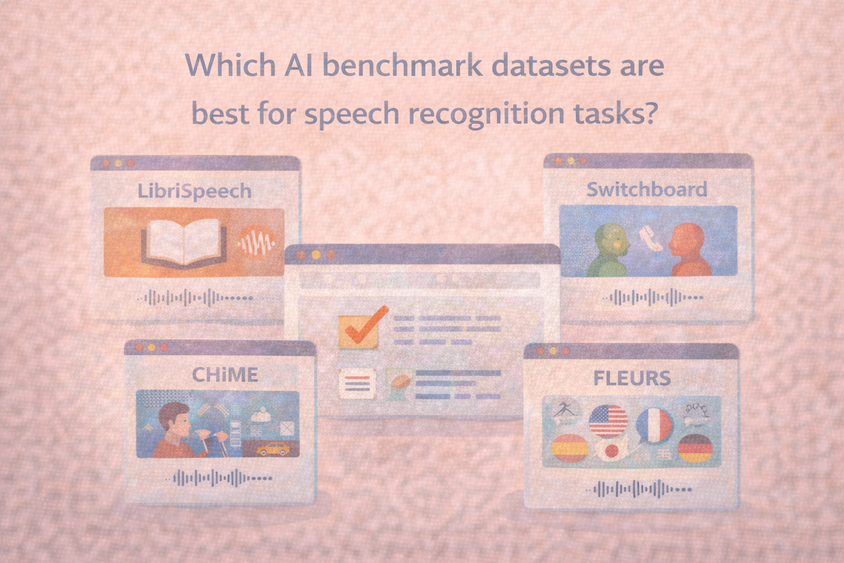

Speech and audio

For speech recognition, LibriSpeech, a corpus of roughly 1,000 hours of read English speech drawn from audiobooks, shows up almost everywhere as the default benchmark, with results reported as word error rate (WER).

There are many other speech benchmarks, but LibriSpeech is still the “common language” for quick comparisons.
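WER is the word-level edit distance between the reference transcript and the model's output, divided by the number of reference words: (substitutions + deletions + insertions) / reference words. Here is a minimal sketch, assuming both strings have already been normalized the same way (casing, punctuation), which matters a lot in practice. The function names are illustrative:

```python
def word_edits(reference: str, hypothesis: str) -> int:
    """Minimum word substitutions + deletions + insertions (Levenshtein on words)."""
    ref = reference.split()
    hyp = hypothesis.split()
    # At the start of step i, prev[j] is the edit distance between ref[:i-1] and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion of ref[i-1]
                curr[j - 1] + 1,     # insertion of hyp[j-1]
                prev[j - 1] + cost,  # substitution, or a match when cost is 0
            )
        prev = curr
    return prev[len(hyp)]


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER for a single utterance."""
    n = len(reference.split())
    if n == 0:
        raise ValueError("reference transcript is empty")
    return word_edits(reference, hypothesis) / n


def corpus_wer(pairs) -> float:
    """WER over (reference, hypothesis) pairs: total errors / total reference words."""
    errors = 0
    words = 0
    for ref, hyp in pairs:
        errors += word_edits(ref, hyp)
        words += len(ref.split())
    if words == 0:
        raise ValueError("no reference words")
    return errors / words
```

The corpus-level function follows the common convention of summing errors over all utterances before dividing, rather than averaging per-utterance WERs, so long utterances carry more weight. Because insertions count as errors, WER can exceed 1.0.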

System and hardware benchmarks

On the systems side, MLPerf (run by MLCommons) is the closest thing to an industry standard. It measures how fast hardware and software stacks can train and run a set of reference models, and it’s used heavily by chip vendors and cloud providers.

When vendors talk about “record-setting performance” on AI workloads, they are often citing MLPerf numbers.
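Official MLPerf results come from its published rules and the LoadGen load generator, so a stopwatch cannot reproduce them. For quick internal comparisons, though, the quantities MLPerf Inference reports, such as throughput and tail latency, are easy to approximate. Here is a minimal sketch that times a hypothetical `run_query` callable one query at a time. The nearest-rank percentile definition and the function names are assumptions of this sketch:

```python
import math
import time


def percentile(sorted_values, p):
    """Nearest-rank percentile of an already-sorted list, with p in (0, 100]."""
    if not sorted_values:
        raise ValueError("no values")
    rank = max(1, math.ceil(p * len(sorted_values) / 100))
    return sorted_values[rank - 1]


def measure(run_query, queries):
    """Time `run_query` on each query sequentially.

    `run_query` is a hypothetical callable wrapping your model. This is a
    one-at-a-time loop, not a reproduction of any MLPerf scenario.
    """
    latencies = []
    start = time.perf_counter()
    for q in queries:
        t0 = time.perf_counter()
        run_query(q)
        latencies.append(time.perf_counter() - t0)
    # Guard against a zero-length interval on very fast, very short runs.
    elapsed = max(time.perf_counter() - start, 1e-9)
    latencies.sort()
    return {
        "throughput_qps": len(latencies) / elapsed,
        "p50_s": percentile(latencies, 50),
        "p90_s": percentile(latencies, 90),
        "p99_s": percentile(latencies, 99),
    }
```

Discard a few warm-up queries before timing, since the first calls often include one-time costs such as compilation or cache loading. A sequential loop like this only captures one-at-a-time latency. Concurrent or batched serving needs a proper load generator.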

How to think about “reliable”

A benchmark can be popular and still be a poor fit for your use case. As a rule of thumb:

- Use GLUE, SuperGLUE, MMLU, and HELM for a shared baseline on language models

- Use ImageNet, COCO, and LibriSpeech for a shared baseline in vision and speech

- Use MLPerf when you care about system-level training and inference performance

Then layer your own domain-specific tests on top. The “reliability” comes from combining widely recognized benchmarks with evaluations that reflect your real-world data and constraints.
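A domain-specific suite can be as small as a list of prompts with pass/fail checks written by someone who knows the domain. Here is a minimal sketch, assuming a hypothetical `model` callable that maps a prompt string to an output string. The `Case` class, the tags, the example prompts, and the checks (including the “30 days” refund policy) are all illustrative, not a standard format:

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, Tuple


@dataclass
class Case:
    """One hypothetical domain test: a prompt plus a pass/fail check."""
    name: str
    prompt: str
    check: Callable[[str], bool]  # returns True if the output is acceptable
    tag: str = "general"          # groups related cases in the report


def run_suite(model: Callable[[str], str], cases: Iterable[Case]) -> Dict[str, float]:
    """Return the pass rate per tag for `model` on `cases`."""
    counts: Dict[str, Tuple[int, int]] = {}
    for case in cases:
        passed = case.check(model(case.prompt))
        ok, total = counts.get(case.tag, (0, 0))
        counts[case.tag] = (ok + int(passed), total + 1)
    return {tag: ok / total for tag, (ok, total) in counts.items()}


# Illustrative cases for a hypothetical support chatbot.
CASES = [
    Case("refund_window", "What is the refund window?",
         lambda out: "30 days" in out, tag="policy"),
    Case("escalation", "I want to talk to a manager.",
         lambda out: "manager" in out.lower(), tag="policy"),
    Case("greeting", "Say hello.",
         lambda out: out.lower().startswith("hello"), tag="tone"),
]
```

Reporting pass rates per tag makes a regression in one area visible instead of hiding it inside a single average. Simple string checks like these are brittle for open-ended outputs, so they work best for facts and rules your product must get right.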

Frequently Asked Questions

Do I need to use all of these benchmarks?

No. Most teams pick one or two that match their domain and then add custom tests. For example, a chatbot team might focus on MMLU plus their own conversation logs.

Are these benchmarks still useful now that models are so large?

Yes. Some tasks are saturated, but the benchmarks still provide a shared reference point and historical context. Newer variants like MMLU-Pro and updated MLPerf suites continue to increase difficulty or reflect new workloads.

What if my use case doesn’t look like any of these datasets?

That’s common. Use the public benchmarks for rough comparison, then build a smaller, targeted benchmark that looks like your actual data and decisions.

Is there a single “best” benchmark?

No. Benchmarks are good at answering specific questions. “Best on COCO” is not the same as “best for radiology images” or “best for call-center transcripts.”