What is the process for submitting AI models to benchmarking challenges?

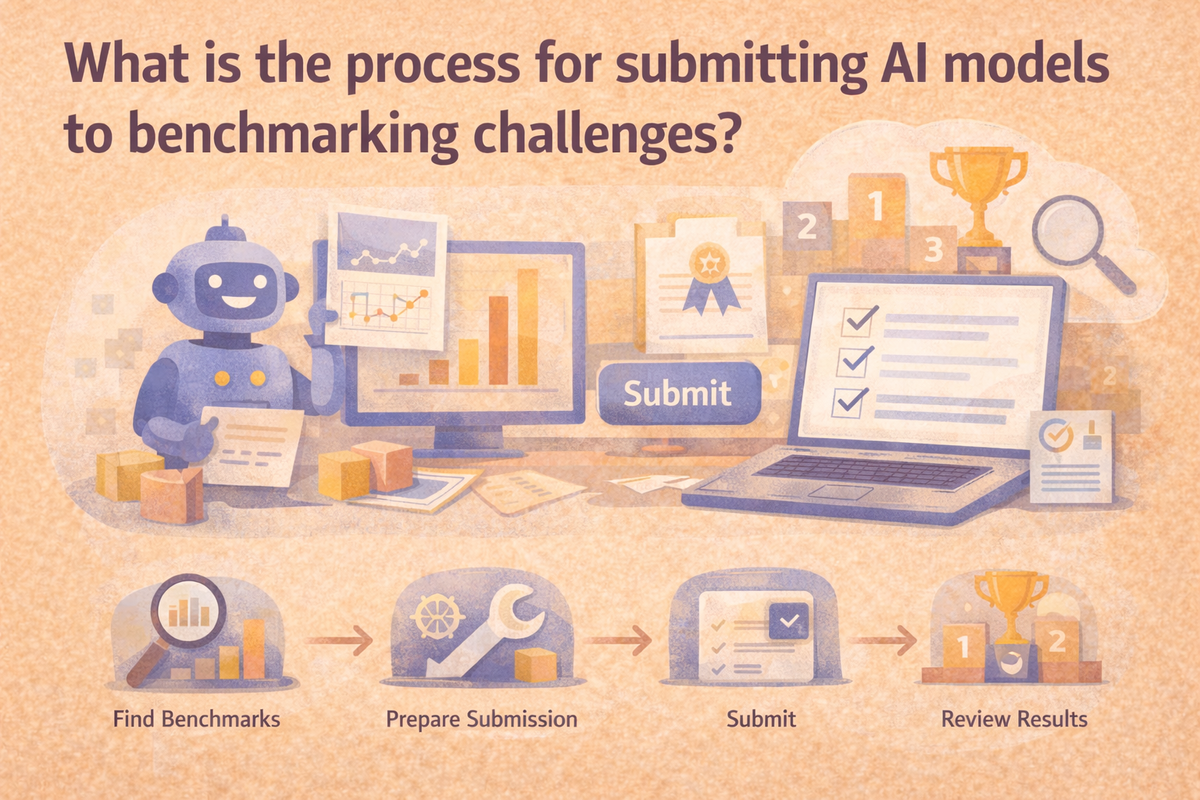

Submitting an AI model to a benchmarking challenge is less about “upload the model” and more about proving your system can be evaluated fairly. In most challenges, you pick a track, follow strict rules about what’s allowed, package your system in a required format (predictions file, container, or hosted endpoint), and submit results plus metadata so the organizers can score and verify your run.

Submitting AI models in practice

Start by choosing the right benchmark and track, because the rules define what the evaluation is actually measuring. Many challenges split submissions into tracks such as open versus closed (whether you can use external data or tools), or into domain-specific tracks with different constraints. If your system relies on retrieval, proprietary data, or third-party APIs, you need a track that explicitly permits those. This step matters because the fastest way to get disqualified is to build a great system that violates the track requirements.

Next, read the evaluation requirements like you’re integrating an API. Benchmarks typically require very specific input and output formats, and those details can shape your implementation. Some expect you to submit a predictions file with exact IDs and a strict schema. Others require a container or code package so the organizers can run your model in a controlled environment. Some evaluate by calling your hosted endpoint and measuring not just quality, but also response behavior under load. The “submission method” is not an afterthought—it determines what you need to ship and what can go wrong.
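
As an illustration of the predictions-file style, here is a minimal sketch that serializes model outputs as JSON Lines. The field names `id` and `prediction` and the helper `to_jsonl` are assumptions made for this example; a real challenge defines its own schema, so check its submission guide before reusing any of this.

```python
import json


def to_jsonl(predictions: dict[str, str]) -> str:
    """Serialize {example_id: prediction} as JSON Lines, one object per line.

    The "id" / "prediction" keys are a hypothetical schema, not a standard.
    """
    lines = []
    # Sort by ID so the same predictions always produce the same file.
    for example_id in sorted(predictions):
        row = {"id": example_id, "prediction": predictions[example_id]}
        lines.append(json.dumps(row, ensure_ascii=False))
    return "\n".join(lines) + "\n"
```

Writing the returned string to disk with UTF-8 encoding gives a file that is stable across runs, which makes it easier to diff two submissions.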

Once you know the submission style, prepare your model or system so that it is reproducible and compliant. That usually means locking versions, capturing the exact configuration used (prompt template, decoding settings, retrieval parameters, or checkpoint hash), and keeping your data handling clean. Benchmarks often include hidden test sets, private evaluation splits, or rules meant to prevent leakage. Treat those constraints as part of the challenge: you’re demonstrating performance under conditions you can’t optimize against directly.
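
One way to capture the exact configuration is a small run manifest stored next to each submission. The sketch below is illustrative only: the manifest fields and the helper names `sha256_file` and `build_manifest` are assumptions, not something any particular benchmark requires.

```python
import hashlib
import json
import platform


def sha256_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large checkpoints never load fully into memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(config: dict, checkpoint_path: str) -> dict:
    """Record what is needed to rerun inference the same way (hypothetical fields)."""
    # Canonical JSON: key order does not change the config hash.
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return {
        "python_version": platform.python_version(),
        "config": config,
        "config_sha256": hashlib.sha256(canonical.encode("utf-8")).hexdigest(),
        "checkpoint_sha256": sha256_file(checkpoint_path),
    }
```

Saving the manifest as JSON alongside the predictions lets you later confirm which checkpoint and settings produced a given score.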

Then comes the part that trips up most first-time submissions: generating outputs that match the benchmark’s requirements perfectly. Even a strong model can fail if IDs don’t match, outputs aren’t normalized the expected way, or the file format is slightly off. A good habit is to validate your submission before you upload it—check the schema, confirm counts and IDs, and spot-check a handful of examples end to end. This is the difference between meaningful feedback and a wasted submission slot.
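
The pre-upload checks described above can be sketched as a small validator. It assumes a hypothetical JSON Lines format in which every row has exactly two string fields, `id` and `prediction`, and that you have the set of expected IDs from the benchmark's input file; adapt both assumptions to the actual rules.

```python
import json


def validate_submission(jsonl_text: str, expected_ids: set[str]) -> list[str]:
    """Return a list of human-readable problems; an empty list means no issues found."""
    errors = []
    seen = set()
    for line_no, line in enumerate(jsonl_text.splitlines(), start=1):
        if not line.strip():
            continue  # tolerate blank lines
        try:
            row = json.loads(line)
        except json.JSONDecodeError:
            errors.append(f"line {line_no}: not valid JSON")
            continue
        # Schema check: exactly the two assumed keys, both strings.
        if not isinstance(row, dict) or set(row) != {"id", "prediction"}:
            errors.append(f"line {line_no}: expected exactly the keys 'id' and 'prediction'")
            continue
        if not isinstance(row["id"], str) or not isinstance(row["prediction"], str):
            errors.append(f"line {line_no}: 'id' and 'prediction' must be strings")
            continue
        if row["id"] in seen:
            errors.append(f"line {line_no}: duplicate id {row['id']!r}")
        seen.add(row["id"])
    # Count and ID checks against the benchmark's inputs.
    missing = expected_ids - seen
    unexpected = seen - expected_ids
    if missing:
        errors.append(f"{len(missing)} expected ids missing, e.g. {sorted(missing)[:3]}")
    if unexpected:
        errors.append(f"{len(unexpected)} unexpected ids, e.g. {sorted(unexpected)[:3]}")
    return errors
```

A check like this catches structural problems only; spot-checking a few examples by hand is still needed to confirm that the outputs are normalized the way the benchmark expects.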

After you submit, you’ll typically get a score (sometimes multiple metrics) and a status. If the submission fails validation, fix formatting and compliance first; don’t tune the model until the pipeline is correct. Once your pipeline is stable, iteration becomes straightforward: make a change, run inference the same way, submit, and compare results. If there’s a public leaderboard plus a private final evaluation, avoid chasing tiny public score gains that might not hold up on the hidden set; aim for improvements that make sense across error categories.
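
To judge whether a change helps across error categories, you can compare two runs on your own labeled development set, since hidden test labels are not available to you. The following is a rough sketch: the category labels, the `(category, is_correct)` input shape, and the function names are all assumptions for illustration.

```python
from collections import defaultdict


def accuracy_by_category(results):
    """results: iterable of (category, is_correct) pairs from a local dev set."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for category, is_correct in results:
        totals[category] += 1
        correct[category] += int(is_correct)
    return {category: correct[category] / totals[category] for category in totals}


def compare_runs(baseline, candidate):
    """Per-category accuracy change (candidate minus baseline)."""
    base = accuracy_by_category(baseline)
    cand = accuracy_by_category(candidate)
    # A category absent from one run is treated as 0.0 accuracy for that run.
    return {
        category: cand.get(category, 0.0) - base.get(category, 0.0)
        for category in sorted(set(base) | set(cand))
    }
```

A gain in one category paired with a drop in another is a hint that an overall score change may not hold up on the private evaluation.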

Frequently Asked Questions

Do I submit the model itself or just outputs?

It depends on the challenge. Some accept a predictions file, others require a container or code, and some evaluate a hosted endpoint.

What’s the most common reason submissions fail?

Formatting and compliance issues: wrong IDs, invalid schema, missing required metadata, or violating track constraints.

Can I use retrieval or external tools in my submission?

Only if the rules allow it. Many benchmarks have separate “open” tracks for systems that use external data or tools.