Where can I find AI benchmark scores for natural language processing models?

When you’re shopping for an NLP model, benchmark scores are usually the first thing you reach for. They’re easy to cite, easy to compare, and they give you a shortcut to “what’s working right now.” The catch is that benchmark numbers are only as useful as the context around them. Two scores can look comparable while being produced with different prompt templates, different dataset revisions, or even different model variants.

A good way to approach this is to treat leaderboards as a map, not a verdict. Use them to narrow your options, then confirm the details that make those numbers meaningful for your use case.

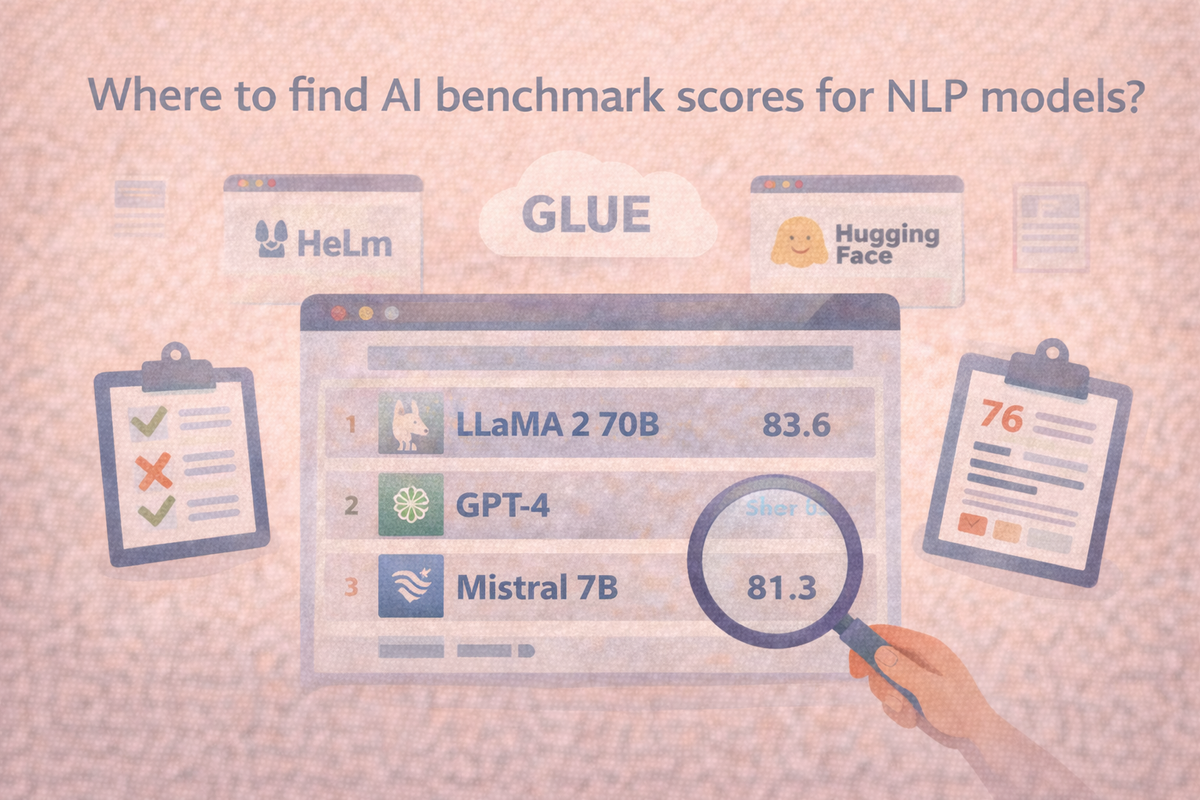

Start with broad model leaderboards for fast comparisons

If you want a quick sense of how a large set of models stacks up, broad leaderboards are the fastest entry point. They’re designed to answer the “what’s competitive right now?” question without requiring you to read a dozen papers first. They work best for early exploration, especially when you’re comparing open models and want a consistent starting point.

Two helpful places to begin are the Open LLM Leaderboard (Hugging Face) and the broader Hugging Face Leaderboards hub. Both make it easier to scan rankings, then dig into how a score was produced. When you click through, pay attention to what suite was used, which tasks are included, and whether the evaluation configuration is stable across runs.

Use benchmark-owned leaderboards when you need canonical scores

Sometimes you are not trying to browse; you are trying to verify. Maybe you saw a score in a blog post or paper and want to check whether it is being cited accurately. In those cases, the most dependable source is often the benchmark’s own leaderboard because it reflects the benchmark’s official reporting conventions and task definitions.

For example, the GLUE leaderboard remains a well-known reference point for language understanding tasks. Even when you do not plan to use GLUE as your primary decision signal, it can be a useful anchor for understanding what a model claims to be good at and how those results have been historically presented.

Check “living” evaluation suites for broader, scenario-based reporting

Many modern NLP use cases do not fit neatly into a single benchmark. You may care about robustness, safety-adjacent behavior, or performance across different prompt styles and evaluation scenarios. That’s where broader evaluation suites can be useful, since they publish results across multiple settings and often make changes visible over time.

A strong example is Stanford CRFM HELM, along with its regularly updated HELM leaderboards. These are especially helpful when you want something more descriptive than “Model A beats Model B,” because the reporting tends to show how results vary by scenario. As you review them, focus on whether the benchmark tasks resemble your workload, since “coverage” can matter more than rank.

For embedding models, use embedding-specific benchmarks instead of LLM leaderboards

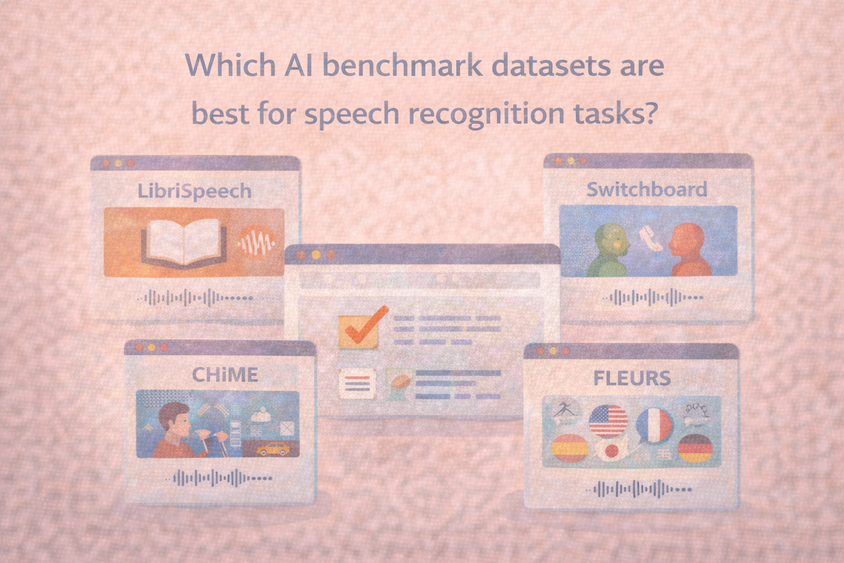

Not all NLP models are used for generation. If you’re choosing an embedding model for semantic search, clustering, or retrieval, you’ll get more relevant signal from embedding-focused evaluations than from general LLM leaderboards. Embedding performance depends on different task families, and the best model for retrieval may not look impressive on generation-heavy suites.

The MTEB leaderboard is a common place to compare embedding models because it includes many retrieval and classification-style tasks that reflect how embeddings are actually used. For background and context, the MTEB overview on Hugging Face is also helpful, especially if you want to understand what tasks are included and what “good performance” means across categories.

| If you’re trying to… | Start here | Why this helps |
| --- | --- | --- |
| Compare many open models quickly | Open LLM Leaderboard | Fast rankings across a shared suite |
| Verify a benchmark score that someone cited | GLUE leaderboard | Reflects the benchmark’s official reporting conventions and task definitions |
| Understand results across different scenarios | HELM leaderboards | Multi-scenario evaluation and updated reporting |
| Compare embedding models for retrieval/search | MTEB leaderboard | Embedding-focused tasks and breakdowns |

What to verify before you trust a benchmark score

Once you’ve found a score, the next step is confirming whether it is comparable to the numbers you’re placing beside it. Benchmarking often fails in subtle ways, and those subtleties tend to show up in exactly the places most people do not look.

Start by confirming the benchmark version and evaluation date. Leaderboards evolve, tasks get revised, and “latest” results can diverge from older citations. Next, check the model variant. Base, instruction-tuned, fine-tuned, and quantized versions can behave very differently even when their names look nearly identical. Finally, look for evaluation settings that can shift outcomes, including prompt templates, decoding parameters, and metric definitions. If those details are not visible, treat the score as directional rather than definitive.
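
One way to make this checklist concrete is to record each score together with its context and compare the context before comparing the numbers. The sketch below is illustrative only: `ScoreRecord`, `comparability_report`, the field names, and the sample values are all hypothetical and do not come from any leaderboard's API or data.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class ScoreRecord:
    """A reported score plus the context needed to compare it (hypothetical schema)."""
    model: str
    score: float
    benchmark: Optional[str] = None
    benchmark_version: Optional[str] = None
    model_variant: Optional[str] = None    # e.g. "base", "instruct", "quantized"
    prompt_template: Optional[str] = None  # e.g. "5-shot"
    decoding: Optional[str] = None         # e.g. "greedy"
    metric: Optional[str] = None           # e.g. "accuracy"


# Context that should match before two scores are placed side by side.
COMPARED_FIELDS = ("benchmark", "benchmark_version", "prompt_template", "decoding", "metric")
# Context that should at least be visible for each score.
REQUIRED_FIELDS = COMPARED_FIELDS + ("model_variant",)


def comparability_report(a: ScoreRecord, b: ScoreRecord) -> Dict[str, object]:
    """List the context fields that are missing or differ between two scores."""
    missing: List[str] = sorted(
        {name for rec in (a, b) for name in REQUIRED_FIELDS if getattr(rec, name) is None}
    )
    mismatched: List[str] = [
        name
        for name in COMPARED_FIELDS
        if getattr(a, name) is not None
        and getattr(b, name) is not None
        and getattr(a, name) != getattr(b, name)
    ]
    verdict = "comparable" if not missing and not mismatched else "directional only"
    return {"missing": missing, "mismatched": mismatched, "verdict": verdict}


if __name__ == "__main__":
    # Made-up records for illustration; not real benchmark results.
    cited = ScoreRecord("model-a", 0.71, "example-bench", "v1", "instruct", "5-shot", "greedy", "accuracy")
    latest = ScoreRecord("model-b", 0.74, "example-bench", "v2", "instruct", "5-shot", None, "accuracy")
    print(comparability_report(cited, latest))
```

Any missing or mismatched field downgrades the comparison to "directional only", which mirrors the rule of thumb above: when the details are not visible, the score is a hint rather than a result.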

At a practical level, the goal is to use leaderboards to narrow down candidates, then validate performance on a small, versioned internal test set that reflects your prompts, your data, and your constraints. That step is what turns benchmark browsing into a decision you can defend.
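
A small, versioned internal test set can be as simple as a list of prompt and expected-answer pairs, a version label, and a content fingerprint so that two runs can be shown to use identical data. The following is a minimal sketch under stated assumptions: `predict` stands in for whatever call reaches your candidate model, exact-match accuracy is just one possible metric, and all names here are hypothetical.

```python
import hashlib
import json
from typing import Callable, Dict, List

Example = Dict[str, str]  # assumed shape: {"prompt": ..., "expected": ...}


def fingerprint(examples: List[Example]) -> str:
    """Short, stable hash of the test set contents, used to detect silent edits."""
    payload = json.dumps(examples, sort_keys=True, ensure_ascii=False).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()[:12]


def evaluate(predict: Callable[[str], str], examples: List[Example], version: str) -> Dict[str, object]:
    """Score a model callable with case-insensitive exact match on the test set."""
    if not examples:
        raise ValueError("test set is empty")
    correct = sum(
        1
        for ex in examples
        if predict(ex["prompt"]).strip().lower() == ex["expected"].strip().lower()
    )
    return {
        "test_set_version": version,
        "fingerprint": fingerprint(examples),
        "n": len(examples),
        "accuracy": correct / len(examples),
    }


if __name__ == "__main__":
    # Placeholder data and a placeholder model; replace both with your own.
    test_set = [
        {"prompt": "Is this review positive? 'Great battery life.'", "expected": "yes"},
        {"prompt": "Is this review positive? 'Stopped working in a week.'", "expected": "no"},
    ]

    def always_yes(prompt: str) -> str:
        return "yes"

    print(evaluate(always_yes, test_set, version="v1"))
```

Storing the version label and fingerprint next to each result makes it possible to tell later whether two internal scores were measured on the same examples, which is the same comparability question the leaderboards raise.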

Frequently Asked Questions

Is there one best site for all NLP benchmark scores?

There isn’t a single perfect source. Benchmark-owned leaderboards are the most reliable for canonical scores, while aggregators like the Hugging Face Leaderboards hub and suites like HELM are better for broad comparisons across many models.

Where should I look for multilingual NLP benchmark results?

If you’re comparing embedding models, the MTEB leaderboard is a strong place to start because it includes multilingual coverage and multiple task types. For more general LLM comparisons, look for leaderboards that explicitly list multilingual tasks and datasets rather than assuming multilingual strength from a single score.

Why do I see different scores for what looks like the same benchmark?

Differences usually come from changes in benchmark versions, evaluation settings, or model variants. When possible, use sources that clearly label their most recent results, such as the HELM leaderboards.