Which AI benchmarks focus on energy efficiency of models?

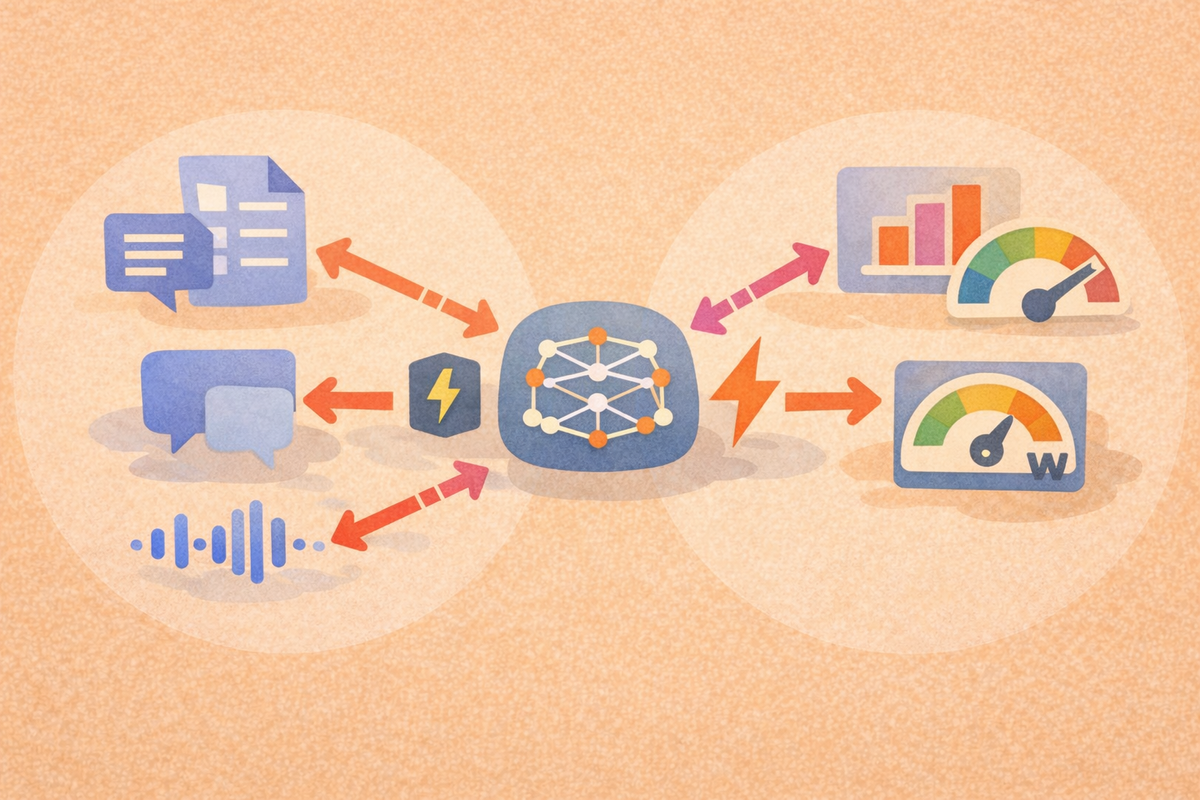

Energy-efficiency benchmarks measure how much useful AI work a system performs per unit of energy, not just how fast or accurate it is. These benchmarks help teams understand tradeoffs between performance, cost, and sustainability by pairing model results with power and energy measurements taken under controlled conditions.

Why energy efficiency needs its own benchmarks

Traditional AI benchmarks emphasize accuracy, latency, or throughput. Those metrics matter, but they do not explain how much energy a model consumes to achieve that performance. As models grow larger and inference workloads scale, energy use becomes a limiting factor for cost, deployment, and environmental impact. Energy-focused benchmarks exist to make this dimension visible and comparable.

System-level benchmarks that include power measurement

One of the most widely referenced efforts is MLPerf, maintained by MLCommons, which includes power and energy measurements alongside performance results in several of its benchmark suites. These results report metrics such as performance per watt or total energy to complete a defined workload. The emphasis is on standardized measurement methods, so that results can be compared across hardware, software stacks, and model configurations.
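To make "total energy to complete a defined workload" concrete, the sketch below integrates sampled power readings over the duration of a run. It is a minimal illustration and not MLPerf's measurement procedure: the function name, the one-second sampling interval, the inference count, and every reading are hypothetical.

```python
from typing import Sequence


def energy_joules(timestamps_s: Sequence[float], power_w: Sequence[float]) -> float:
    """Integrate sampled power (watts) over time (seconds) with the trapezoidal rule."""
    if len(timestamps_s) != len(power_w):
        raise ValueError("timestamps and power samples must have the same length")
    if len(timestamps_s) < 2:
        raise ValueError("need at least two samples to integrate")
    total = 0.0
    for i in range(1, len(timestamps_s)):
        dt = timestamps_s[i] - timestamps_s[i - 1]
        if dt <= 0:
            raise ValueError("timestamps must be strictly increasing")
        # Area of the trapezoid between two consecutive power samples.
        total += 0.5 * (power_w[i] + power_w[i - 1]) * dt
    return total


# Hypothetical power-meter readings: one sample per second over a 4-second run.
timestamps = [0.0, 1.0, 2.0, 3.0, 4.0]
power = [300.0, 320.0, 340.0, 320.0, 300.0]

# Hypothetical amount of work completed during the run.
inferences = 6400

energy = energy_joules(timestamps, power)
joules_per_inference = energy / inferences

print(f"total energy: {energy:.1f} J")
print(f"energy per inference: {joules_per_inference:.3f} J")
```

With these made-up readings the run consumes 1,280 J, or 0.2 J per inference. Real measurement protocols also specify where the power meter sits, how often it samples, and how long the run must last, which is what makes results comparable.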

Another long-standing example is the Green500, which ranks supercomputers by energy efficiency, measured as floating-point performance per watt on the High-Performance Linpack (HPL) benchmark. HPL is not an AI workload, but the list is often cited when evaluating the efficiency of systems used for AI training and inference at scale.

Benchmarks that emphasize server and system efficiency

Some benchmarks focus on the efficiency of the underlying systems that run AI workloads rather than the models alone. For example, SPECpower_ssj2008 measures server performance per watt with a server-side Java workload at a series of load levels, from full load down to active idle. These results are frequently used as supporting evidence when discussing the energy efficiency of AI deployments in data centers, even though the benchmark itself is not model-specific.
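Because efficiency is measured at several load levels, a single score has to aggregate them. The sketch below assumes one common approach: total operations summed over all levels, divided by total average power summed over the same levels, with an idle level included. The class, the function, and all numbers are hypothetical and are not taken from any published result.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class LoadLevel:
    target_load: float   # fraction of peak throughput; 0.0 means idle
    ops_per_s: float     # measured throughput at this level
    avg_power_w: float   # average power drawn at this level


def overall_ops_per_watt(levels: Iterable[LoadLevel]) -> float:
    """Aggregate score: summed throughput divided by summed average power."""
    levels = list(levels)
    total_ops = sum(level.ops_per_s for level in levels)
    total_power = sum(level.avg_power_w for level in levels)
    if total_power <= 0:
        raise ValueError("total power must be positive")
    return total_ops / total_power


# Hypothetical server: efficient at full load, but still drawing power when idle.
levels = [
    LoadLevel(target_load=1.0, ops_per_s=1000.0, avg_power_w=200.0),
    LoadLevel(target_load=0.5, ops_per_s=500.0, avg_power_w=140.0),
    LoadLevel(target_load=0.0, ops_per_s=0.0, avg_power_w=60.0),
]

peak_only = levels[0].ops_per_s / levels[0].avg_power_w
overall = overall_ops_per_watt(levels)

print(f"peak-load efficiency: {peak_only:.2f} ops/s per watt")
print(f"overall efficiency:   {overall:.2f} ops/s per watt")
```

In this example the server scores 5.0 ops/s per watt at full load but only 3.75 overall, because power drawn at partial load and at idle counts against it. That gap is why load-level benchmarks matter for deployments that rarely run at peak.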

How energy efficiency is typically reported

Energy-focused benchmarks usually combine workload definitions with power measurement protocols. Results may be reported as:

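- Performance per watt (for example, inferences per second per watt)
- Total energy consumed to complete a task (in joules or watt-hours)
- Energy normalized by accuracy or throughput (for example, joules per correct prediction)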

This framing matters because a faster model is not always more energy-efficient, and a highly accurate model may consume significantly more power for marginal gains.
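A small worked comparison illustrates the point. The sketch below assumes inferences run one at a time, so that energy per inference is roughly average power multiplied by latency. Both model configurations and all of their numbers are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RunResult:
    name: str
    latency_s: float     # time per inference, in seconds
    avg_power_w: float   # average system power during the run, in watts
    accuracy: float      # fraction of correct predictions, between 0 and 1

    @property
    def energy_per_inference_j(self) -> float:
        # Energy (J) = power (W) x time (s), assuming one inference at a time.
        return self.avg_power_w * self.latency_s

    @property
    def energy_per_correct_j(self) -> float:
        # Energy normalized by accuracy: joules spent per correct prediction.
        return self.energy_per_inference_j / self.accuracy


# Hypothetical results: "fast" has lower latency, "efficient" draws less power.
fast = RunResult("fast", latency_s=0.010, avg_power_w=400.0, accuracy=0.92)
efficient = RunResult("efficient", latency_s=0.016, avg_power_w=200.0, accuracy=0.90)

for run in (fast, efficient):
    print(
        f"{run.name}: {run.latency_s * 1000:.0f} ms, "
        f"{run.energy_per_inference_j:.2f} J per inference, "
        f"{run.energy_per_correct_j:.2f} J per correct prediction"
    )
```

Here the faster configuration uses about 4.0 J per inference against about 3.2 J for the slower one, and it remains the more expensive option after normalizing by accuracy. Whether a two-point accuracy gain justifies the extra energy is a judgment the benchmark can inform but not make.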

Frequently Asked Questions

Are there benchmarks that measure model energy efficiency directly?

Most benchmarks measure energy at the system or workload level rather than isolating the model alone, since models rarely run without supporting infrastructure.

Do energy-efficiency benchmarks replace accuracy benchmarks?

No. They complement accuracy and performance benchmarks by adding visibility into cost and sustainability tradeoffs.

Can energy benchmarks be compared across different organizations?

Comparisons are most meaningful when measurement methods, workloads, and configurations are similar. Large differences in setup can distort results.

Are energy benchmarks relevant for inference as well as training?

Yes. Inference often runs continuously at scale, which makes energy efficiency especially important for long-term operational costs.

Why do some benchmarks report performance per watt instead of total energy?

Performance-per-watt metrics make it easier to compare systems that complete work at different speeds while still accounting for power usage.
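A short derivation shows why. The symbols are assumptions of this sketch and not notation from any specific benchmark: $N$ is the number of work units completed (for example, inferences), $T$ is the run time in seconds, and $E$ is the energy consumed in joules.

$$
\frac{\text{throughput}}{\text{average power}} = \frac{N / T}{E / T} = \frac{N}{E}
$$

Run time cancels, so performance per watt is the same quantity as work per joule. Total energy, by contrast, depends on how much work the benchmark happens to include, so it is only comparable between runs of the same workload.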